
A simple camera for a large team - UIImagePicker edition

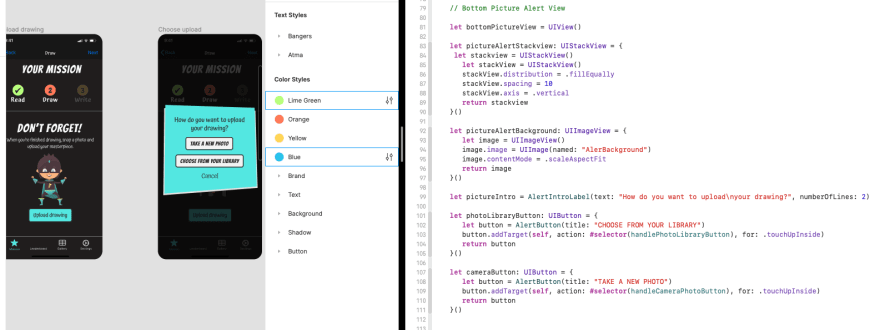

At the end of my time in Lambda School’s iOS program, we participated in a program called Labs. In short, Labs is meant to be an internship that lets students work on real-world projects: either production-based products or concept pieces that are being taken to market. These are great opportunities for students to shine and demonstrate their newfound skills to each other, but more importantly to stakeholders whose products are not made by the school (i.e., not a school project).

In my case, I was part of a large team of 15 developers: 4 iOS, 7 Web, and 4 Data Science students. The project we were assigned was for a small start-up called Story Squad. The company was founded by a 6th-grade teacher who wanted to offer young students an alternative way of learning, one in which they process stories through creative writing and art. To learn more about Story Squad, follow this link.

This company’s product has many useful features that help students learn the material they are assigned in more depth. The primary feature we were asked to implement was giving users access to the iPhone’s camera so they could take photos of documents created by students. As with most projects for a large iOS team, the first real challenge was dividing up the tasks. I volunteered to take on the implementation of the first camera function, which simply takes a photo of the user’s drawing so it can later be sent to a back end.

My task was to work out a way to give users access to the iPhone’s camera. It was a simple, straightforward build of a feature that had not yet been implemented.

One big concern before I started implementing my solution was that the project was going to be built with programmatic UI. Views written in code are not a problem in themselves, but the developer writing them needs to keep in mind that not every team member may know programmatic UI. That can create conflicts within the team if proper measures are not taken, such as making it common practice to comment the code for those who are less familiar with programmatic UI.
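As an illustration of the kind of commenting that helps teammates who are new to programmatic UI, here is a minimal sketch of a button built entirely in code and wired to a camera handler. The class name `CameraViewController`, the `cameraButton` property, and the button title are hypothetical, and the handler's body is left as a placeholder.

```swift
import UIKit

// Hypothetical view controller, used only to illustrate commented programmatic UI.
final class CameraViewController: UIViewController {

    // The button is created in code rather than in a storyboard.
    let cameraButton: UIButton = {
        let button = UIButton(type: .system)
        button.setTitle("Take Photo", for: .normal)
        // Opt out of autoresizing-mask constraints so our Auto Layout constraints apply.
        button.translatesAutoresizingMaskIntoConstraints = false
        return button
    }()

    override func viewDidLoad() {
        super.viewDidLoad()
        view.backgroundColor = .systemBackground

        // 1. Add the button to the view hierarchy before constraining it.
        view.addSubview(cameraButton)

        // 2. Center the button inside the safe area.
        NSLayoutConstraint.activate([
            cameraButton.centerXAnchor.constraint(equalTo: view.safeAreaLayoutGuide.centerXAnchor),
            cameraButton.centerYAnchor.constraint(equalTo: view.safeAreaLayoutGuide.centerYAnchor)
        ])

        // 3. Wire taps to the camera handler; #selector is why the handler is marked @objc.
        cameraButton.addTarget(self, action: #selector(handleCameraPhotoButton), for: .touchUpInside)
    }

    @objc func handleCameraPhotoButton() {
        // Placeholder: configure and present the image picker here.
    }
}
```

Numbered comments like these cost little and let a teammate who only knows storyboards follow the order in which the view is assembled.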

When implementing a camera view in a project of this size, the first thing to consider is whether the project needs a custom camera view. The second is what style the stakeholder or UX designer prefers. Once that choice has been made, the build can continue. In this case there was no need for a custom camera view, so I opted to insert a simple image picker that gave us the built-in camera view.

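```swift
@objc func handleCameraPhotoButton() {
    // Create the system image picker and route its results back to this view controller.
    let photoPicker = UIImagePickerController()
    photoPicker.delegate = self

    // Only configure the camera if this device actually has one (the simulator does not).
    if UIImagePickerController.isSourceTypeAvailable(.camera) {
        photoPicker.allowsEditing = false                                  // Return the photo as taken.
        photoPicker.sourceType = UIImagePickerController.SourceType.camera // Use the built-in camera UI.
        photoPicker.cameraCaptureMode = .photo                             // Still photos, not video.
        photoPicker.modalPresentationStyle = .fullScreen
        present(photoPicker, animated: true, completion: nil)
    } else {
        // Fall back to an alert explaining that no camera is available.
        noCamera()
    }
}
```

I started by creating a constant called `photoPicker`, an instance of `UIImagePickerController`.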

```swift
let photoPicker = UIImagePickerController()
```

Then I set the picker’s delegate to `self`, so that once the user takes a photo, the picker can hand the image back to the view controller, which can then update the view with it.
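Setting `delegate = self` only compiles if the view controller adopts both `UIImagePickerControllerDelegate` and `UINavigationControllerDelegate`, and it only does something useful once the delegate methods are implemented. The post does not show that part, so here is a minimal sketch. The class name `CameraViewController` and the `capturedImage` property are hypothetical; where the image goes afterwards (an image view, an upload to the back end) is up to the app.

```swift
import UIKit

// Hypothetical view controller; adopting both protocols is required by the picker's delegate type.
final class CameraViewController: UIViewController,
                                  UIImagePickerControllerDelegate,
                                  UINavigationControllerDelegate {

    // Holds the most recent photo so it can be displayed or sent to a back end later.
    private(set) var capturedImage: UIImage?

    // Called after the user taps "Use Photo" in the system camera UI.
    func imagePickerController(_ picker: UIImagePickerController,
                               didFinishPickingMediaWithInfo info: [UIImagePickerController.InfoKey: Any]) {
        // allowsEditing is false, so read the original (unedited) image.
        if let image = info[.originalImage] as? UIImage {
            capturedImage = image
        }
        picker.dismiss(animated: true, completion: nil)
    }

    // Called when the user taps "Cancel"; simply close the picker.
    func imagePickerControllerDidCancel(_ picker: UIImagePickerController) {
        picker.dismiss(animated: true, completion: nil)
    }
}
```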

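```swift
photoPicker.delegate = self
```

After this, I created an if-else statement that starts by checking whether the camera source type is available on the device. If it is, the picker is configured to take still photos without editing and is presented full screen.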

```swift
if UIImagePickerController.isSourceTypeAvailable(.camera) {
    photoPicker.allowsEditing = false
    photoPicker.sourceType = UIImagePickerController.SourceType.camera
    photoPicker.cameraCaptureMode = .photo
    photoPicker.modalPresentationStyle = .fullScreen
    present(photoPicker, animated: true, completion: nil)
}
```

In the `else` branch, we call `noCamera()`, a function that presents an alert informing the user that no camera is available on their device.

```swift
else {
    noCamera()
}
```

One thing to keep in mind when testing the camera view is that you cannot test it in the simulator, which has no camera, so you will have to run the project on a physical device. That turns out to be simple: select your iPhone as the run destination in Xcode instead of a simulator.
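Because the simulator reports no camera, running the app there takes the `else` branch, so the `noCamera()` alert is what you will see. The post does not show that helper, so here is a minimal sketch of one way to write it with `UIAlertController`. The class name `CameraViewController`, the `makeNoCameraAlert()` helper, and the alert wording are hypothetical.

```swift
import UIKit

final class CameraViewController: UIViewController {

    // Builds the alert separately so its contents can be checked without presenting it.
    func makeNoCameraAlert() -> UIAlertController {
        let alert = UIAlertController(title: "No Camera",
                                      message: "This device does not have a camera available.",
                                      preferredStyle: .alert)
        // A single dismiss button is enough; there is nothing else the user can do here.
        alert.addAction(UIAlertAction(title: "OK", style: .default, handler: nil))
        return alert
    }

    // Hypothetical version of the noCamera() helper called from the else branch.
    func noCamera() {
        present(makeNoCameraAlert(), animated: true, completion: nil)
    }
}
```

Splitting construction from presentation is a small design choice that makes the alert testable in a unit test, where nothing is actually on screen.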

The project is not yet complete, since we only built a small piece of a big idea. The feature we were tasked with building is currently a Minimum Viable Product (MVP). The currently shipped features are:

In conclusion, the overall build was as difficult as I originally anticipated. The team I was assigned to was always communicating, which made our responsibilities that much clearer. I hope that one day they add features such as live learning or communication tools, which would allow fellow students to socialize while learning remotely.

In the end, this project helped me better understand what it means to work on a team of this scale, where invested stakeholders care as much as the developers do about seeing their project built. I would like to give a special thank you to my team (Bryson Saclausa, Norlan Tibanear, and Bohdan Tkachenko) for all the hard work they put into this two-month project. I hope to work with you all again soon.
