
The Ultimate Guide to Browser-Side Storage

by Craig Buckler

It's usually necessary to store user data server side in a database or similar repository. This guarantees persistence and ensures users can access their data from any web-connected browser (presuming your storage system is reliable, of course!)

Storing data in the browser becomes a more viable option when:

You're retaining an application's state, such as the active panel, chosen theme, input options, etc.

You're storing data locally, perhaps for practicality, performance, privacy, or pre-upload reasons.

You're creating a Progressive Web App which works offline and has no server-side requirements other than the initial download and updates.

The data you store in the browser will either be persistent (retained until you delete it) or temporary (deleted when the user's session ends). In practice, it's more complex:

The OS, browser, plugins, or user can block or delete data depending on the storage space available, supported technologies, vendor policies, configuration settings, etc.

Temporary data is often retained beyond the session. For example, you can close then reopen a browser tab and data should remain available.

In all cases, data is domain-specific: values stored by one domain (or sub-domain) cannot be read, updated, or deleted by another. That said, a third-party page script loaded from another domain has the same level of access as any of your scripts.

To help you choose the best option, browser storage options below are split into three categories:

Current options. APIs you can use today in all modern and most older browsers.

Future options. Experimental APIs which should be stable within a few years.

Past options. Deprecated APIs you should avoid at all costs!

But first, a few tips to help you examine what's already stored and check what the browser supports.

The DevTools Application panel (Storage in Firefox) permits viewing, modification, and deletion of most storage containers. The Network panel is also useful when examining downloaded data or cookies sent by HTTP requests and responses.

You can verify storage API support by looking for specific properties or methods of the window object, e.g.

```javascript
// is localStorage available?
if (typeof localStorage !== 'undefined') {
  // yes!
}
```

It's more difficult to check whether a data store has space available. You can use a try/catch block to detect failures:

```javascript
try {
  // Web Storage only holds strings, so serialize objects first
  localStorage.setItem('myObject', JSON.stringify(myObject));
}
catch (e) {
  console.log('localStorage failed:', e);
}
```

Alternatively, you can use the Promise-based Storage API to check the remaining space for Web Storage, IndexedDB, and the Cache API. Its asynchronous .estimate() method returns an object with two properties:

quota: the space available to the domain, and

usage: the space already in use.

```javascript
(async () => {

  // Storage API support
  if (!navigator.storage) return;

  const storage = await navigator.storage.estimate();

  console.log(`permitted: ${ Math.floor(storage.quota / 1024) } KB`);
  console.log(`used     : ${ Math.floor(storage.usage / 1024) } KB`);
  console.log(`% used   : ${ Math.round((storage.usage / storage.quota) * 100) }%`);
  console.log(`remaining: ${ Math.floor((storage.quota - storage.usage) / 1024) } KB`);

})();
```

This API is not supported in Internet Explorer or older versions of Safari. Neither will it help you decide what to do when storage runs out! You may need to consider server-side storage but retain a proportion of frequently-used data locally.
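A typeof check only confirms that localStorage exists, yet it can be present but unusable: reading it can throw when the user blocks storage, and writes fail once the quota is full. A minimal sketch, using the hypothetical helper names storageAvailable() and saveItem(), tests a real write and reports quota failures instead of throwing:

```javascript
// Hypothetical helper: returns true when a Web Storage object can be written.
// type is the global property name, e.g. 'localStorage' or 'sessionStorage'.
function storageAvailable(type = 'localStorage') {
  try {
    // reading the property can itself throw a SecurityError when storage is blocked
    const storage = globalThis[type];
    const testKey = '__storage_test__';
    storage.setItem(testKey, testKey);
    storage.removeItem(testKey);
    return true;
  }
  catch (e) {
    // missing, blocked, or full (existing values may still be readable when full)
    return false;
  }
}

// Hypothetical helper: store a string and return false if the quota is exceeded.
function saveItem(storage, key, value) {
  try {
    storage.setItem(key, value);
    return true;
  }
  catch (e) {
    if (e && e.name === 'QuotaExceededError') {
      console.warn(`storage full: "${ key }" was not saved`);
      return false;
    }
    throw e; // any other error is unexpected
  }
}
```

For example, if (storageAvailable('localStorage')) saveItem(localStorage, 'theme', 'dark'); only attempts the write when storage works, and a false return tells you to fall back to another option.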

The following client-side storage APIs are available in all browsers going back a decade or longer. They should pose no problems in modern applications or IE11.

JavaScript variables are the fastest storage option but they're highly volatile. The browser deletes data when you refresh or navigate away. Variables have no storage limit (other than your computer's memory limit), but the browser will slow down as you fill the available memory.

```javascript
const
  a = 1,
  b = 'two',
  c = {
    one: 'Object',
    two: 'three'
  };
```

Use when:

Advantages:

Disadvantages:

While not strictly a storage method, you can append values to any DOM node in named attributes or as a property of that object, e.g.

```javascript
// locate a DOM node
const element = document.getElementById('mycomponent');

// store values
element.myValue1 = 1;
element.setAttribute('myValue2', 2);
element.dataset.myValue3 = 3;

// retrieve values
console.log( element.myValue1 );                 // 1
console.log( element.getAttribute('myValue2') ); // "2"
console.log( element.dataset.myValue3 );         // "3"
```

It's fast and there are no strict limits, although it's not ideal for large volumes of data. A page refresh or a third-party script can wipe the data, but the server can write the attributes into the HTML again.

Adding a property (element.myValue1) makes no change to the HTML itself; it works because DOM nodes are JavaScript objects which accept new properties. Alternatively, you can set and get element attributes with:

.setAttribute() and .getAttribute(). Be careful not to use an HTML attribute with associated functionality such as disabled or hidden.

the dataset property. This appends an attribute prefixed with data- so it will not have default functionality.

the classList API to add or remove classes from the class attribute. These cannot (easily) have a value assigned so are most practical for retaining Boolean variables.

All three options convert the value to a string so deserialization may become necessary.
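Because dataset values always come back as strings, a pair of hypothetical helpers can JSON-encode values on the way in and decode them on the way out, while classList.toggle() (whose optional second argument forces the class on or off) suits Boolean flags. A sketch, where setData(), getData(), setFlag(), and getFlag() are illustrative names:

```javascript
// Hypothetical helpers: keep a JSON-serializable value in a data-* attribute.
// A camelCase name such as 'userPrefs' maps to the attribute data-user-prefs.
function setData(element, name, value) {
  element.dataset[name] = JSON.stringify(value);
}

function getData(element, name) {
  const raw = element.dataset[name];
  // undefined means the attribute has not been set
  return raw === undefined ? undefined : JSON.parse(raw);
}

// Hypothetical helpers: store a Boolean as the presence of a class name.
function setFlag(element, className, on) {
  element.classList.toggle(className, Boolean(on));
}

function getFlag(element, className) {
  return element.classList.contains(className);
}
```

For example, setData(element, 'userPrefs', { size: 2 }) writes a data-user-prefs attribute, and getData(element, 'userPrefs').size returns the number 2 rather than the string "2".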

Use when:

Advantages:

Disadvantages:

The Web Storage API provides two objects with the same methods:

window.localStorage for persistent data, and

window.sessionStorage for temporary session data.

The browser limits each domain to 5MB and, unusually, read and write operations are synchronous, so they can delay other JavaScript processes.
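Both objects store strings only: a number such as 1 comes back as "1", and a plain object is saved as "[object Object]". A minimal sketch of hypothetical saveJSON() and loadJSON() helpers serializes values with JSON so either localStorage or sessionStorage can hold structured data:

```javascript
// Hypothetical helpers: pass localStorage or sessionStorage as `storage`.
// Both calls are synchronous, like the Web Storage API itself.
function saveJSON(storage, key, value) {
  storage.setItem(key, JSON.stringify(value));
}

function loadJSON(storage, key, fallback = null) {
  const raw = storage.getItem(key); // null when the key does not exist
  if (raw === null) return fallback;
  try {
    return JSON.parse(raw);
  }
  catch (e) {
    return fallback; // the stored string was not valid JSON
  }
}
```

For example, saveJSON(localStorage, 'settings', { theme: 'dark', panel: 2 }) followed by loadJSON(localStorage, 'settings').panel returns the number 2.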

```javascript
localStorage.setItem('value1', 1);
localStorage.getItem('value1'); // "1"
localStorage.removeItem('value1'); // gone
```

Changing a value raises a storage event in all other browser tabs/windows on the same domain so your application views can update as necessary:

```javascript
window.addEventListener('storage', e => {
  console.log(`key changed: ${ e.key }`);
  console.log(`from : ${ e.oldValue }`);
  console.log(`to : ${ e.newValue }`);
});
```

Use when:

Advantages:

Disadvantages:

name used by another component

So why do few developers adopt IndexedDB?

The main reason is the API. It's old, requires callback juggling, and feels inelegant when compared to modern async/await operations. You can Promisify it with wrapper functions, e.g. create a database connection when passed a name, a version number, and an upgrade function which runs when the version changes:

```javascript
// connect to IndexedDB database
function dbConnect(dbName, version, upgrade) {

  return new Promise((resolve, reject) => {

    const request = indexedDB.open(dbName, version);

    request.onsuccess = e => {
      resolve(e.target.result);
    };

    request.onerror = e => {
      console.error('connect error:', e.target.error);
      reject(e.target.error);
    };

    request.onupgradeneeded = upgrade;

  });

}
```

Then connect and initialize a mystore object store:

```javascript
(async () => {

  const db = await dbConnect('db', 1, e => {
    // runs when the database is created or its version number increases
    e.target.result.createObjectStore('mystore');
  });

  // ...use db here

})();
```

Use the db connection to .add new data items in a transaction:

```javascript
db.transaction(['mystore'], 'readwrite')
  .objectStore('mystore')
  .add('the value', 'key1')
  .onsuccess = () => console.log( 'added' );
```

or retrieve values:

```javascript
db.transaction(['mystore'], 'readonly')
  .objectStore('mystore')
  .get('key1')
  .onsuccess = e => console.log( e.target.result );
```

Use when:

Advantages:

Disadvantages:

The Cache API stores HTTP request and response objects. It's primarily used in Progressive Web Apps to cache network responses so an app can serve cached resources when it's offline. It's not practical for storing other types of data.
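That offline role usually means a cache-first strategy inside a Service Worker: answer from the cache when a match exists, otherwise fetch from the network and keep a copy. A minimal sketch, where cacheFirst() and the 'myCache' name are hypothetical, and only GET requests are handled because Cache.put() rejects other methods:

```javascript
// Hypothetical cache-first helper for a Service Worker fetch handler.
// cacheStorage and fetchFn default to the worker's global caches and fetch.
async function cacheFirst(request, cacheName = 'myCache', cacheStorage = caches, fetchFn = fetch) {
  const cache = await cacheStorage.open(cacheName);

  // serve a stored response when one exists
  const cached = await cache.match(request);
  if (cached) return cached;

  // otherwise go to the network
  const response = await fetchFn(request);

  // a response body can only be read once, so cache a clone
  // and return the original; error responses are not cached
  if (response.ok) await cache.put(request, response.clone());

  return response;
}

// Usage in a Service Worker script:
// self.addEventListener('fetch', e => {
//   if (e.request.method === 'GET') e.respondWith(cacheFirst(e.request));
// });
```

Cache-first suits static assets which rarely change; frequently updated data may be better served network-first with the cache as a fallback.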

The cache storage size depends on the device. Chrome-based browsers typically permit 100MB per domain, but Safari limits it to 50MB and expires data after 14 days.

You're unlikely to encounter the Promise-based Cache API outside Service Workers, but you can store the content of a request in a named cache:

```javascript
// inside an async function or module

// open a cache
const cache = await caches.open( 'myCache' );

// fetch and store response
await cache.add( '/myfile.json' );
```

and retrieve it later:

```javascript
// open cache
const cache = await caches.open( 'myCache' );

// fetch stored response (undefined when nothing matches)
const resp = await cache.match( '/myfile.json' );

// get content as text
const text = resp ? await resp.text() : null;
```

Use when:

Advantages:

Disadvantages:

Cookies have a bad reputation, but they're essential for any web system which needs to maintain server/browser state such as logging on. Strictly speaking, cookies are not a client-side storage option since the server and browser can modify data. It's also the only option which can automatically expire data after a period of time.

The cookie specification asks browsers to support at least 50 cookies per domain of up to 4KB each, although older browsers permitted as few as 20. Those limits are restrictive, but bandwidth is the bigger concern: the browser sends the domain's cookies with every HTTP request. If you set a total of 50KB then request ten 1-byte files, it would incur around 500KB of cookie-specific upload bandwidth to fetch just ten bytes.
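To gauge that overhead on your own pages, a rough sketch (the cookieBytes() and cookieOverhead() names are hypothetical) measures the UTF-8 size of document.cookie and multiplies it by a number of requests. It undercounts slightly: HttpOnly cookies are hidden from document.cookie and the Cookie header name itself isn't included.

```javascript
// Hypothetical helper: bytes of cookie data visible to JavaScript.
// HttpOnly cookies are not included in document.cookie, so the real
// Cookie request header can be larger.
function cookieBytes(cookieString = document.cookie) {
  return new TextEncoder().encode(cookieString).length;
}

// Hypothetical helper: approximate cookie upload for a number of requests,
// assuming every request to the domain carries all of these cookies.
function cookieOverhead(requests, cookieString = document.cookie) {
  return cookieBytes(cookieString) * requests;
}
```

For example, console.log(`${ cookieOverhead(10) } bytes of cookies for ten requests`) logs the estimate for the current page.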

The JavaScript document.cookie API is simplistic with name/value pairs separated by an equals symbol (=). Each assignment adds or updates a single cookie rather than replacing the others:

```javascript
document.cookie = 'cookie1=1';
document.cookie = 'cookie2=two';
```

Values cannot contain commas, semicolons, or whitespace, so encodeURIComponent() may be necessary:

```javascript
document.cookie = `cookie3=${ encodeURIComponent('Hello world!') }`;
```

You can add further options with semicolon separators:

| option | description |
|---|---|
| ;domain= | cookies are only accessible to the current domain, but ;domain=site.com permits use on any subdomain |
| ;path= | cookies are only available to the current and child paths, but ;path=/ permits use on any path |
| ;max-age= | the cookie expiry time in seconds |
| ;expires= | a cookie expiry date, e.g. ;expires=Mon, 12 Jul 2021 11:22:33 GMT (format with date.toUTCString()) |
| ;secure | send the cookie over HTTPS only |
| ;HttpOnly | cookies are server-only and not available to client-side JavaScript |
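Assembling these option strings by hand is error-prone, since browsers silently ignore a mistyped attribute. A minimal sketch of a hypothetical cookieString() builder maps an options object onto the attributes in the table; HttpOnly is left out because JavaScript cannot set it (only the server can).

```javascript
// Hypothetical helper: build a string for document.cookie assignment.
// options: domain, path, maxAge (seconds), expires (a Date), secure (Boolean)
function cookieString(name, value, { domain, path, maxAge, expires, secure } = {}) {
  let str = `${ encodeURIComponent(name) }=${ encodeURIComponent(value) }`;
  if (domain) str += `; domain=${ domain }`;
  if (path) str += `; path=${ path }`;
  if (maxAge !== undefined) str += `; max-age=${ maxAge }`; // 0 expires the cookie now
  if (expires) str += `; expires=${ expires.toUTCString() }`;
  if (secure) str += '; secure';
  return str;
}
```

For example, document.cookie = cookieString('state', JSON.stringify({ id: 123 }), { path: '/', maxAge: 600 }) sets a ten-minute cookie, and repeating the call with an empty value and maxAge: 0 deletes it.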

For example, set a state object accessible on any path which expires in 10 minutes:

```javascript
const state = { id:123 };
document.cookie = `state=${ encodeURIComponent(JSON.stringify(state)) }; path=/; max-age=600`;
```

To examine cookies, you must parse the long document.cookie string to extract name and value pairs using a function such as:

```javascript
// cache of parsed cookie values
// (reset to undefined after changing document.cookie)
let cookieCache;

// return a cookie value
function cookieValue(name) {

  if (!cookieCache) {

    cookieCache = {};

    // parse "name1=value1; name2=value2" pairs
    document.cookie
      .split('; ')
      .forEach(nv => {
        // split on the first "=" only, since a value may contain "="
        const pos = nv.indexOf('=');
        const n = pos < 0 ? nv : nv.slice(0, pos);
        if (n) {
          let v = decodeURIComponent( pos < 0 ? '' : nv.slice(pos + 1) );
          try { v = JSON.parse(v); }
          catch(e){} // not JSON: keep the string
          cookieCache[ n ] = v;
        }
      });

  }

  // return cookie value
  return cookieCache[ name ];

}

const state = cookieValue('state');
```

Use when:

Advantages:

max-age and Expires

Disadvantages:

The following client-side storage APIs are new. They have limited browser support, may be behind experimental flags, and are subject to change. There's no guarantee they'll ever become a Web Standard so avoid using them for mission-critical applications.

Browsers run in an isolated sandboxed environment which prevents malicious sites from accessing personal data stored on your hard drive.

The downside is that you cannot 'edit' a local file. Consider a web-based text editor. You must upload a file to that application, edit it, then download the updated version. And what if you're working on several files? You could store the file in the cloud or use synchronization services such as Dropbox and OneDrive, but those can be impractical or overkill for simple apps.

The new File System Access API allows a browser to read, write, update, and delete from your local file system when you grant permission to a specific file or directory. A returned FileSystemHandle allows your web application to behave like a desktop app.
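Opening a file follows a similar pattern: showOpenFilePicker() resolves to an array of file handles, and each handle's getFile() returns a File which can be read as text. Browsers only show the picker in response to a user action such as a click. A minimal sketch, with a hypothetical loadTextFile() function which also returns the handle so changes can be written back to the same file later:

```javascript
// Hypothetical helper: ask the user to pick one text file and read it.
// Returns null when the API is unsupported or the user cancels the picker.
async function loadTextFile() {

  // feature detection: the API is not available in every browser
  if (!('showOpenFilePicker' in window)) return null;

  try {
    // the picker resolves to an array of FileSystemFileHandle objects
    const [handle] = await window.showOpenFilePicker({
      types: [{ description: 'Text files', accept: { 'text/plain': ['.txt'] } }],
      multiple: false
    });

    // getFile() returns a File (a Blob) which can be read as text
    const file = await handle.getFile();

    // keep the handle so the same file can be written back later
    return { handle, name: file.name, text: await file.text() };
  }
  catch (e) {
    // the picker rejects with an AbortError when the user cancels
    if (e.name === 'AbortError') return null;
    throw e;
  }

}
```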

The following example function saves a Blob to a local file:

```javascript
async function save( blob ) {

  // create handle to a local file
  const handle = await window.showSaveFilePicker();

  // create writable stream
  const stream = await handle.createWritable();

  // write data
  await stream.write(blob);

  // close the file
  await stream.close();

}
```

Use when:

Advantages:

Disadvantages:

The File and Directory Entries API provides a domain-specific virtual local file system where a web app can read and write files without having to request user permission.

While some support is available in most browsers, it's not on the Web Standards track, and could easily slip into the "storage options to avoid" category. The API is unlikely to be practical for a number of years.

Use when:

Advantages:

Disadvantages:

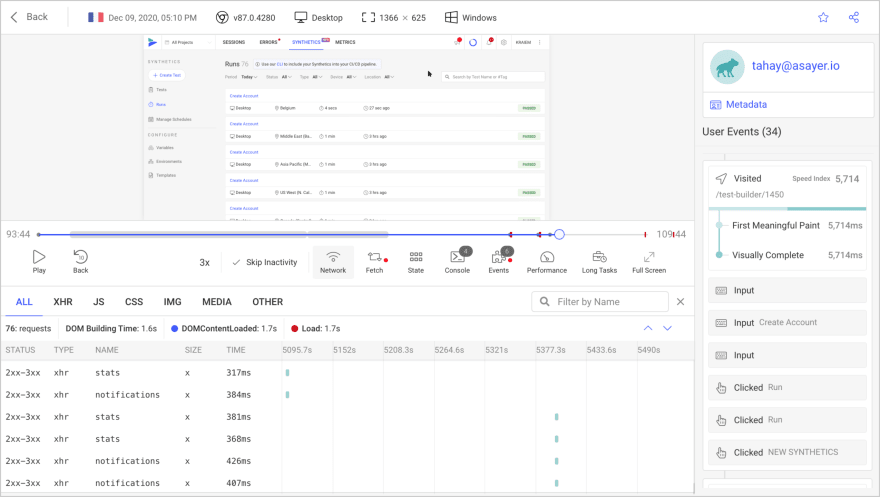

Debugging a web application in production may be challenging and time-consuming. OpenReplay is an Open-source alternative to FullStory, LogRocket and Hotjar. It allows you to monitor and replay everything your users do and shows how your app behaves for every issue.

It’s like having your browser’s inspector open while looking over your user’s shoulder.

OpenReplay is the only open-source alternative currently available.

It’s like having your browser’s inspector open while looking over your user’s shoulder.

OpenReplay is the only open-source alternative currently available.

Happy debugging, for modern frontend teams - Start monitoring your web app for free

The following client-side storage APIs are old, deprecated, or hacks. They may continue to work, but are best avoided for new projects.

The window.name property sets and gets the name of the current window. This was typically used to keep track of several windows/tabs, but the browser retains the string value between refreshes or linking elsewhere.

Bizarrely, window.name will accept a few megabytes of string data:

```javascript
let value = { a:1, b:2, c:3 };
window.name = JSON.stringify( value );
```

which you can read back at any point:

```javascript
let newValue = JSON.parse( window.name );
```

window.name was never designed for data storage but was often used as a hack or to polyfill localStorage APIs in IE7 and below.

Use when:

Advantages:

Disadvantages:

window.name when you link elsewhere.

```javascript
// create database (name, version, description, size in bytes)
const db = openDatabase('todo', '1.0', 'to-do list', 1024 * 1024);

db.transaction( t => {

  // create table
  t.executeSql('CREATE TABLE task (id unique, name)');

  // add record (? placeholders are filled from the array)
  t.executeSql('INSERT INTO task (id, name) VALUES (?, ?)', [1, 'write article']);

});

// output all items
db.transaction( t => {

  t.executeSql(
    'SELECT * FROM task',
    [],
    (t, res) => { console.log(res.rows); }
  );

});
```

Chrome and Safari offered inconsistent implementations of WebSQL, but Mozilla and Microsoft opposed it in favor of IndexedDB. The API was deprecated in 2010 and will not receive updates.

Use when:

Advantages:

Disadvantages:

```
CACHE MANIFEST
# cache files
index.html
css/style.css
js/main.js
images/image.png

# use from network when available
NETWORK:
network.html

# fallback when not available
FALLBACK:
. fallback.html
images/ images/offline.png
```

This looks simple when compared with Service Workers, but there were many AppCache issues and gotchas which would break your site. For example, the cache would only update when you changed the manifest, and non-cached resources would refuse to load on a cached page. AppCache was a failure - avoid it!

Use when:

Advantages:

Disadvantages:

Browsers have evolved over the past three decades so it's not surprising they offer a multitude of storage APIs which essentially do the same thing. It's not always easy to find the 'best' option and you may have to combine several depending on the functionality you're implementing, e.g.
