How FirstPort execute a Database as Code Strategy using DbUp, Terraform & GitHub Actions

As many of you will already know, I am Head of Technology at FirstPort.

A key part of my role is delivering FirstPort’s vision of ‘People First’ technology. To do this, it is imperative that I select the right technology to underpin the delivery of services that help make customers’ lives easier.

Today I want to talk about my selection of DbUp.

DbUp is an open-source .NET library that helps you to deploy changes to SQL Server databases. It tracks which SQL scripts have been run already, and runs the change scripts that are needed to get your database up to date.

You might ask: why DbUp and not EF Migrations?

I have used a lot of migration tools, and DbUp seems to me the purest solution. I don’t like C# "wrappers" that generate SQL for me. DDL is an easy language, and I don’t think we need a special tool for generating it.

Here at FirstPort, we also use Terraform to build the Azure SQL infrastructure and, of course, GitHub Actions. Today's focus, however, will mainly be on DbUp and the GitHub Actions workflows.

There are a number of goals we are aiming for:

At its core, DbUp is a script runner. Changes made to the database are done via a script:

    Script001_AddTableX.sql
    Script002_AddColumnFirstPortIdToTableX.sql
    Script003_AddColumnCustomerIdToTableX.sql

DbUp runs through a console application you write yourself, so you control which options to use, and you don’t need a lot of code.

You bundle those scripts and tell DbUp to run them. It compares that list against a list stored in the destination database. Any scripts not in that destination’s database list will be run. The scripts are executed in alphabetical order, and the results of each script are displayed on the console. Very simple to implement and understand.
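The comparison described above can be sketched in a few lines of C#. This is a simplified illustration rather than DbUp's actual implementation: the `JournalSketch` class and its `ScriptsToRun` method are hypothetical names, the journal is modelled as an in-memory list instead of a database table, and ordinal string ordering is an assumption.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class JournalSketch
{
    // Hypothetical helper: returns the bundled scripts that are not yet
    // recorded in the journal, sorted by name (ordinal ordering assumed).
    public static List<string> ScriptsToRun(
        IEnumerable<string> bundledScripts,
        IEnumerable<string> journalEntries)
    {
        var alreadyRun = new HashSet<string>(journalEntries, StringComparer.Ordinal);

        return bundledScripts
            .Where(name => !alreadyRun.Contains(name))
            .OrderBy(name => name, StringComparer.Ordinal)
            .ToList();
    }

    public static void Main()
    {
        var bundled = new[]
        {
            "Script003_AddColumnCustomerIdToTableX.sql",
            "Script001_AddTableX.sql",
            "Script002_AddColumnFirstPortIdToTableX.sql",
        };

        // Pretend the destination database has already run the first script.
        var journal = new[] { "Script001_AddTableX.sql" };

        // Prints Script002_... followed by Script003_...
        foreach (var script in ScriptsToRun(bundled, journal))
        {
            Console.WriteLine(script);
        }
    }
}
```

Against a brand-new database the journal is empty, so every bundled script is run. The console output from such a first run looks like this: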

    Checking whether journal table exists..
    Journal table does not exist
    Is upgrade required: True
    Beginning database upgrade
    Checking whether journal table exists..
    Journal table does not exist
    Executing Database Server script 'DbUpLeaseExtract.BeforeDeploymentScripts.001_CreateLeaseExtractSchemaIfNotExists.sql'
    Checking whether journal table exists..
    Creating the [SchemaVersions] table
    The [SchemaVersions] table has been created
    Upgrade successful
    Success!

That works great when you’re deploying to a development or test environment. At FirstPort we prefer our DBAs to approve scripts before they go to production, and perhaps before they reach a staging or pre-production environment as well. This approval process is essential, especially when you first start deploying databases.

Migration scripts are a double-edged sword. You have total control, which gives you great power. However, it’s also easy to mess up. It all depends on the type of change being made and the SQL skills of the writer. The DBAs' trust in the process will be low when inexperienced C# developers are writing these migration scripts.

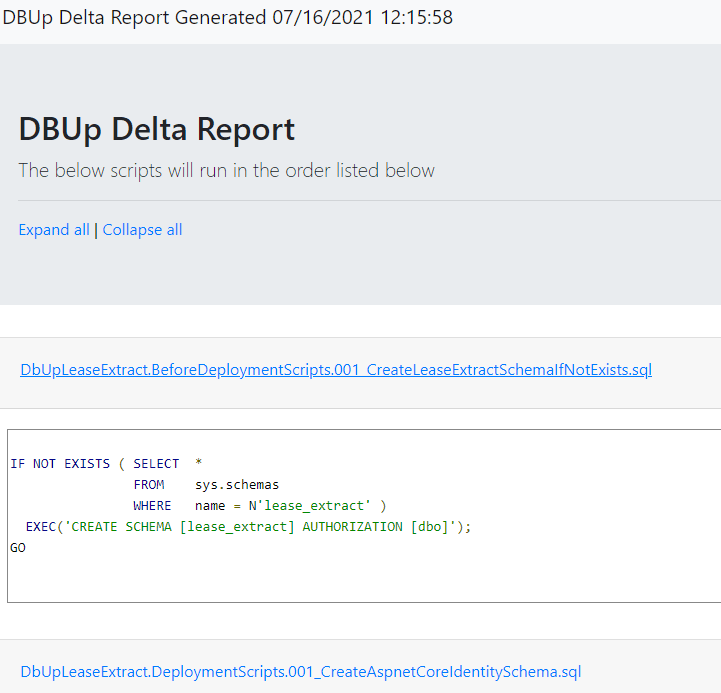

At FirstPort we added some code to generate an HTML report. It is an extension method that takes the path of the report you want to generate. That means this section goes from:

    var result = upgrader.PerformUpgrade();

    // Display the result
    if (result.Successful)
    {
        Console.ForegroundColor = ConsoleColor.Green;
        Console.WriteLine("Success!");
    }
    else
    {
        Console.ForegroundColor = ConsoleColor.Red;
        Console.WriteLine(result.Error);
        Console.WriteLine("Failed!");
    }

To:

    if (args.Any(a => a.StartsWith("--PreviewReportPath", StringComparison.InvariantCultureIgnoreCase)))
    {
        // Generate a preview file so GitHub Actions can generate an artifact for approvals
        var report = args.FirstOrDefault(x => x.StartsWith("--PreviewReportPath", StringComparison.OrdinalIgnoreCase));
        report = report.Substring(report.IndexOf("=") + 1).Replace(@"""", string.Empty);

        var fullReportPath = Path.Combine(report, "UpgradeReport.html");
        Console.WriteLine($"Generating the report at {fullReportPath}");

        upgrader.GenerateUpgradeHtmlReport(fullReportPath);
    }
    else
    {
        var result = upgrader.PerformUpgrade();

        // Display the result
        if (result.Successful)
        {
            Console.ForegroundColor = ConsoleColor.Green;
            Console.WriteLine("Success!");
        }
        else
        {
            Console.ForegroundColor = ConsoleColor.Red;
            Console.WriteLine(result.Error);
            Console.WriteLine("Failed!");

            // Non-zero exit code so the calling GitHub Actions step fails
            Environment.ExitCode = 1;
        }
    }

When the PreviewReportPath parameter is supplied, this code generates a report containing all the scripts that will be run.

With those features in mind, we will put together a .NET Core DbUp console application to deploy to Azure SQL, and then a process in GitHub Actions to run that console application.

I chose .NET Core over .NET Framework because it can be built and run anywhere. DbUp is a .NET Standard library, so it will work just as well in a .NET Framework application.

Let’s fire up our IDE of choice and create a .NET Core console application. I am using VSCode to build this console application. I prefer it over full-blown Visual Studio.

The console application needs some scripts to deploy. I’m going to add three folders, BeforeDeploymentScripts, DeploymentScripts and PostDeploymentScripts, and populate them with some script files.

I recommend you add a prefix, such as 001, 002, etc., to the start of your script file name. DbUp runs the scripts in alphabetical order, and that prefix helps ensure the scripts are run in the correct order.
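To see why a zero-padded prefix matters, here is a small sketch that sorts two sets of names with an ordinal string sort. The file names and the `ScriptOrderSketch` class are made up for illustration, and the ordinal comparer is an assumption about how the names are compared.

```csharp
using System;
using System.Linq;

public static class ScriptOrderSketch
{
    // Sorts script names the way a plain ordinal string sort would.
    public static string[] Sorted(params string[] names) =>
        names.OrderBy(n => n, StringComparer.Ordinal).ToArray();

    public static void Main()
    {
        // Unpadded prefixes: "10_" sorts before "1_" and "2_".
        // Prints: 10_AddIndex.sql, 1_AddTable.sql, 2_AddColumn.sql
        Console.WriteLine(string.Join(", ",
            Sorted("2_AddColumn.sql", "10_AddIndex.sql", "1_AddTable.sql")));

        // Zero-padded prefixes sort in the intended order.
        // Prints: 001_AddTable.sql, 002_AddColumn.sql, 010_AddIndex.sql
        Console.WriteLine(string.Join(", ",
            Sorted("002_AddColumn.sql", "010_AddIndex.sql", "001_AddTable.sql")));
    }
}
```

Without the padding, the tenth script would run before the first two, which is rarely what you want when later scripts depend on earlier ones.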

By default, .NET will not include those script files when the console application is built, and we want to include them as embedded resources. Thankfully, we can easily add a reference to those files by including this code in the .csproj file:

    <ItemGroup>
      <EmbeddedResource Include="BeforeDeploymentScripts\*.sql" />
      <EmbeddedResource Include="DeploymentScripts\*.sql" />
      <EmbeddedResource Include="PostDeploymentScripts\*.sql" />
    </ItemGroup>

The entire file looks like this:

    <Project Sdk="Microsoft.NET.Sdk">

      <PropertyGroup>
        <OutputType>Exe</OutputType>
        <TargetFramework>net5.0</TargetFramework>
      </PropertyGroup>

      <ItemGroup>
        <EmbeddedResource Include="BeforeDeploymentScripts\*.sql" />
        <EmbeddedResource Include="DeploymentScripts\*.sql" />
        <EmbeddedResource Include="PostDeploymentScripts\*.sql" />
      </ItemGroup>

      <ItemGroup>
        <PackageReference Include="dbup-sqlserver" Version="4.5.0" />
      </ItemGroup>

    </Project>

The final step to get this application going is to add the necessary code to Program.cs to call DbUp. The application accepts parameters from the command line, and GitHub Actions will be configured to send in the following parameters:

ConnectionString: For this demo, we are sending this as a parameter instead of storing it in the config file.

PreviewReportPath: The full path to save the preview report. The full path parameter is optional. When it is sent in we generate a preview HTML report for GitHub Actions to upload to Azure Blob storage. When it is not sent in, the code will do the actual deployment.
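Both parameters arrive in `--Name=value` form. The following is a minimal sketch of a helper that reads such a value and reports a clear error when a required argument is missing, rather than failing with a null reference. The `ArgSketch` class and its `GetValue` method are hypothetical; they are not part of DbUp or of the program built in this post, which parses its arguments inline.

```csharp
using System;
using System.Linq;

public static class ArgSketch
{
    // Hypothetical helper: finds "--name=value" (name is case-insensitive)
    // and returns the value with surrounding double quotes trimmed.
    // Returns null when the argument is absent.
    public static string GetValue(string[] args, string name)
    {
        var prefix = "--" + name + "=";
        var match = args.FirstOrDefault(
            a => a.StartsWith(prefix, StringComparison.OrdinalIgnoreCase));

        return match?.Substring(prefix.Length).Trim('"');
    }

    public static int Main(string[] args)
    {
        var connectionString = GetValue(args, "ConnectionString");
        if (string.IsNullOrWhiteSpace(connectionString))
        {
            Console.Error.WriteLine("Missing required argument --ConnectionString=<value>");
            return 1;
        }

        // Optional argument: null means "run the real deployment".
        var previewReportPath = GetValue(args, "PreviewReportPath");
        Console.WriteLine(previewReportPath == null
            ? "Mode: deploy"
            : $"Mode: preview report in {previewReportPath}");
        return 0;
    }
}
```

Returning a non-zero exit code for a missing argument means a misconfigured workflow step fails visibly instead of throwing deep inside the string handling.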

Let’s start by pulling the connection string from the command-line argument:

    static void Main(string[] args)
    {
        var connectionString = args.FirstOrDefault(x => x.StartsWith("--ConnectionString", StringComparison.OrdinalIgnoreCase));
        connectionString = connectionString.Substring(connectionString.IndexOf("=") + 1).Replace(@"""", string.Empty);

DbUp uses a fluent API. We need to tell it about our folders, the type of script each folder contains, and the order we want to run scripts in. If you use the Scripts Embedded In Assembly option with a StartsWith search, you need to supply the full namespace in your search.

    var upgradeEngineBuilder = DeployChanges.To
        .SqlDatabase(connectionString, null)
        .WithScriptsEmbeddedInAssembly(Assembly.GetExecutingAssembly(), x => x.StartsWith("DbUpLeaseExtract.BeforeDeploymentScripts."), new SqlScriptOptions { ScriptType = ScriptType.RunAlways, RunGroupOrder = 0 })
        .WithScriptsEmbeddedInAssembly(Assembly.GetExecutingAssembly(), x => x.StartsWith("DbUpLeaseExtract.DeploymentScripts"), new SqlScriptOptions { ScriptType = ScriptType.RunOnce, RunGroupOrder = 1 })
        .WithScriptsEmbeddedInAssembly(Assembly.GetExecutingAssembly(), x => x.StartsWith("DbUpLeaseExtract.PostDeploymentScripts."), new SqlScriptOptions { ScriptType = ScriptType.RunAlways, RunGroupOrder = 2 })
        .WithTransactionPerScript()
        .LogToConsole();

    var upgrader = upgradeEngineBuilder.Build();

    Console.WriteLine("Is upgrade required: " + upgrader.IsUpgradeRequired());

The upgrader has been built, and it is ready to run. This section is where we inject the check for the upgrade report parameter. If that parameter is set, do not run the upgrade. Instead, generate a report for GitHub Actions to upload to Azure Blob storage:

    if (args.Any(a => a.StartsWith("--PreviewReportPath", StringComparison.InvariantCultureIgnoreCase)))
    {
        // Generate a preview file so GitHub Actions can generate an artifact for approvals
        var report = args.FirstOrDefault(x => x.StartsWith("--PreviewReportPath", StringComparison.OrdinalIgnoreCase));
        report = report.Substring(report.IndexOf("=") + 1).Replace(@"""", string.Empty);

        var fullReportPath = Path.Combine(report, "UpgradeReport.html");
        Console.WriteLine($"Generating the report at {fullReportPath}");

        upgrader.GenerateUpgradeHtmlReport(fullReportPath);
    }
    else
    {
        var result = upgrader.PerformUpgrade();

        // Display the result
        if (result.Successful)
        {
            Console.ForegroundColor = ConsoleColor.Green;
            Console.WriteLine("Success!");
        }
        else
        {
            Console.ForegroundColor = ConsoleColor.Red;
            Console.WriteLine(result.Error);
            Console.WriteLine("Failed!");

            // Non-zero exit code so the calling GitHub Actions step fails
            Environment.ExitCode = 1;
        }
    }

When we put it all together, it looks like this:

    using System;
    using System.IO;
    using System.Linq;
    using System.Reflection;
    using DbUp;
    using DbUp.Engine;
    using DbUp.Helpers;
    using DbUp.Support;

    namespace DbUpLeaseExtract
    {
        class Program
        {
            static void Main(string[] args)
            {
                var connectionString = args.FirstOrDefault(x => x.StartsWith("--ConnectionString", StringComparison.OrdinalIgnoreCase));
                connectionString = connectionString.Substring(connectionString.IndexOf("=") + 1).Replace(@"""", string.Empty);

                var upgradeEngineBuilder = DeployChanges.To
                    .SqlDatabase(connectionString, null)
                    .WithScriptsEmbeddedInAssembly(Assembly.GetExecutingAssembly(), x => x.StartsWith("DbUpLeaseExtract.BeforeDeploymentScripts."), new SqlScriptOptions { ScriptType = ScriptType.RunAlways, RunGroupOrder = 0 })
                    .WithScriptsEmbeddedInAssembly(Assembly.GetExecutingAssembly(), x => x.StartsWith("DbUpLeaseExtract.DeploymentScripts"), new SqlScriptOptions { ScriptType = ScriptType.RunOnce, RunGroupOrder = 1 })
                    .WithScriptsEmbeddedInAssembly(Assembly.GetExecutingAssembly(), x => x.StartsWith("DbUpLeaseExtract.PostDeploymentScripts."), new SqlScriptOptions { ScriptType = ScriptType.RunAlways, RunGroupOrder = 2 })
                    .WithTransactionPerScript()
                    .LogToConsole();

                var upgrader = upgradeEngineBuilder.Build();

                Console.WriteLine("Is upgrade required: " + upgrader.IsUpgradeRequired());

                if (args.Any(a => a.StartsWith("--PreviewReportPath", StringComparison.InvariantCultureIgnoreCase)))
                {
                    // Generate a preview file so GitHub Actions can generate an artifact for approvals
                    var report = args.FirstOrDefault(x => x.StartsWith("--PreviewReportPath", StringComparison.OrdinalIgnoreCase));
                    report = report.Substring(report.IndexOf("=") + 1).Replace(@"""", string.Empty);

                    var fullReportPath = Path.Combine(report, "UpgradeReport.html");
                    Console.WriteLine($"Generating the report at {fullReportPath}");

                    upgrader.GenerateUpgradeHtmlReport(fullReportPath);
                }
                else
                {
                    var result = upgrader.PerformUpgrade();

                    // Display the result
                    if (result.Successful)
                    {
                        Console.ForegroundColor = ConsoleColor.Green;
                        Console.WriteLine("Success!");
                    }
                    else
                    {
                        Console.ForegroundColor = ConsoleColor.Red;
                        Console.WriteLine(result.Error);
                        Console.WriteLine("Failed!");

                        // Non-zero exit code so the calling GitHub Actions step fails
                        Environment.ExitCode = 1;
                    }
                }
            }
        }
    }

We have a couple of different workflows. One runs on pull requests to generate the HTML report, and another runs on merge to execute the actual database scripts against the target Azure SQL instance.

First, this trigger ensures the workflow only runs on pull requests that change files within the db-deploy directory:

    name: Create DB Delta Report

    on:
      pull_request:
        branches:
          - develop
        paths:
          - 'db-deploy/**'

Next we check out the code and lint it using super-linter:

    jobs:
      db-delta-report:
        name: db-delta-report
        runs-on: ubuntu-latest
        steps:
          - name: Checkout
            uses: actions/checkout@master

          - name: Lint Code Base
            uses: github/super-linter@master
            env:
              GITHUB_TOKEN: ${{ secrets.GH_TOKEN }}
              VALIDATE_ALL_CODEBASE: true
              VALIDATE_MD: true
              VALIDATE_CSHARP: true
              VALIDATE_SQL: true

Next we set up .NET Core and build the project:

          - name: Setup .NET Core
            uses: actions/setup-dotnet@main
            with:
              dotnet-version: '5.0.x'

          - name: Cache Packages
            uses: actions/cache@v2
            with:
              path: ~/.nuget/packages
              key: ${{ runner.os }}-nuget-${{ hashFiles('**/packages.lock.json') }}
              restore-keys: |
                ${{ runner.os }}-nuget

          - name: Restore dependencies
            working-directory: db-deploy/lease_extract
            run: dotnet restore

          - name: Build Console App
            working-directory: db-deploy/lease_extract
            run: dotnet publish --no-restore --output DbUpLeaseExtract

This next step calls a PowerShell script that runs the console app. We utilise encrypted secrets to pass in the database connection string:

          - name: Create DB Delta Report
            env:
              LEASE_EXTRACT_DB_CONNECTION_STRING_DEV: ${{ secrets.LEASE_EXTRACT_DB_CONNECTION_STRING_DEV }}
            run: ./db-deploy/scripts/db-delta-report-dev.ps1
            shell: pwsh

This is the PowerShell script it is calling:

    $packagePath = "db-deploy/lease_extract/DbUpLeaseExtract"
    $connectionString = $Env:LEASE_EXTRACT_DB_CONNECTION_STRING_DEV
    $reportPath = "db-deploy/lease_extract/DbUpLeaseExtract"
    $dllToRun = "$packagePath/DbUpLeaseExtract.dll"
    $generatedReport = "$reportPath/UpgradeReport.html"

    if ((test-path $reportPath) -eq $false){
        New-Item $reportPath -ItemType "directory"
    }

    dotnet $dllToRun --ConnectionString="$connectionString" --PreviewReportPath="$reportPath"

Currently the GitHub API has no way of attaching a file to a PR comment, so as a workaround I have decided to upload the HTML report to Azure Blob storage and link to it in the PR comment:

          - name: Upload DB Delta Report to Azure Blob
            uses: azure/powershell@v1
            with:
              inlineScript: |
                az storage blob upload --account-name storageaccountname --container-name '$web' --file "db-deploy/lease_extract/DbUpLeaseExtract/UpgradeReport.html" --name UpgradeReport.html
              azPSVersion: "latest"

          - name: Comment on PR
            uses: unsplash/comment-on-pr@master
            env:
              GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
            with:
              msg: "Please review the [DB Delta Report](https://storageaccountname.z33.web.core.windows.net/)"

Once this has been reviewed by our DBAs and the pull request is merged, a different workflow is triggered:

    name: DB Upgrade

    on:
      push:
        branches:
          - develop
        paths:
          - 'db-deploy/**'

Instead of generating the HTML report, it runs an upgrade on the database by calling a different script:

          - name: Deploy DB Upgrade
            env:
              LEASE_EXTRACT_DB_CONNECTION_STRING_DEV: ${{ secrets.LEASE_EXTRACT_DB_CONNECTION_STRING_DEV }}
            run: ./db-deploy/scripts/db-upgrade-dev.ps1
            shell: pwsh

The only thing different about this script is that the PreviewReportPath switch is missing:

    $packagePath = "db-deploy/lease_extract/DbUpLeaseExtract"
    $connectionString = $Env:LEASE_EXTRACT_DB_CONNECTION_STRING_DEV
    $reportPath = "db-deploy/lease_extract/DbUpLeaseExtract"
    $dllToRun = "$packagePath/DbUpLeaseExtract.dll"
    $generatedReport = "$reportPath/UpgradeReport.html"

    if ((test-path $reportPath) -eq $false){
        New-Item $reportPath -ItemType "directory"
    }

    dotnet $dllToRun --ConnectionString="$connectionString"

As a final step, we track all of our deployments in Code Climate Velocity. If you aren't using it yet, I highly recommend you check it out:

          - name: Send Deployment to Code Climate
            run: curl -d "token=${{ secrets.VELOCITY_DEPLOYMENT_TOKEN }}" -d "revision=${GITHUB_SHA}" -d "repository_url=${GITHUB_SERVER_URL}/${GITHUB_REPOSITORY}" -d "branch=develop" -d "environment=db-dev" -d "version=${GITHUB_RUN_NUMBER}" https://velocity.codeclimate.com/deploys

This same convention would be run for dev, QA, prod and so on, with branches aligned to each environment.

DbUp has really helped FirstPort create a robust deployment pipeline for databases. Now DBAs (and others) can review changes via GitHub Actions before they are deployed. Having the ability to review the changes should help build trust in the process and help speed up adoption.

In this post I demonstrated one technique for achieving automated database deployments using GitHub Actions. There are plenty of other solutions, and if you are using Entity Framework or similar, those tools have migration support built in, but the core approach will be the same.

I hope I could help you learn something new today, and share how we do things here at FirstPort.

If you have any questions, get in touch on Twitter.

✨Azure Readiness Checklist✨

Are you ready to go to prod on Azure? Use this checklist to find out: azurechecklist.com

Call for #contributors! - Help make this THE go to list for #Azure

#AzureDevOps #OpenSource - PLS RT ❤️

09:24 AM - 01 Nov 2019

![Andrew [MVP] profile image](https://res.cloudinary.com/practicaldev/image/fetch/s--GXR7wJds--/c_limit%2Cf_auto%2Cfl_progressive%2Cq_auto%2Cw_880/https://pbs.twimg.com/profile_images/1266114810590179328/at1PK_qL_normal.jpg)