31

Big O Notation

Big O Notation is a way to measure an algorithm’s efficiency. It measures the time it takes to run your function as the input grows. Or in other words, how well does the function scale.

For example, you have 10 numbers (2, 36, 5, 7, 3, 9, 0, 1, 10, 4) and you want to sort these number in sequence. How will you decide which algorithm to use? What if there are 1000 numbers, then what will you do?, Big O is used to measure and find the best possible solution for these type of problems.

source: https://www.bigocheatsheet.com/

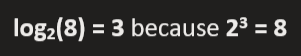

Before understanding what O(log n) is lets understand what log or logarithms are.

A logarithm is the power to which a number must be raised in order to get some other number. In computer science by default base of log is 2.

For example lets take a number 8. The base 2 logarithm of 8 is 3, because 2 raised to the power of 3 is 8:

In short what we ask is how many 2s need to be multiplied together to get a number.

Coming back to O(log n), it basically means, time goes up linearly while the n goes up exponentially. So, if it takes 1 second to compute 10 elements, it will take 2 seconds to compute 20 elements, 3 seconds to compute 40 elements, and so on. An example of an O(log n) algorithm would be a binary search algorithm:

function binarySearch(arr, val) {

let upper = 0;

let lower = arr.length - 1;

while (upper <= lower) {

let middle = Math.floor((upper + lower) / 2);

if (arr[middle] === val) {

// found the val

return middle;

} else if (arr[middle] < val) {

// continue searching to the right

upper = middle + 1;

} else {

// search searching to the left

lower = middle - 1;

}

}

// val wasn't found

return -1;

}

// Array should be sorted for binary search

let arr = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10];No matter how many items there are, whether one ore one million, the amount of time to complete will remain same. For example:

const fishes = ["nemo", "marlin", "bruce", "crush", "dory"];

function firstFishInLine(arr) {

console.log(arr[0]);

}No matter how big the array is, we will always be grabbing first item in the array (We are only doing one thing).

In O(n) all the loops are an example of linear growth because there is one-to-one relationship between the data size and time to completion. So an array with 100 times more items will take exactly 100 times longer.

const fish = ["nemo", "marlin", "bruce", "crush", "dory"];

function findingDorry(arr) {

for (let i = 0; i <= arr.length; i++) {

if (arr[i] === "dory") {

console.log("🎉 Dory Found");

}

}

}

findingDorry(fish);More the fishes more time it will take because, It will check each element in an array.

O(n log n) implies that log n operations will occur n times. For example, searching for the element in sorted list of length n is O(log n). Searching for the element in n different sorted lists, each of length n is O(n log ).

function nLogn(n) {

let y = n;

while (n > 1) {

n = Math.floor(n / 2); // O(log n)

for (let i = 1; i <= y; i++) { // O(n)

console.log(i);

}

}

}O(n^2) is extremely inefficient. It can also be written as O(n^x). Where x is number of nested loops. Putting a loop inside a loop is great way of turning an array of 1000 items into million (1000 X 1000) operation search that'll freeze your browser. Any form of nested loop is an example of O(n^x). following is the example of O(n^2):

const fishes = ["nemo", "marlin", "bruce", "crush", "dory"];

function fishPairs(arr) {

for (let i = 0; i < arr.length; i++) {

for (let j = 0; j < arr.length; j++) {

console.log(arr[i], arr[j]);

}

}

}Difference between O(n^2) and O(2n)

- We Add (+) for steps in order (i.e.: n+n)

- And Multiply (*) for nested steps (i.e.: n*n)

O(2^n) denotes an algorithm whose growth doubles, with each addition to the input data set.

The growth curve of an O(2^n) function is exponential - starting off very shallow, then rising meteorically. A great example of O(2^n) will be Fibonacci series:

function fibonacci(num) {

if (num <= 1) {

return num;

}

return fibonacci(num - 2) + fibonacci(num - 1);

}O(n!) is one of the worst possibilities. To illustrate how fast factorial solutions will blow up in size, a standard deck of cards has 52 cards, with 52! possible orderings of cards after shuffling. This number is larger than the number of atoms on Earth.

One classic example is the traveling salesman problem through brute-force search.

If there are N cities, the brute force method will try each and every permutation of these N cities to find which one is cheapest. Now the number of permutations with N cities is N! making it's complexity factorial (O(N!)).

For simplicity in our examples we have taken only one type of array or input that's why it is n*n = n^2 or n+n =2n but different inputs should have different variables.(example, a*b or a+b)

You can calculate Big O by following these 5 steps:

- Break your algorithm/function into individual operations.

- Calculate the Big O of each operation.

- Add up the Big O of each operation together.

- Remove the constants.

- Certain terms dominate others. O(1) < O(log n) < O(n) < O(n log n) < O(n^2) < O(2^n) < O(n!). i.e. ignore lower order terms. Find the highest order term — this will be what we consider the Big O of our algorithm/function.

Let's try to calculate Big O of following example:

function foo(arr) {

let a = 30; // O(1) - only running once

a = 1 + 3; // O(1)

for (let i = 0; i < arr.length; i++) { // O(n) - because it will run n:times

someAnotherFunction(); // O(n) - Here we are calling some function. It will be called every time the loop is run.

let bool = true; // O(n) - again because inside for loop

a++; // O(n)

}

return a; // O(1)

}So, Big O will be:

1 + 1 + n + n + n + n + 1 = 3 + 4n => O(3+4n)

but lets try to simplify it more, look at the '5th' rule above. Certain terms dominate others, (O(1) is less significant then O(n)).

So, we will drop count 3 & now it will be O(4n).

In rule '4th' we have defined that we should remove constants, so are final answer will be : O(n)

31