
Event Modeling By Example

Good design is fundamental to me when it comes to building applications. Yes, there is a time and a place for a quick functional program that does a simple job, à la a scheduled batch file. But in almost all cases, committing even a small amount of time to thoughtful design has huge ramifications for the long-term success of a project.

Design for where you want your application to be, not where it is now

One element of this is Domain-Driven Design (DDD). For those who aren't aware, Domain-Driven Design is the name of a book written by Eric Evans in 2003 detailing a methodology for designing software. At its core, it maps business domain concepts into software artifacts.

Whilst I can heartily recommend the big blue book, I did find it difficult to turn it into practical steps that I could use when working with clients in the real world. That is where event modeling comes in.

Event Modeling is a term coined by Adam Dymitruk. It builds upon a lot of the concepts set out in DDD. The main focus is on the events that happen in a business, as all software is, at its core, a series of events happening one after another.

If the software focuses on things that the business can understand, the system can be easily understood by anybody looking at it. That includes everyone from C-suite execs through the development team and even to customer service representatives with no background in IT.

There are some fantastic resources on Adam's website, https://eventmodeling.org, but what I wanted to do today was walk through my process for implementing event modeling in a 'real' application.

Before I dive into the step-by-step, I want to identify a couple of tools and resources I use for this process.

The first is the article on Adam's website, 'What is event modeling'. It adds a lot of context to the steps I'm going to follow in this article. I recommend reading that article before continuing with this one.

The second is Miro. Miro is a virtual whiteboard that allows multiple people to collaborate on the same board. Given more and more of the world is moving to a completely remote way of working, it's an invaluable tool when it is simply impossible to all stand at a physical whiteboard.

Even if you do use a physical whiteboard initially, it's a great idea to move that to a digital tool for future developments. Online, backed up, and accessible anywhere: what's not to love?

So now, on to the steps.

The first step of the process is to brainstorm ALL of the events that happen within the business domain being modeled. It's important to have stakeholders from all parts of the business in this session, as each may have a valuable piece of insight into the workflows.

What you are looking for here is just a brain dump of all the things happening in the business. There is no need to organize or categorize at this stage, just get it all out there. Remember, an event is always in the past tense. SubmitOrder is not an event but OrderSubmitted is.
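To make the naming rule concrete, here is a minimal Python sketch of an event written as an immutable record. The fields (`order_id`, `occurred_at`) are my own assumptions for illustration; the brainstorming step itself only cares about the name.

```python
from dataclasses import dataclass, FrozenInstanceError
from datetime import datetime, timezone


@dataclass(frozen=True)
class OrderSubmitted:
    """A fact that has already happened, so it is named in the past tense."""

    order_id: str          # assumed field, for illustration only
    occurred_at: datetime  # assumed field, for illustration only


event = OrderSubmitted("order-1", datetime(2021, 1, 1, 12, 0, tzinfo=timezone.utc))

# Events record history, so they should never change once created.
try:
    event.order_id = "order-2"
    was_mutated = True
except FrozenInstanceError:
    was_mutated = False
```

Freezing the record is a design choice rather than a requirement: it simply mirrors the idea that something in the past cannot be edited.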

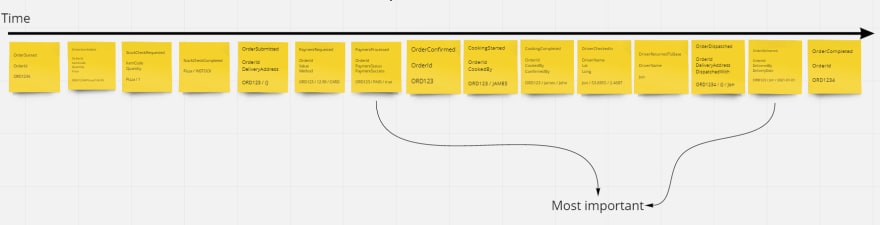

The second step is the first layer of organisation. All of the events should be added to a timeline that shows the journey through the system.

For anyone who has ever ordered food online, this series of events should be familiar.

The other thing you'll notice on this diagram is that I've identified a couple of important events. These events are the ones that are fundamental to the business. In this example, taking payments and delivering orders are the two things needed for the restaurant to succeed.

The third phase is to begin thinking about user interactions and the different people taking part in the process. Wireframe mock-ups are a great tool. These mock-ups don't need to be concrete, though; they are just there to give a rough idea of the interactions with the system.

I find it helpful to split these down into swimlanes for the different people/systems who affect the system. It would also include any external systems (payment processors, CRM systems) that change state, albeit without direct human intervention.

In this example, I'm more concerned about the backend application than the look of the frontend. For that reason, I've just included some icons to indicate there will be interactions from either a UI or an external system.

Notice how I've included the cog icons for the stock checker and payment processor. It shows that there is a process that happens to cause a change in the system state. The interaction may not be driven by a human, but it is an interaction all the same.

Phase four is where things start to get interesting. It is where I begin to link the UI/UX to the events via commands.

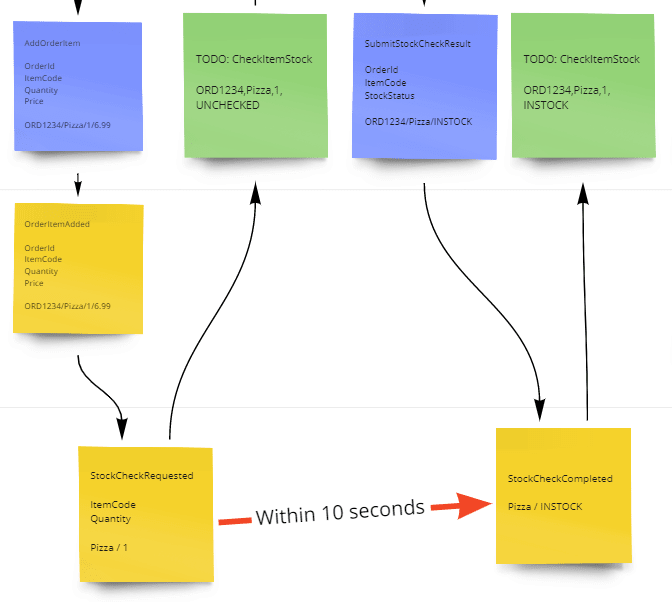

Taking the first workflow as a specific example:

Taking a look at the whiteboard now, there is a clear flow from left to right. This interaction submits this command then this event happens.

It can be beneficial at this point to talk about the command contents. Thinking about what makes up a SubmitStockCheckResults command can help to identify any missing data from earlier in the process.
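As a rough illustration of that conversation, a command and the event it leads to might be sketched like this in Python. Only the name `SubmitStockCheckResults` comes from the whiteboard; the fields, the `StockCheckCompleted` event name, and the `handle` function are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SubmitStockCheckResults:
    """A command: a request to change state, named in the imperative."""

    order_id: str   # assumed field
    in_stock: bool  # assumed field


@dataclass(frozen=True)
class StockCheckCompleted:
    """Hypothetical name for the event this command produces."""

    order_id: str
    in_stock: bool


def handle(command: SubmitStockCheckResults) -> list[StockCheckCompleted]:
    """Turn an accepted command into the event(s) that record the outcome."""
    if not command.order_id:
        # Spelling out the contents is often what exposes missing data.
        raise ValueError("a stock check result must reference an order")
    return [StockCheckCompleted(command.order_id, command.in_stock)]


events = handle(SubmitStockCheckResults(order_id="order-1", in_stock=True))
```

Writing down even this much forces the question of where `order_id` comes from, which is exactly the kind of gap this phase is meant to surface.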

Phase five is where I start to look at the information users need to make decisions.

I do this using bespoke read models that project the event information into a useful format for users. You can see these below on the green post-it notes.

For the stock checker to reliably check the stock of orders, it needs a view of all the orders that are currently waiting to have their stock checked. For the kitchen to cook the orders, they need to see a list of all the orders that have been paid and are awaiting production.

Again, it's important to not get bogged down in details here. It doesn't matter if you're using a queue, an event stream, or a relational database.

What matters is that the data needs to be exposed in a friendly format that can be used to make informed decisions about the system.

Adam refers to these read models as to-do lists, and I quite like that idea. A to-do list is a concept that almost everybody can understand. If I were to start talking about queues or a message bus, I would likely alienate the non-technical folk.

The system is saying, "here are some data items that need to have an action performed on them."
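For the developers in the room, a to-do list can be thought of as a function over the events that have happened so far. The sketch below is one possible shape, not a prescribed implementation: `StockCheckCompleted` and the function name are assumptions, and the events are held in a plain in-memory list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OrderSubmitted:
    order_id: str


@dataclass(frozen=True)
class StockCheckCompleted:  # hypothetical event name
    order_id: str


def orders_awaiting_stock_check(events: list) -> list[str]:
    """Project the event history into the stock checker's to-do list."""
    todo: list[str] = []
    for event in events:
        if isinstance(event, OrderSubmitted):
            todo.append(event.order_id)  # new work arrives
        elif isinstance(event, StockCheckCompleted) and event.order_id in todo:
            todo.remove(event.order_id)  # work is done
    return todo


history = [
    OrderSubmitted("order-1"),
    OrderSubmitted("order-2"),
    StockCheckCompleted("order-1"),
]
todo = orders_awaiting_stock_check(history)
```

Here `todo` ends up holding only `order-2`, the one order still waiting for its stock check.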

I find it's at this stage that any holes start to become apparent. For example, in the above screenshot, a SubmitPaymentResult command results in a PaymentProcessed event. This event has a PaymentSuccess field that looks like it should be conditional in the workflow.

When a PaymentProcessed event happens the order is placed on the OrdersAwaitingCook to-do list. But what if the payment fails?

And this is where the value of event modeling becomes apparent. You have a room full of people, both technical and not, who can talk the same language and reason about the behavior of the system.

Architect: "So, what happens when the payment is processed but it fails for some reason?"

Mark in Order Processing: "Oh, if that happens I would just notify the customer to inform them that their payment has failed."

Architect: "Ok, so how would that interaction happen?"

Barbara in Customer Service: "Well, if the customer was standing in front of me in the restaurant I could ask them to change their payment method. If they are ordering online, then I need them to know about the failure instantly so they can change their order, or at least know that they aren't going to be getting a pizza delivered."

In a matter of moments, I have learned something about our domain and can amend the flow of events on the fly. In the first iteration of the timeline, I processed payments and then added all orders to the OrdersAwaitingCook to-do list. Now, I have a small branch at this point in the case of a payment failing.
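Expressed as code, the amended flow might look something like the following Python sketch. `PaymentProcessed`, its success flag, and OrdersAwaitingCook come from the model; the `customers_to_notify` list and the `apply` function are hypothetical names I've made up to represent the new branch.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class PaymentProcessed:
    order_id: str
    payment_success: bool  # the PaymentSuccess field from the model


@dataclass
class ToDoLists:
    orders_awaiting_cook: list[str] = field(default_factory=list)
    # Hypothetical list, added after the conversation about failed payments.
    customers_to_notify: list[str] = field(default_factory=list)


def apply(lists: ToDoLists, event: PaymentProcessed) -> ToDoLists:
    """Route an order based on the outcome of its payment."""
    if event.payment_success:
        lists.orders_awaiting_cook.append(event.order_id)
    else:
        lists.customers_to_notify.append(event.order_id)
    return lists


lists = ToDoLists()
for event in [PaymentProcessed("order-1", True), PaymentProcessed("order-2", False)]:
    apply(lists, event)
```

Only the paid order reaches the kitchen's list; the failed one lands on a list that customer service can act on.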

Almost there now.

The penultimate stage is to group the events into their service layers. Hopefully by this stage, you should start to see some pretty logical boundaries between the different events in your system.

An important note here: these service layers should be based on the business domain and NOT on a perceived microservices architecture. Remember, you are trying to keep everybody interested and on the same page.

It can be good at this stage to think about the make-up of teams. A team of people, technical and non-technical, can now begin work on an individual service layer. These service layers can then develop independently, but the shared vision is also well understood.

Yep, it sounds a lot like microservices. Resist the temptation, don't say the word!

The final stage is to build specific scenarios out of the workflows. A scenario is a user story that is built from this set of events, commands, and views.

Now what I have is a list of very specific functional requirements that when pieced together form the basis of the entire system. Each of these individual workflows can be assigned to a specific team and that is the workflow they own.
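One way a development team might capture such a scenario is in a given/when/then shape: given these events have happened, when this command arrives, then these events result. The Python sketch below is an assumption about how that could look; the `decide` function and the rule that a payment must reference a submitted order are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OrderSubmitted:  # event
    order_id: str


@dataclass(frozen=True)
class SubmitPaymentResult:  # command
    order_id: str
    payment_success: bool


@dataclass(frozen=True)
class PaymentProcessed:  # event
    order_id: str
    payment_success: bool


def decide(history: list, command: SubmitPaymentResult) -> list:
    """Given what has happened, decide which new events the command produces."""
    known_orders = {e.order_id for e in history if isinstance(e, OrderSubmitted)}
    if command.order_id not in known_orders:
        # Assumed business rule, for illustration only.
        raise ValueError("cannot record a payment for an unknown order")
    return [PaymentProcessed(command.order_id, command.payment_success)]


# Scenario: a successful payment is recorded for a submitted order.
given = [OrderSubmitted("order-1")]
when = SubmitPaymentResult("order-1", payment_success=True)
then = decide(given, when)
```

Because the scenario is phrased entirely in events and commands from the whiteboard, a non-technical stakeholder can still read it and say whether it is right.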

From here, a more developer-specific specification may be created in the form of a more traditional user story. What is important, however, is that this event model is treated as the source of truth that the ENTIRE ORGANISATION can understand.

The development teams may have epics in Jira or cards on a Trello board. It's largely irrelevant. What matters is the common language that the entire organisation shares and can use to reason about different scenarios.

There are a couple of additional bits of information that I just wanted to tack on at the end to answer some questions I know I had when I first encountered this model.

If there is one thing I would take away from this, it is that the implementation is irrelevant. Whether that's database providers, to-do list implementations, or programming languages, don't worry about any of it.

The end goal should be for somebody non-technical and unfamiliar with the business to be able to understand what happens within the organisation very quickly.

Imagine starting work at a new company. On your first day, you understand the entire system at a high level and begin to ask questions of both domain experts and developers in a language everybody understands. It wouldn't matter if you were working in a technical or non-technical role.

Whilst the implementation is irrelevant, it can be useful to note on the diagram any time-sensitive operations.

You can see I've marked very explicitly that there is a business-specific rule that a stock check must complete in 10 seconds. This is to provide feedback to a user in real-time when they are using the application.

Remember, the detail of how 'real-time' information is delivered isn't important (SignalR, websockets, emails, etc.). What matters is that there is some form of service level agreement that the system needs to adhere to.
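If it helps to see the rule written down, a timing constraint like this can be checked purely from event timestamps, without saying anything about how the feedback is delivered. This Python sketch assumes every event carries a timestamp; the `TimedEvent` shape, the `StockCheckCompleted` name, and the `within_sla` helper are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# The business rule from the diagram: a stock check must complete in 10 seconds.
STOCK_CHECK_SLA = timedelta(seconds=10)


@dataclass(frozen=True)
class TimedEvent:
    """Hypothetical minimal event shape: a name plus when it happened."""

    name: str
    occurred_at: datetime


def within_sla(requested: TimedEvent, completed: TimedEvent) -> bool:
    """True if the stock check finished inside the agreed time window."""
    return completed.occurred_at - requested.occurred_at <= STOCK_CHECK_SLA


start = datetime(2021, 1, 1, 12, 0, 0, tzinfo=timezone.utc)
submitted = TimedEvent("OrderSubmitted", start)
fast = TimedEvent("StockCheckCompleted", start + timedelta(seconds=4))
slow = TimedEvent("StockCheckCompleted", start + timedelta(seconds=15))
```

With these values, `within_sla(submitted, fast)` holds and `within_sla(submitted, slow)` does not, which is all the diagram annotation is really promising.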

The wireframes on all of my screenshots are icons taken from Miro's built-in icon sets. My intention with this document is to show the backend architecture of a system using event modeling. I didn't want to get too bogged down in front-end appearance. At least at this stage.

In a normal working session, these wireframes would be drawn up to look like how you'd actually want the UI to look. If the UI was going to have three inputs, a dropdown, and a button go ahead and create a wireframe in Miro that looks like that.

I hope you've found this step-by-step useful and that it has given you a good introduction to the concept of event modeling.

I know it's something I'll be using in future architecture discussions as another tool to bridge the gap between business domain and technical details.

A huge thanks to Adam Dymitruk for sharing so much information about this process. There is an abundance of YouTube videos out there containing real-world scenarios that Adam works through with businesses.
