35

Logging & Tracing in Python, FastApi, OpenCensus and Azure

Logging. Even it's quite important to have good logging (and tracing) in an application, sometimes it's forgotten or underestimated. And even we can go well for some time just with

print(), establishing good logging can significantly improve DX and production debugging.We will go through the basics of logging in Python and its' configurations and then how to use OpenCensus to collect logs & traces and export them to Azure Application Insights.

This guide will try to cover the topic from zero to hero, therefore it will consist of several parts. This 1st part will be about Python, logging, and at the end, we'll create a simple FastApi server with a logging setup.

Python - Python language and logging module

FastApi - Modern python web framework for building APIs. It's based on Pydantic and type hints to validate, serialize, and deserialize data, and automatically auto-generate OpenAPI documents

OpenCensus - OpenCensus is a set of libraries for various languages that allow you to collect application metrics and distributed traces, then transfer the data to a backend of your choice in real-time

Azure AppInsights - is an Azure monitoring and analytics tool for your resources. It is aggregated by Azure Monitor or Azure LogAnalytics so this guide applies more/less for these tools too

Although we'll show examples mostly on FastApi and later on Azure, most of the stuff we'll learn we'll be easily applicable on any Python or OpenCensus powered applications.

Python comes by default with a logging module named

logging. The standard way how to use it is to request a named logger and use that to emit messages:import logging

log = logging.getLogger("my-api")

log.info("Hello 42")It's common to create a logger with

__name__ in each file to have it easily distinguishable or have one specific based on the name.It should be mentioned that getLogger("my-api") returns logger named my-api if created before otherwise it crates one and returns it. It was a little bit unexpected for me to have more experience in JS/React world and its' behavior will be important later in this guide.

There are several logging levels and almost all of them (not

NOTSET) have their appropriate log function like log.error("omg"):Setting the logging level to a certain value tells the library to handle all messages with the same level and up. So WARNING level will emit all WARNING, ERROR & CRITICAL events.

For now, it doesn't do much except ordinary printing. We need to configure it to make it really useful.

We can configure it:

fileConfig()

dictConfig()

Config is easier to read but a little bit less flexible. The combination is possible but I encountered some issues when I integrated "too many" features.

In this guide I will try to show dict & code variants, but later the final solution will be in code for reasons mentioned before.

Configuring logging or actually, logger(s) means basically adding

Formatter and Handler to them.Prepared basic configuration with default

Formatter and StreamHandlerimport logging

FORMAT = "%(levelname)s:%(message)s"

logging.basicConfig(format=FORMAT, level=logging.DEBUG)

logging.debug('This message should appear on the console')LOG_LEVEL: str = "DEBUG"

FORMAT: str = "%(asctime)s - %(name)s - %(levelname)s - %(message)s"

logging_config = {

"version": 1, # mandatory field

# if you want to overwrite existing loggers' configs

# "disable_existing_loggers": False,

"formatters": {

"basic": {

"format": FORMAT,

}

},

"handlers": {

"console": {

"formatter": "basic",

"class": "logging.StreamHandler",

"stream": "ext://sys.stderr",

"level": LOG_LEVEL,

}

},

"loggers": {

"simple_example": {

"handlers": ["console"],

"level": LOG_LEVEL,

# "propagate": False

}

},

}

import logging

# create logger

logger = logging.getLogger('simple_example')

# 'application' code

logger.debug('debug message')

logger.info('info message')

logger.warning('warn message')

logger.error('error message')

logger.critical('critical message')What happens here is pretty simple. We are configuring a logger named

simple_example with handler console that streams to standard output and uses a formatter named basic.getLogger(name)

So if you start to ask yourself. Yes. Either

formatters or also handlers can be omitted. Then standard output and format are used.import logging

# create logger

logger = logging.getLogger('simple_example')

logger.setLevel(logging.DEBUG)

# create console handler and set level to debug

ch = logging.StreamHandler()

ch.setLevel(logging.DEBUG)

# create formatter

formatter = logging.Formatter('%(asctime)s - %(name)s - %(levelname)s - %(message)s')

# add formatter to ch

ch.setFormatter(formatter)

# add ch to logger

logger.addHandler(ch)

# 'application' code

logger.debug('debug message')

logger.info('info message')

logger.warning('warn message')

logger.error('error message')

logger.critical('critical message')

Now we know everything necessary to implement logging into FastApi server. Let's start with the basic folder structure and files.

├── app

│ ├── core

│ │ └── log_config.py

│ ├── __init__.py

│ └── main.py

└── requirements.txtI recon AVOIDING renaming log_config.py to logging.py as it can create some conflicts with the logging module

requirements.txt

# requirements.txt

fastapi

uvicornlog_config.py

# log_config.py

# put one of 2 versions of config from above here

# you can choose whichever you like more

<code from abouve>main.py

# main.py

import logging

from logging.config import dictConfig

from fastapi import FastAPI

from app.core.log_config import app_dict_config, init_loggers

# This should run as soon as possible to catch all logs

# Run only one of these

init_loggers()

# dictConfig(app_dict_config)

# FastApi app

app = FastAPI()

# init our logger

log = logging.getLogger("simple_example")

# first endpoint

@app.get("/log_now")

def log_now():

log.debug("/api/log_now starts")

log.info("I'm logging")

log.warning("some warnings")

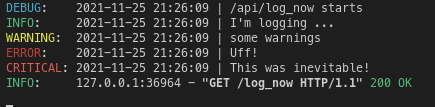

return {"result": "OK"}What happens here? We initiate loggers, start

app and create logger log named simple_example. Then we create endpoint /log_now that surprisingly... just logs.# install requirements

pip install -r requirements.txt

# start uvicorn server on 127.0.0.1:8000

uvicorn app.main:app --reloadThen in another terminal run

curl localhost:8000/log_nowAnd you should see something like this

Almost perfect. We can now update the formatting to polish it. Any default LogRecords attributes can be used. But to have the same colorful formatting as uvicorn we need to use also its'

formatter and its' formatting attribute %(levelprefix)sUpdate your config:

# both variants:

FORMAT: str = "%(levelprefix)s %(asctime)s | %(message)s"

# dict variant:

app_dict_config = {

# ...

"formatters": {

"basic": {

"()": "uvicorn.logging.DefaultFormatter",

"format": FORMAT,

}

# ...

}

}

# code variant:

def init_loggers(logger_name: str = "simple_example"):

#...

# create formatter

formatter = uvicorn.logging.DefaultFormatter(FORMAT)

# ...For last but not least we can change the date format with:

# dict

"datefmt": "%Y-%m-%d %H:%M:%S",

#code

formatter = uvicorn.logging.DefaultFormatter(FORMAT, datefmt="%Y-%m-%d %H:%M:%S")Voilà

What configuration or libs do you use for logging in Python? Or you miss anything important. Let me know in comments. Also this is my first "blog" so any feedback is appretiated :)

In the next episodes, we'll take a better look at how to get our logs into Azure AppInsights, trace requests, visualize them, and more...

35