25

Python Text Parsing Project: Furigana Inserter for Anki

Furigana is a Japanese reading aid for Kanji, which originally came from Chinese.

There are many implementations like this for inserting Furigana into Japanese text on the web through ruby formatting, but in this post we will cover the Anki format.

Anki's Furigana format is more readable and shorter, making editing easier as well as saving disk space. It's used on Anki but it's quite straightforward to convert it to the ruby format for the web.

Here are the examples for 辞めたい and 辿り着く:

Ruby format: <ruby><rb>辞</rb><rt>や</rt>めたい</ruby>

Anki format: 辞[や]めたい

Ruby format: <ruby><rb>辿</rb><rt>たど</rt>り</ruby><ruby><rb>着</rb><rt>つ</rt>く</ruby>

Anki format: 辿[たど]り 着[つ]く

Currently the two predominant tools for inserting Furigana on Anki are the Japanese Support addon and the Migaku Japanese addon.

There are some issues with these tools, however, as they fail on one or more of these issues.

- Hiragana in the middle of the word

- Special Japanese characters and punctuation parsing

- User-defined mapping for specific words with multiple readings as well as names and places.

We will address these issues in this project.

Text segmentation is a method to break down a sentence into smaller chunks called morphemes. This will enable us to look up the individual readings of each morpheme and apply a Furigana reading when necessary.

Instead of the common segmentation tool Mecab, this project will use Sudachi, which features multiple text segmentation modes as well as Furigana retrieval.

We will also use the Wanakana package for Python to for basic Japanese text manipulation.

pip install sudachipy sudachidict_small wanakana-pythonThere are three segmentation modes in Sudachi - A, B, and C.

We will use mode C to get the reading of the longest possible entries in the sentence.

from sudachipy import tokenizer

from sudachipy import dictionary

tokenizer_obj = dictionary.Dictionary(dict_type="small").create()

def add_furigana(text):

tokens = [m for m in tokenizer_obj.tokenize(text, tokenizer.Tokenizer.SplitMode.C)]

parsed = ''

# ...

return parsedWe also need to define certain non-Japanese characters (but considered as Japanese symbols by the Wanakana library:

from wanakana import to_hiragana, is_japanese, is_katakana, is_hiragana

import string

JAPANESE_PUNCTUATION = ' 〜!?。、():「」『』0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ'

def is_japanese_extended(text):

return is_japanese(text) and text not in string.punctuation and text not in JAPANESE_PUNCTUATIONWe can then add the logic to our furigana insertion method. to_parse is only True if the (morpheme) token contains Japanese characters with Kanji. In other words it is False if the text is purely Hiragana or Katakana.

Special characters like 〜 have to be singled out as they are attached to tokens in Sudachi.

SPECIAL_CHARACTERS = '〜'

def add_furigana(text):

tokens = [m for m in tokenizer_obj.tokenize(text, tokenizer.Tokenizer.SplitMode.C)]

parsed = ''

for index, token in enumerate(tokens):

to_parse = is_japanese_extended(token.surface()) and not is_katakana(token.surface()) and not is_hiragana(token.surface())

if to_parse:

if token.surface()[-1] in SPECIAL_CHARACTERS:

parsed += add_furigana(token.surface()[:-1]) + token.surface()[-1]

else:

if index > 0:

parsed += ' '

reading = to_hiragana(token.reading_form())

# ...

else:

parsed += token.surface()

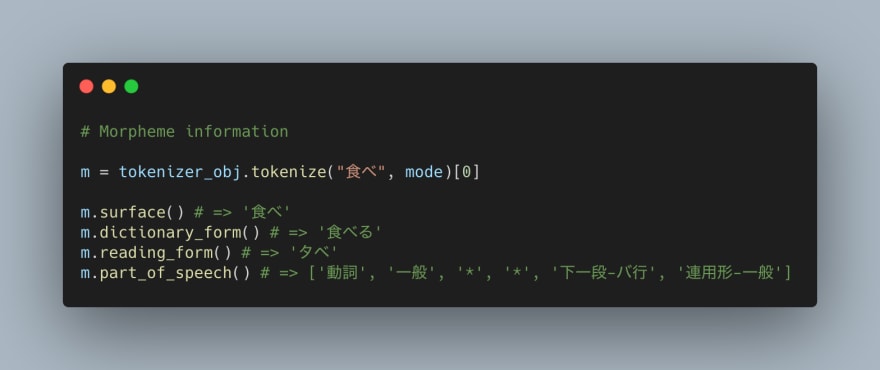

return parsedThere are multiple methods in the token item. We use the surface() method to get the original string, and reading_form() to get the Furigana reading of the morpheme.

An important note is that the reading_form() method returns the reading in Katakana, which needs to be converted to Hiragana and can be achieved with Wanakana's to_hiragana() method.

We can create a small dictionary to map custom items we want to do our own mapping. This can be everything from using more common readings (私 returns the reading わたくし instead of わたし) to names like 優那[ゆうな] which Sudachi incorrectly parses to 優[ゆう] 那[].

KANJI_READING_MAPPING = {

'私': '私[わたし]',

'貴女': '貴女[あなた]',

'何が': '何[なに]が',

'何を': '何[なに]を',

'我国': '我国[わがくに]',

'行き来': '行[い]き 来[き]',

'外宇宙': '外宇宙[がいうちゅう]',

'異星人': '異星人[いせいじん]',

'優那': '優那[ゆうな]',

'菜々美': '菜々美[ななみ]'

}We can also use a JSON file for easier data management but here we will keep it contained in the Python file.

To map them manually we check if their surface form is one of the keys of our KANJI_READING_MAPPING and append the corresponding value to our parsed result.

Sometimes the keyword may cover 2 tokens, for example 異星人 are broken down into the tokens 異星[いせい] and 人[にん], and 優那 into the tokens 優[ゆう] and 那[]. This is why we also check to see if the combined term of the current token and the next token token.surface() + tokens[index+1].surface() are also a key in our mapping dictionary.

token_indexes_to_skip = []

for index, token in enumerate(tokens):

if index in token_indexes_to_skip:

continue

# ...

if index < len(tokens)-1 and token.surface() + tokens[index+1].surface() in KANJI_READING_MAPPING:

parsed += KANJI_READING_MAPPING[tokens[index].surface() + tokens[index+1].surface()]

token_indexes_to_skip.append(index+1)

elif token.surface() in KANJI_READING_MAPPING:

parsed += KANJI_READING_MAPPING[token.surface()]

else:

# ...We add a list token_indexes_to_skip to store the index+1 tokens that are already used for the custom mapping. This way we can skip parsing it in the next iteration.

We set two pointers, surface_index to go through each character of the surface form, and reading_index for the *reading.

For example, the surface form 教える has the reading form オシエル and subsequently おしえる after Hiragana conversion.

If the character is Hiragana or Katakana, we directly add it to the parsed string and increment both the pointers.

If it is Kanji, we find the reading for the Kanji by using a temporary variable reading_index_tail and increment that until we reach the end of the reading string or until the next reading character matches the next Hiragana character in the surface string.

By matching え in おしえる to 教える we can parse the reading of 教 to おし. reading_index's value is 0 and reading_index_tail's value is 1

We will also add an extra condition to move the reading pointer if the subsequent Hiragana character is the same as the current one.

For example 可愛い is parsed as 可愛[かわい]い instead of 可愛[かわ]いい. By checking the repetition of the final い we could force the first い to be included in the reading.

surface_index = 0

reading_index = 0

while len(token.surface()) > surface_index:

if is_hiragana(token.surface()[surface_index]) or is_katakana(token.surface()[surface_index]):

parsed += token.surface()[surface_index]

reading_index += 1

surface_index += 1

else:

next_index = -1

for token_index in range(surface_index, len(token.surface())):

if is_hiragana(token.surface()[token_index]) or is_katakana(token.surface()[token_index]):

next_index = token_index

break

if next_index < 0:

parsed += to_anki_format(

index=surface_index, kanji=token.surface()[surface_index:],

reading=reading[reading_index:])

break

else:

reading_index_tail = reading_index

while reading[reading_index_tail] != token.surface()[next_index] or (reading_index_tail < len(reading)-1 and reading[reading_index_tail] == reading[reading_index_tail+1]):

reading_index_tail += 1

parsed += to_anki_format(

index = surface_index,

kanji = token.surface()[surface_index:next_index],

reading = reading[reading_index:reading_index_tail])

reading_index = reading_index_tail

reading_length = next_index - surface_index

if reading_length > 0:

surface_index += reading_length

else:

breakAnki format is pretty straightforward. We add an extra space in the beginning if the parsed Kanji is in the middle of the sentence.

def to_anki_format(index, kanji, reading):

return '{}{}[{}]'.format(' ' if index > 0 else '', kanji, reading)There has been a lot more steps and conditional checking but in the end our parser could handle a lot more cases and be highly customizable.

Note: to run this example, you may need to run

pip install sudachidict_smallin the repl shell to install the Sudachi dictionary.

So far we have covered how to add Furigana to Japanese text in the Anki format but we have not covered how to make an Anki addon (plugin). It could be a simple hotkey control to convert a field to Japanese with Furigana, or it could be an addon to batch insert Furigana to an existing Anki Deck. We will leave that for another post.

Assume you have a relational database of Japanese text and you added an extra column to store all the text with inserted Furigana. You would need to retrieve the data and show it on the Web in ruby format.

You will need to write a simple text formatting function. No need for segmentation libraries here. You can have a look at my React JS implementation if you need help.

25