
Adding Support for Multi-Audio Tracks in The Eyevinn Channel Engine

In this blog post, I'll describe how I extended the current demuxed audio feature so that the Channel Engine can play multiple audio tracks. I will assume that the reader is somewhat familiar with the HLS streaming format and the Channel Engine, or has at least read the documentation in the Channel Engine git repo or this article beforehand.

The Eyevinn Channel Engine is an open-source service that works well with muxed VODs. For demuxed VODs, however, it currently does the bare minimum: it uses only the first audio track it finds. This demuxed support can certainly be extended.

But before we get into it, I need to clarify what I mean when I say "audio tracks" and "audio groups", as I will be using these words throughout this post.

In an HLS master manifest, you can have a media item with the attribute TYPE=AUDIO and a reference to a media playlist manifest containing the audio segments. This is what I will refer to as an "audio track". Multiple audio tracks can exist in the HLS master manifest. These tracks can be grouped by the media item's GROUP-ID attribute. Audio tracks that share the same GROUP-ID value are what I will refer to as an "audio group". In other words, an audio group consists of one or more audio tracks. A GROUP-ID is an HLS requirement for media items.
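
To make the two terms concrete, here is a minimal sketch that reads the TYPE=AUDIO media items from a master manifest and groups the tracks by GROUP-ID. The helper names (parseAttributes, groupAudioTracks) and the example manifest are made up for illustration; this is not code from the Channel Engine.

```javascript
// Parse the attribute list of an HLS tag into a plain object.
// Quoted values may contain commas, so key=value pairs are matched with a
// regex instead of splitting the string on ",".
function parseAttributes(attributeList) {
  const attributes = {};
  const pattern = /([A-Z0-9-]+)=("[^"]*"|[^,]*)/g;
  let match;
  while ((match = pattern.exec(attributeList)) !== null) {
    // Strip the surrounding quotes from quoted values.
    attributes[match[1]] = match[2].replace(/^"|"$/g, "");
  }
  return attributes;
}

// Collect every TYPE=AUDIO media item ("audio track") and group the tracks
// by their GROUP-ID ("audio group").
function groupAudioTracks(masterManifest) {
  const groups = {};
  const tag = "#EXT-X-MEDIA:";
  for (const line of masterManifest.split("\n")) {
    if (!line.startsWith(tag)) continue;
    const attrs = parseAttributes(line.slice(tag.length));
    if (attrs["TYPE"] !== "AUDIO") continue;
    const groupId = attrs["GROUP-ID"];
    if (!groups[groupId]) groups[groupId] = [];
    groups[groupId].push({
      language: attrs["LANGUAGE"],
      name: attrs["NAME"],
      uri: attrs["URI"],
    });
  }
  return groups;
}

// Made-up example: two audio groups, the first one holding two audio tracks.
const exampleManifest = [
  "#EXTM3U",
  '#EXT-X-MEDIA:TYPE=AUDIO,GROUP-ID="stereo",LANGUAGE="en",NAME="English",URI="audio-en.m3u8"',
  '#EXT-X-MEDIA:TYPE=AUDIO,GROUP-ID="stereo",LANGUAGE="sv",NAME="Swedish",URI="audio-sv.m3u8"',
  '#EXT-X-MEDIA:TYPE=AUDIO,GROUP-ID="surround",LANGUAGE="en",NAME="English 5.1",URI="audio-en-51.m3u8"',
].join("\n");

const audioGroups = groupAudioTracks(exampleManifest);
console.log(Object.keys(audioGroups));
console.log(audioGroups["stereo"].map((track) => track.language));
```

Here "stereo" is one audio group with two audio tracks, and "surround" is a second audio group with a single track.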

Now, a quick overview as to how the old demuxed audio feature worked.

The Channel Engine creates a master manifest for its channel stream based on the specifications detailed in the ChannelManager object, which is passed as an option to the Channel Engine instance. If we pass a variable signaling that we want to use demuxed content, the Channel Engine takes an extra step when creating the master manifest.

It adds one media item of type audio to the master manifest, with the GROUP-ID attribute set to the first GROUP-ID found in a stream item in the VOD asset's master manifest.

Then when the audio track is requested by the player/client, the Channel Engine will respond with an audio playlist manifest. The playlist will have references to audio segments belonging to the VOD asset's first available audio track for that audio group. Even if there are multiple audio groups in the VOD, they won't be used. Even if there are multiple audio tracks within the audio groups, they won't be used. There is clearly potential here to add support for using more than one specific audio track and audio group.

The task came with some implementation challenges. A few things needed to be taken into account, namely:

- How to have the client select a track of a certain audio group.

- How to have the client select a certain language/audio track within the selected audio group.

- How to handle the case where the requested audio group is not present in the current VOD.

- How to handle the case where the requested language is not present amongst the current VOD's audio tracks for that audio group.

- How to handle VOD stitching when VODs have a different set of audio groups.

- How to handle VOD stitching when VODs have a different set of languages/audio tracks.

My solution in its current state does not cover every edge case, meaning that some of the challenges listed above have yet to be addressed. However, the implementation works fairly well for the most basic case and can be extended in the future to handle more edge cases.

My solution will assume that every VOD uses the same audio GROUP-ID and uses mostly the same languages in their audio tracks.

As a side note, VODs that do not use the same GROUP-ID will cause an error. A proposed solution is mentioned in the Future Work chapter.

The following steps give an overview of how I added support for multi-audio in the Channel Engine.

Step 1: Adding audio media items to the master manifest based on a set of predefined audio languages.

To address the challenge of how the client is to select a certain audio group and audio track, I extended the current method in place, which became a reiteration of the method used for selecting different VOD profiles.

The plan was to let the client select a track based on what's been predefined. So to have it work like it does for VOD profiles, I needed to extend the ChannelManager class with an extra function.

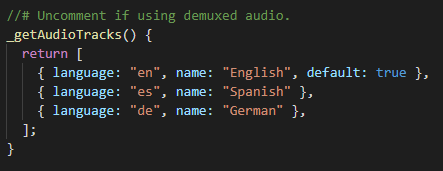

Media Items are added to the Master Manifest with attribute values set according to a predefined JSON object, defined in a _getAudioTracks() function in the ChannelManager class/object.
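
As a rough illustration of the idea, the sketch below shows what such a predefined track list and the resulting media items could look like. Only the function name _getAudioTracks() comes from the Channel Engine; the field names (language, name), the buildAudioMediaItems helper, the hard-coded CHANNELS value, the URI pattern, and the rule that the first track is the default are all assumptions made for this example.

```javascript
// Hypothetical, trimmed-down ChannelManager. Only the method name
// _getAudioTracks() is taken from the post; the field names are assumed.
class ExampleChannelManager {
  _getAudioTracks() {
    return [
      { language: "en", name: "English" },
      { language: "sv", name: "Swedish" },
    ];
  }
}

// Illustrative helper (not part of the Channel Engine): render one
// EXT-X-MEDIA line per predefined track. The group ID is passed in
// separately because it is not a field of the audio track JSON.
function buildAudioMediaItems(audioTracks, groupId, sessionId) {
  return audioTracks.map((track, index) => {
    // Assumption: the first predefined track is marked as the default.
    const isDefault = index === 0 ? "YES" : "NO";
    return (
      `#EXT-X-MEDIA:TYPE=AUDIO,GROUP-ID="${groupId}",LANGUAGE="${track.language}",` +
      `NAME="${track.name}",AUTOSELECT=YES,DEFAULT=${isDefault},CHANNELS="2",` +
      `URI="master-${groupId}_${track.language}.m3u8;session=${sessionId}"`
    );
  });
}

const manager = new ExampleChannelManager();
const mediaItems = buildAudioMediaItems(manager._getAudioTracks(), "audio", "1");
console.log(mediaItems.join("\n"));
```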

Now the resulting master manifest may look something like this...

#EXTM3U

#EXT-X-VERSION:4

## Created with Eyevinn Channel Engine library (version=2.19.3)

## https://www.npmjs.com/package/eyevinn-channel-engine

#EXT-X-SESSION-DATA:DATA-ID="eyevinn.tv.session.id",VALUE="1"

#EXT-X-SESSION-DATA:DATA-ID="eyevinn.tv.eventstream",VALUE="/eventstream/1"

# AUDIO groups

#EXT-X-MEDIA:TYPE=AUDIO,GROUP-ID="audio",LANGUAGE="en",NAME="English",AUTOSELECT=YES,DEFAULT=YES,CHANNELS="2",URI="master-audio_en.m3u8;session=1"

#EXT-X-MEDIA:TYPE=AUDIO,GROUP-ID="audio",LANGUAGE="sv",NAME="Swedish",AUTOSELECT=YES,DEFAULT=NO,CHANNELS="2",URI="master-audio_sv.m3u8;session=1"

#EXT-X-STREAM-INF:BANDWIDTH=6134000,RESOLUTION=1024x458,CODECS="avc1.4d001f,mp4a.40.2",AUDIO="audio"

master6134000.m3u8;session=1

#EXT-X-STREAM-INF:BANDWIDTH=2323000,RESOLUTION=640x286,CODECS="avc1.4d001f,mp4a.40.2",AUDIO="audio"

master2323000.m3u8;session=1

#EXT-X-STREAM-INF:BANDWIDTH=1313000,RESOLUTION=480x214,CODECS="avc1.4d001f,mp4a.40.2",AUDIO="audio"

master1313000.m3u8;session=1

Note: GROUP-ID is not a field in the audioTrack JSON, so the GROUP-ID in the master manifest's media items is permanently set to the first GROUP-ID found in the very first VOD. This is how it worked before, and my feature extension has kept it that way for now. See Delimitations.

Next was to make some small adjustments to the route handler (specifically _handleAudioManifest()) for the endpoint of a URI in a media item.

The Channel Engine reads parameter values from the client request in a clever way. Values can be extracted from the request path itself.

The values extracted are the audio group ID and the language. These tell us which segments to include in the media manifest response.
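
A minimal sketch of that extraction is shown below. It assumes the path follows the pattern seen in the media item URIs of the manifest above (master-<group ID>_<language>.m3u8;session=<id>); the helper name parseAudioManifestPath is hypothetical, and the actual logic in _handleAudioManifest() may differ.

```javascript
// Hypothetical helper: pull the audio group ID, language, and session ID out
// of a request path such as "/master-audio_en.m3u8;session=1".
// Assumption: the language never contains an underscore, so the LAST "_"
// before ".m3u8" separates the group ID from the language.
function parseAudioManifestPath(path) {
  const match = /master-(.+)_([^_/;]+)\.m3u8;session=([^/;]+)$/.exec(path);
  if (!match) return null; // not an audio manifest request
  const [, audioGroupId, language, sessionId] = match;
  return { audioGroupId, language, sessionId };
}

const audioRequest = parseAudioManifestPath("/master-audio_en.m3u8;session=1");
const videoRequest = parseAudioManifestPath("/master6134000.m3u8;session=1");
console.log(audioRequest);
console.log(videoRequest);
```

The first call yields the group ID "audio" and the language "en"; the second path is a video variant, so the helper returns null.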

Step 2: Make it possible in hls-vodtolive to load all audio groups, and all audio tracks for each audio group.

Now that we know what segments the client is looking for, how do we find them? This is where the Eyevinn dependency package hls-vodtolive comes into play.

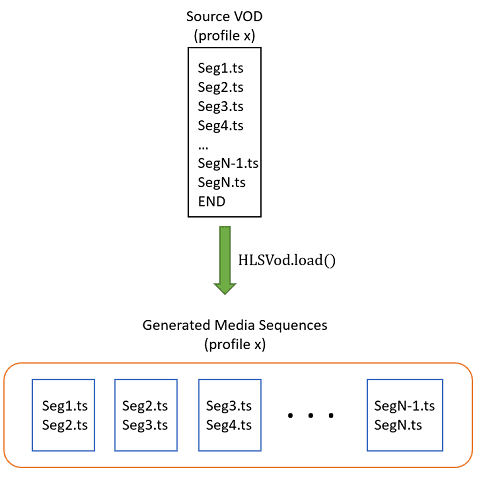

In short, the hls-vodtolive package provides an HLSVod class which, given a VOD master manifest as input, loads all segments referenced in that manifest and stores them in a JSON object organized by profile. An HLSVod object also divides the segments into an array of subsets that we call media sequences. Each media sequence is then used to create a pseudo-live media manifest.

This class, however, did not properly load segments from audio media manifests. An extension was needed.
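
The notion of media sequences can be pictured as a sliding window over the segment list. The sketch below is a deliberate simplification and not the hls-vodtolive implementation: the fixed windowSize parameter, the buildMediaSequences helper, and the bandwidth-keyed example object are assumptions for illustration only.

```javascript
// Simplified sketch: slide a fixed-size window over a VOD's segment list.
// Each window is one "media sequence", i.e. the segments a live-looking
// playlist would list at one point in time. windowSize is a made-up parameter.
function buildMediaSequences(segments, windowSize) {
  const sequences = [];
  const lastStart = Math.max(segments.length - windowSize, 0);
  for (let start = 0; start <= lastStart; start++) {
    sequences.push(segments.slice(start, start + windowSize));
  }
  return sequences;
}

// Segments for one video profile (assumed here to be keyed by bandwidth).
const segmentsByProfile = {
  "6134000": ["seg0.ts", "seg1.ts", "seg2.ts", "seg3.ts", "seg4.ts"],
};

const mediaSequences = buildMediaSequences(segmentsByProfile["6134000"], 3);
console.log(mediaSequences);
```

With five segments and a window of three, this produces three media sequences, each shifted by one segment from the previous one.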

Without going into detail, the HLSVod was changed so that it loads all audio segments from every audio media manifest and organizes them by audio group, then by language. Effectively, it stores every audio segment available in the original VOD manifest.
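
The nested layout could look roughly like the sketch below. The object shape (group ID, then language, then a segment list) follows the description above, but the helper name storeAudioSegments and the segment file names are hypothetical. The rule that the first track of a given language wins matches the current behaviour for duplicate languages described under future work.

```javascript
// Sketch of the nested layout: audioSegments[groupId][language] = [segment, ...].
// Returns true if the track was stored, false if it was skipped.
function storeAudioSegments(audioSegments, groupId, language, segments) {
  if (!audioSegments[groupId]) audioSegments[groupId] = {};
  // Duplicate languages within a group are not supported: the first one wins.
  if (audioSegments[groupId][language]) return false;
  audioSegments[groupId][language] = segments.slice();
  return true;
}

const audioSegments = {};
storeAudioSegments(audioSegments, "audio", "en", ["en-0.aac", "en-1.aac"]);
storeAudioSegments(audioSegments, "audio", "sv", ["sv-0.aac", "sv-1.aac"]);
// A second "en" track (for example a commentary track) is skipped.
const storedDuplicate = storeAudioSegments(audioSegments, "audio", "en", ["en-commentary-0.aac"]);

console.log(Object.keys(audioSegments["audio"]));
console.log(storedDuplicate);
```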

Step 3: Make it so that you can stitch audio tracks between two HLSVods.

This step involves more expansions to the hls-vodtolive package. Expansions are done to the HLSVod class function _loadPrevious().

You see, an HLSVod can load after another when using the function loadAfter(), and when doing so will inherit some segments from the HLSVod before it. This basically makes it possible to create media sequences that smoothly go from the contents of one VOD to the other, using HLS discontinuity tags.

For more information, see the Channel Engine chapter in the Server-less OTT-Only Playout article.

The tricky part is deciding who inherits what from whom.

Ideally, if the two HLSVods in question have the same set of languages and audio group names, then it's fairly straightforward who gets what. But if they have nothing in common, it suddenly becomes ambiguous. However, it is probably more likely that the Channel Engine user is using VOD assets that have at least some languages/audio tracks in common.

That being said, it is possible that the VOD assets have named their GROUP-IDs differently. As of now, it is assumed that this is not the case. This is addressed in the Delimitations chapter, and then again in the Future Work chapter below.

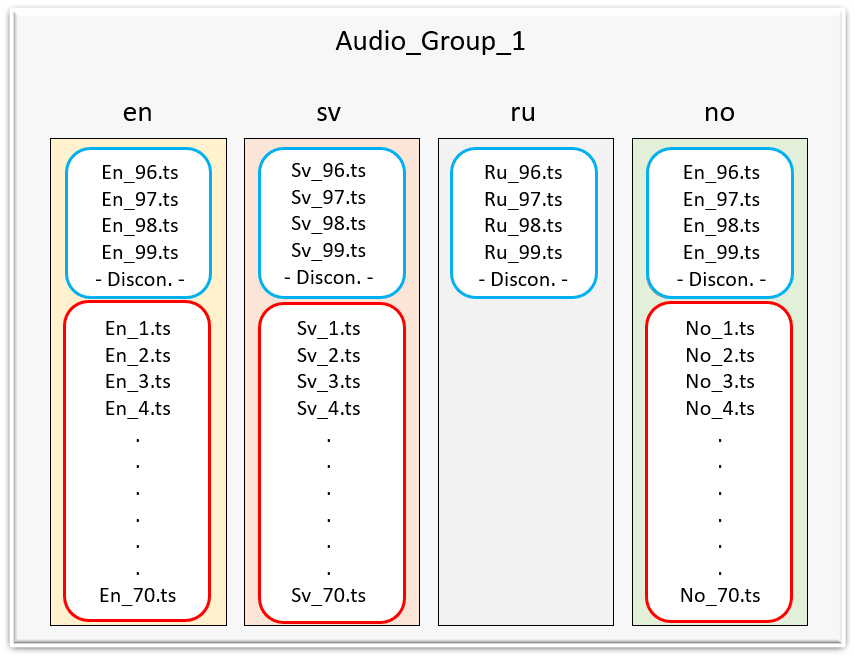

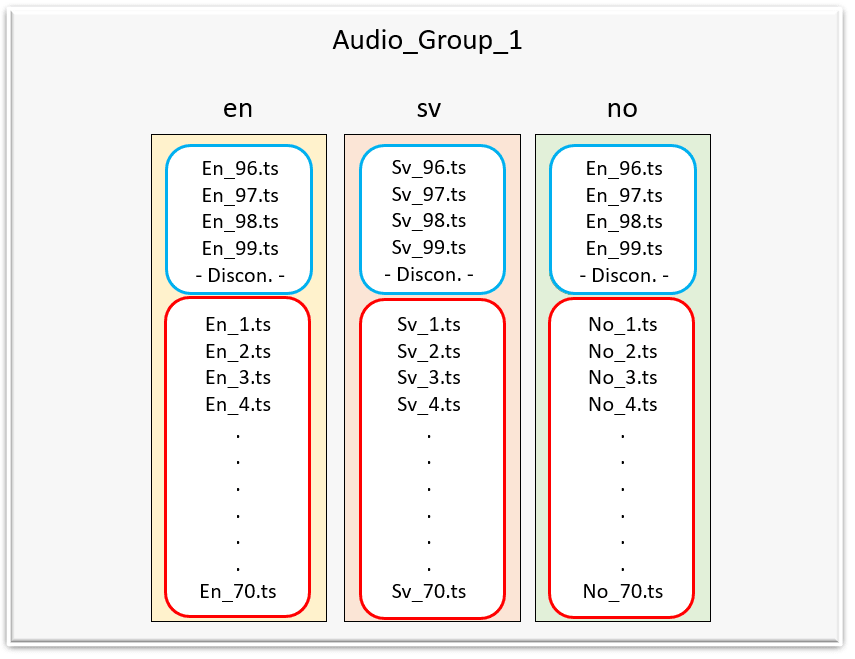

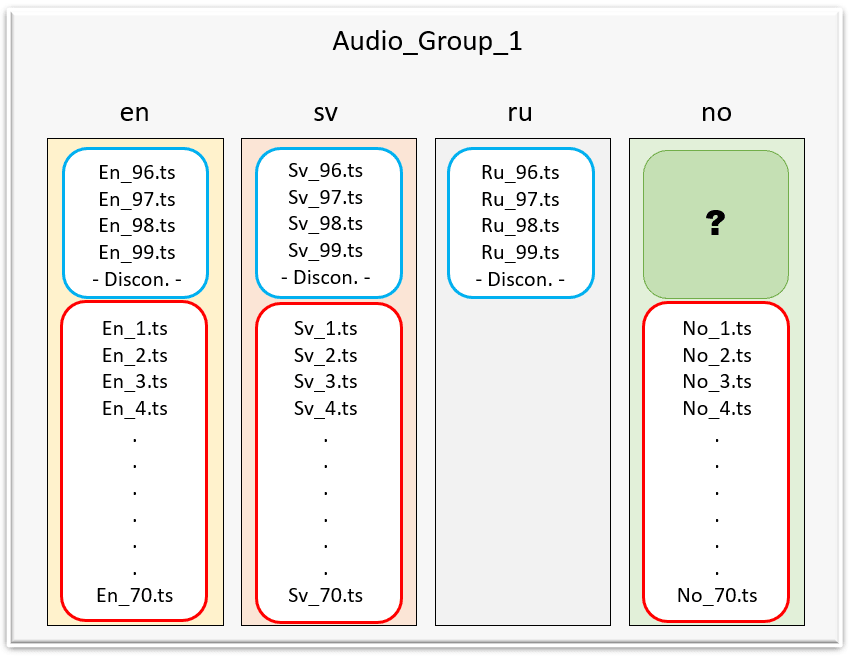

The figures above give a visual representation of the challenge. They depict a scenario where the prior HLSVod has audio tracks for English, Swedish, and Russian, while the current HLSVod has audio tracks for English, Swedish, and Norwegian.

The outer box represents the current HLSVod object, and the inner colored boxes represent audio tracks in the HLSVod.

The blue and red represent the prior VOD segments and current VOD segments respectively. Again it is assumed that both HLSVods have the same audio group.

So... matching languages inherit segments from each other, but what segments do the unique languages inherit?

The answer is... any segments really. What is important is that we can enable generating media sequences that transition smoothly and that the client gets the proper audio for the VOD. Sure it might not be in the expected language, but at least the HLS player will not be confused.

However, I thought it would be best for the unique languages to inherit segments from the previous VOD's default language, or more specifically the first loaded language (which usually corresponds to the default language in a demuxed VOD).

Lastly, if a loaded language from the previous VOD was not inherited at all, we simply remove it from our collection of audio tracks for the audio group, so that we do not trigger any false positives when a request comes in for that language.
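
The inheritance rules described above can be sketched as follows, under the stated assumption that both VODs share one audio group. This is not the actual _loadPrevious() code: the function name stitchAudioTracks, the plain-object track maps, and the discontinuity marker object are hypothetical stand-ins.

```javascript
// prevTracks / currTracks: { [language]: segment[] } for one shared audio group.
// For language-code keys, object key order is insertion order, so the first
// key is the first loaded language.
function stitchAudioTracks(prevTracks, currTracks) {
  const fallbackLanguage = Object.keys(prevTracks)[0];
  const stitched = {};
  for (const [language, segments] of Object.entries(currTracks)) {
    // Matching languages inherit from each other; unique languages inherit
    // from the previous VOD's first loaded language.
    const source = prevTracks[language] ? language : fallbackLanguage;
    const inherited = prevTracks[source] || [];
    stitched[language] = [...inherited, { discontinuity: true }, ...segments];
  }
  // Languages that exist only in prevTracks are never copied over, which
  // removes them from the collection.
  return stitched;
}

const prevTracks = {
  en: ["prev-en-0", "prev-en-1"],
  sv: ["prev-sv-0", "prev-sv-1"],
  ru: ["prev-ru-0", "prev-ru-1"],
};
const currTracks = {
  en: ["curr-en-0"],
  sv: ["curr-sv-0"],
  no: ["curr-no-0"],
};

const stitched = stitchAudioTracks(prevTracks, currTracks);
console.log(Object.keys(stitched));
console.log(stitched.no);
```

In this example the Norwegian track starts with the previous VOD's English segments, and Russian is no longer part of the collection.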

But this brings up an interesting question. What do we do if a request comes for a language that is not in the HLSVod's collection?

Well, we simply provide a fallback track. In other words, if the client requests a Russian audio track but the current VOD only has English and Swedish, we respond with the English audio track instead, assuming that English was the first loaded audio track for the HLSVod.
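
A minimal sketch of that fallback, assuming the tracks of an audio group are kept in a plain object in load order (the selectAudioTrack name is hypothetical):

```javascript
// audioTracks: { [language]: segment[] } for the requested audio group,
// with keys in the order the tracks were loaded.
function selectAudioTrack(audioTracks, requestedLanguage) {
  const languages = Object.keys(audioTracks);
  if (languages.length === 0) return null; // nothing to fall back to
  const hasLanguage = Object.prototype.hasOwnProperty.call(audioTracks, requestedLanguage);
  // Fall back to the first loaded language when the requested one is missing.
  const language = hasLanguage ? requestedLanguage : languages[0];
  return { language, segments: audioTracks[language] };
}

const currentVodTracks = {
  en: ["en-0.aac", "en-1.aac"],
  sv: ["sv-0.aac", "sv-1.aac"],
};

const russianRequest = selectAudioTrack(currentVodTracks, "ru");
const swedishRequest = selectAudioTrack(currentVodTracks, "sv");
console.log(russianRequest.language);
console.log(swedishRequest.language);
```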

And that's all there was to it!

After these steps, it became possible to play, select, and transition between audio tracks for demuxed VODs in the Channel Engine. Some extensions remain as future work:

- Add support for a fallback audio group

Adding a fallback audio group for when an audio group is not found will help ensure that the Channel Engine stream always has audio to play. When doing this, it is important to make sure that every audio track in the fallback audio group has segments from the prior VOD stitched in front of it. It would probably work, again, to distribute segments from the prior VOD's first loaded audio track in its first loaded audio group.

- Add support for presetting, selecting, and using multiple audio groups.

As of now, we only support the use of a single audio group. If there is ever a need to use more than one audio group at a time in the Channel Engine, we would need to expand the channel options in the ChannelManager. However, there will be a challenge in how we then map between audio groups with different names among VODs.

- Loading audio tracks that have the same language in a single VOD.

We do not support the loading of duplicate languages, but they do occur in HLS manifests. For example, a VOD could have an English track and an English Commentary track in it, both setting their language value to "en". In our current state, only the first English track would be loaded.

Now the use case for having an English commentary track as a preset track is not very common, I'd imagine. But it could be nice to have support for it if it ever became a desired feature in Channel Engine.

That said... An immediate workaround would be to prepare the HLS manifest beforehand and just make sure that every media item has a unique language value.

Nicholas Frederiksen is a developer at Eyevinn Technology, the European leading independent consultancy firm specializing in video technology and media distribution.

If you need assistance in the development and implementation of this, our team of video developers are happy to help you out. If you have any questions or comments just drop a line in the comments section to this post.
