25

Fetch Application Inventory using Systems Manager

Background

We need a way to report on “software versions” deployed to Cloud.

Objective

Get application inventory from each EC2 instance and store it in centralized account S3 bucket. To query the information from s3 bucket, we are integrating Glue, Athena (from another account).

Proposed Architecture

Implementation Procedure

- Enable system manager inventory in (PROD/NONPROD) each account (one-time activity)

- Create S3 bucket in centralised account to receive logs from system manager (one-time activity)

- Create resource data sync in each account (PROD/NONPROD) to send the log to centralised bucket (one-time activity)

- Create Lambda function in centralised account to assign object level permission to each object if the analytical services are running in different account, so object level permission needs to be updated (work around given by AWS)

- Configure Glue and Athena in required account to query the S3 bucket (s3://ssm-application-inventory-logs).

Enable System Manager inventory in each account

Its a one time activity in each account , we have to enable this feature using AWS console.

Glue Details

Create Glue crawler, Glue database and Table in required account to match the ssm inventory output.

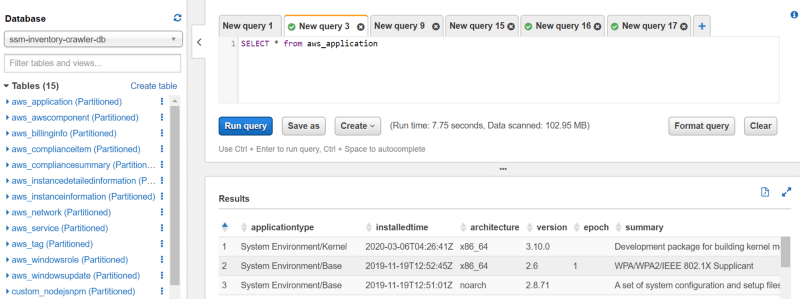

Athena Query

- This service is enabled in a different account.

Run Crawler For Latest Report

- To get latest report we have to run Glue crawler manually. Post successful execution, follow the Athena examples.

EXAMPLE 1:

- To fetch whole inventory details from all the accounts, use the below query.

SELECT * from aws_application

EXAMPLE 2:

- To fetch application wise instance details use the below query — replace ‘java%’ with your application name.

SELECT java.name, java.version, a.resourceid, accountid

FROM “ssm-inventory-crawler-db”.”aws_application” a, (select distinct (resourceid), version, name from “ssm-inventory-crawler-db”.”aws_application” where name like ‘java%’) java

where a.resourceid = java.resourceid

order by a.resourceidEXAMPLE 3:

- To fetch custom inventory library like “python” using Athena,

SELECT * FROM “ssm-inventory-crawler-db”.”custom_python2libraries”;EXAMPLE 4:

To fetch custom inventory library like “npm” using Athena,

SELECT * FROM “ssm-inventory-crawler-db”.”custom_nodejsnpm”;Run Query in an automated way using Lambda

- You can use below python script to query Athena every month day 1 using CloudWatch event rule and Lambda.

#!/usr/bin/python3

import boto3

import datetime

import os

import json

import logging

# boto3.setup_default_session(profile_name='saml',region_name='ap-southeast-1')

logger = logging.getLogger()

logger.setLevel(logging.DEBUG)

def poll_status(_id, message):

athena= boto3.client('athena',region_name='ap-southeast-1')

result = athena.get_query_execution( QueryExecutionId = _id )

state = result['QueryExecution']['Status']['State']

while state == "QUEUED":

result = athena.get_query_execution( QueryExecutionId = _id )

state = result['QueryExecution']['Status']['State']

while state == "RUNNING":

result = athena.get_query_execution( QueryExecutionId = _id )

state = result['QueryExecution']['Status']['State']

if state == "FAILED":

logger.info("FAILED-LOG: {} ".format(result))

# return result

else:

logger.info("SUCCESS-LOG : {} ".format(result))

sns_arn = os.environ["SNS_ARN"] #"arn:aws:sns:ap-southeast-1:928167378288:application-inventory-logs"

notification= _sns_notification(sns_arn,message)

return result

def run_query(query, database, s3_output):

client = boto3.client('athena',region_name='ap-southeast-1')

response = client.start_query_execution(

QueryString=query,

QueryExecutionContext={

'Database': database

},

ResultConfiguration={

'OutputLocation': s3_output,

}

)

print('Execution ID: ' + response['QueryExecutionId'])

QueryExecutionId = response['QueryExecutionId']

return QueryExecutionId

# local_filename = QueryExecutionId + '.csv'

def _sns_notification(snsarn,message):

client = boto3.client('sns')

response = client.publish(TargetArn=snsarn,Message=json.dumps({'default': json.dumps(message)}),MessageStructure='json')

logger.warning("SNS-NOTIFICATION : {} ".format(response))

return response

def lambda_handler(event, context):

#Athena configuration

s3_output = os.environ['ATHENA_OUTPUT_BUCKET'] #'s3://athena-query-result-logs/result/'

database = os.environ['GLUE_DB'] #'ssminventorycrawlerdb'

table = os.environ["GLUE_TABLE"] #'aws_application' #

app_search = os.environ["APPLICATION_LIST"] # "java% python% node%" #

app_list= app_search.split(' ')

sns_arn = "arn:aws:sns:ap-southeast-1:928167378288:application-inventory-logs" #os.environ["SNS-ARN"]

#Athena database and table definition

#Query definitions & Execute all queries

python_library = "SELECT * FROM "'"%s"'"."'"%s"'" ;" % (database, "custom_python2libraries")

npm_librarary = "SELECT * FROM "'"%s"'"."'"%s"'" ;" % (database, "custom_nodejsnpm")

all_app = "SELECT * FROM "'"%s"'"."'"%s"'" ;" % (database, table)

queries = [ python_library, npm_librarary, all_app ]

for qa in queries:

print("Executing query: %s" % (qa))

resp = run_query(qa, database, s3_output)

logger.info("QUERY-ID: {} ".format(resp))

s3_key = resp + '.csv'

message= {"Application-Inventory": s3_key,"Bucket-Details": s3_output,"App-name":qa}

logger.info("MESSAGE-DETAILS : {} ".format(message))

result = poll_status(resp, message)

for app in app_list:

specific_app = ("""SELECT java.name, java.version, a.resourceid, accountid

FROM "%s"."%s" a, (select distinct (resourceid), version, name from "%s"."%s" where name like '%s') java

where a.resourceid = java.resourceid

order by a.resourceid """) % (database, table,database, table,app)

# queries = [ python_library, npm_librarary, all_app, specific_app ]

# for q in queries:

print("Executing query: %s" % (specific_app))

res = run_query(specific_app, database, s3_output)

logger.info("QUERY-ID: {} ".format(res))

s3_key = res + '.csv'

messages= {"Application-Inventory": s3_key,"Bucket-Details": s3_output,"App-name":specific_app}

logger.info("MESSAGE-DETAILS : {} ".format(messages))

results = poll_status(res, messages)

if __name__ == '__main__':

lambda_handler("event", "context")Resources

- Custom Inventory:

- CFN resource data sync:

25