
What is Virtualization? | Bare Metal vs Virtual Machines vs Containers

Link to video: https://www.youtube.com/watch?v=EYOGh2rK-h8

Virtualization simplified and the comparison between Bare Metal, Virtual Machines (VMs) & Containers

"Cloud Computing! DevOps! Docker! Kubernetes! Serverless!…"

If you, as a Software Engineer, haven't come across buzzwords like these lately, you have probably been living under a rock.

But what do all these technologies seem to embrace?

VIRTUALIZATION!!!

But, what actually is Virtualization?

I believe it is best described with an example - let's take the following…

Suppose company 'XYZ' needs to run 2 different applications: App A & App B. Each app needs a particular operating system, or has a set of dependencies/libraries that are incompatible with the other app.

A fairly common scenario.

In a world without virtualization, the company naturally gets a separate physical machine for each app.

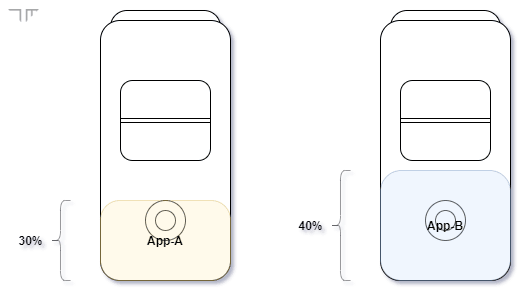

Notice that App A utilizes only 30% of the first machine, while App B utilizes only 40% of the second.

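We can immediately note the major downsides to this approach:

Underutilized hardware: each machine sits mostly idle, with 70% and 60% of their respective capacity going unused.

Higher costs: the company has to buy, power and maintain two machines, even though their combined load would fit on one.

Without a better alternative, these downsides and the extra overhead simply had to be accepted. That is why this was the traditional way of running applications for many years.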

Fortunately, we already have the solution to this problem, and it's called… (yes, you've guessed it right) Virtualization!

Using virtualization, we can make a single computer act like multiple virtual ones.

"Is this a joke??!"

Absolutely not!

Virtualization allows company XYZ to run both of their apps A & B on a single machine, saving them lots of money and pain in the 🍑.

Running App A and B on a single machine using virtualization

With virtualization, the single physical machine can be partitioned into several smaller ones, each of which acts as if it were a completely separate machine; hence the term "virtual".

A program running inside a virtual environment only has access to the resources assigned to that virtual machine or partition.

So, company XYZ could even run a third application on the same machine if it consumed less than 30% of the machine's resources! (Although it is recommended not to run machines at 100% load for long periods.)
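The arithmetic behind the consolidation is simple enough to sketch. Below is a toy check, assuming App A and App B originally ran on identical hardware (so their percentages can be added) and using whole-number percentages to avoid floating-point noise. The function names and the 80% "headroom" ceiling are hypothetical, chosen only to illustrate the advice about not running at 100% load.

```python
# Toy consolidation check using the utilization figures from the example.
# Assumption: App A and App B ran on identical machines, so their load
# percentages can simply be added on a single shared machine.

def combined_load(loads_pct: list[int]) -> int:
    """Total load (in %) if all apps share a single machine."""
    return sum(loads_pct)


def fits(loads_pct: list[int], new_pct: int, limit_pct: int = 100) -> bool:
    """True if adding an app using new_pct% keeps the machine at or below limit_pct."""
    return combined_load(loads_pct) + new_pct <= limit_pct


apps = {"App A": 30, "App B": 40}
loads = list(apps.values())

print(f"Combined load: {combined_load(loads)}%")        # Combined load: 70%
print(f"Spare capacity: {100 - combined_load(loads)}%")  # Spare capacity: 30%

# A hypothetical third app using 25% fits under a 100% ceiling...
print(fits(loads, 25))                 # True
# ...but not under an assumed 80% headroom policy.
print(fits(loads, 25, limit_pct=80))   # False
```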

We can now note the major benefits of virtualization:

Hardware Cost Savings: Better hardware utilization enables us to use a physical machine's capacity more efficiently by distributing its resources (RAM, CPU, storage etc.) among many projects, users or environments.

Application isolation: Each virtual environment acts like a separate machine, so it does not interfere with applications running in other environments (e.g. by causing conflicting dependencies). It also means that each application can be developed & deployed separately.

Improved scalability & availability: Virtual resources are much easier and faster to create, manage and tear down than new hardware is to acquire. Therefore, they are more scalable, and they make it easier to build highly available systems.

Centralized administration & security: Since the physical resources are decoupled from the applications that use them, the resources can be managed centrally and security policies can be enforced centrally.

There are several types of virtualization, like:

Hardware virtualization (VMs)

Operating system virtualization (Containers)

Network virtualization

Data virtualization

etc.

However, we will only focus on the first two, as the rest are beyond the scope of this blog.

Still with me? Great! :)

Bare metal is the first approach that company XYZ took.

The traditional way of running an application was directly on dedicated hardware - i.e. one machine, one operating system (OS), one service/task.

The biggest benefit of bare metal is that it can provide the best performance, since there is no virtualization layer between the host OS and the libraries and apps. That said, the performance penalty of virtualization is usually small enough to be negligible for most use cases.

When organizations chose an IT stack to work with, they were usually locked in to that vendor's specific hardware, OS or license agreements, making it very difficult to run multiple applications on a single machine.

As a result, the physical resources were highly underutilized, could not easily be partitioned among different teams/apps, and were difficult to scale, since a new machine for every new app is expensive to buy and maintain.

Something had to be done…

From the late 1990s onwards, virtualization on commodity servers really took off, addressing the problems with bare-metal deployments. Virtual Machines (VMs) were being widely adopted!

The key piece of technology that makes VMs work is called a hypervisor.

A hypervisor is software that emulates or virtualizes computer hardware, from a single device up to an entire computer, allowing the available physical resources to be partitioned into multiple virtual ones, called Virtual Machines (VMs).

The computer that runs a hypervisor is called the Host System, and the VMs created and managed by the hypervisor are called the Guest Systems.
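To make the host/guest relationship concrete, here is a toy model of a hypervisor carving a host's CPUs and RAM into VMs. It is only a sketch: the `Hypervisor` and `VM` classes and the `create_vm`/`free` methods are hypothetical names, not any real hypervisor's API, and unlike real hypervisors (which can overcommit CPU and memory) it uses strict partitioning to keep the idea simple.

```python
from dataclasses import dataclass, field


@dataclass
class VM:
    """A guest system; it only 'sees' the resources allocated to it."""
    name: str
    vcpus: int
    ram_gb: int


@dataclass
class Hypervisor:
    """Toy model: a host whose physical CPUs and RAM are split among guests.

    Real hypervisors can overcommit CPU and memory; this sketch uses strict
    partitioning instead.
    """
    cpus: int
    ram_gb: int
    guests: list[VM] = field(default_factory=list)

    def free(self) -> tuple[int, int]:
        """Return (free CPUs, free RAM in GB) left on the host."""
        used_cpus = sum(vm.vcpus for vm in self.guests)
        used_ram = sum(vm.ram_gb for vm in self.guests)
        return self.cpus - used_cpus, self.ram_gb - used_ram

    def create_vm(self, name: str, vcpus: int, ram_gb: int) -> VM:
        """Carve a new guest out of the host's remaining resources."""
        free_cpus, free_ram = self.free()
        if vcpus > free_cpus or ram_gb > free_ram:
            raise RuntimeError(f"not enough free resources on host for {name!r}")
        vm = VM(name, vcpus, ram_gb)
        self.guests.append(vm)
        return vm


# Hypothetical host with 16 CPUs and 64 GB of RAM running two guests.
host = Hypervisor(cpus=16, ram_gb=64)
host.create_vm("vm-app-a", vcpus=4, ram_gb=16)
host.create_vm("vm-app-b", vcpus=6, ram_gb=24)
print(host.free())  # (6, 24)
```

A request that exceeds what is left (say, 8 more CPUs here) raises an error instead of silently stealing resources from another guest, which mirrors the point that each VM only gets what it was assigned.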

Hypervisors can run directly on top of the hardware (type-1) or on top of an OS (type-2). However, the distinction between the two is sometimes hard to draw, as in the case of Kernel-based Virtual Machine (KVM), the virtualization module within the Linux kernel.
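If you are curious whether a Linux machine can act as a KVM host, a rough check looks at two things: whether the CPU advertises hardware virtualization extensions (the `vmx` flag for Intel VT-x or `svm` for AMD-V in `/proc/cpuinfo`) and whether the `/dev/kvm` device node exists, which it does once the KVM kernel module is loaded. This is a sketch assuming an x86 Linux host; the function names are hypothetical, and a present `/dev/kvm` does not guarantee your user has permission to open it.

```python
import os
import platform


def has_virt_flags(cpuinfo_text: str) -> bool:
    """True if a 'flags' line lists Intel VT-x ('vmx') or AMD-V ('svm')."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            flags = line.split(":", 1)[-1].split()
            if "vmx" in flags or "svm" in flags:
                return True
    return False


def kvm_status() -> dict[str, bool]:
    """Rough check of whether this (x86) Linux host can run KVM guests."""
    is_linux = platform.system() == "Linux"
    cpu_ok = False
    if is_linux:
        try:
            with open("/proc/cpuinfo") as f:
                cpu_ok = has_virt_flags(f.read())
        except OSError:
            pass  # /proc not available; leave cpu_ok as False
    return {
        "linux": is_linux,
        "cpu_virt_extensions": cpu_ok,
        # /dev/kvm appears once the kvm module (kvm_intel or kvm_amd) is loaded.
        "dev_kvm_present": is_linux and os.path.exists("/dev/kvm"),
    }


print(kvm_status())
```

Inside a VM these checks may come back False unless the outer hypervisor exposes nested virtualization.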

VMs are great, in that they solved many of the problems we faced with bare metal, e.g. better resource utilization, environment isolation etc.

But they still have a major drawback: each VM runs an entire guest operating system on top of its own virtualized hardware.

Sounds redundant? Can we do better?

Enter… Containers!

Containers virtualize just the operating system, instead of virtualizing the entire physical machine like VMs.

They don't need a guest OS or a hypervisor. Instead, all containers running on a host machine share the host system's OS kernel, and only contain the application(s) along with their libraries and dependencies.
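One quick way to see the shared kernel for yourself is to ask the OS which kernel a process is running on. The `kernel_info` helper below is a hypothetical name, built only on Python's standard `platform` module.

```python
import platform


def kernel_info() -> dict[str, str]:
    """Report the OS kernel this process is running on."""
    return {
        "system": platform.system(),    # e.g. "Linux"
        "release": platform.release(),  # kernel release string
    }


if __name__ == "__main__":
    print(kernel_info())
```

Run it on a Linux host, then inside a container on that same host (for instance with `docker run --rm python:3 python -c "import platform; print(platform.release())"`), and both should report the same kernel release, because the container has no kernel of its own. A VM, by contrast, reports its guest kernel. On Docker Desktop for macOS or Windows, containers run inside a Linux VM, so they report that VM's kernel.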

Because they share the host's kernel, containers are extremely lightweight & fast!
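A back-of-the-envelope calculation shows where the savings come from: with VMs, every app pays for a full guest OS, while containers only add a small per-container overhead. All the numbers below are hypothetical placeholders rather than measurements, and the sketch deliberately ignores the host OS and hypervisor/runtime overhead, which both setups share.

```python
# Back-of-the-envelope memory comparison. All figures are hypothetical
# placeholders, not measurements; the host OS and hypervisor/runtime
# overhead are left out because both setups pay for them.

def vm_footprint_mb(n_apps: int, app_mb: int, guest_os_mb: int) -> int:
    """Each app runs in its own VM, so each one carries a full guest OS."""
    return n_apps * (guest_os_mb + app_mb)


def container_footprint_mb(n_apps: int, app_mb: int, per_container_mb: int) -> int:
    """Containers share the host kernel; each adds only a small overhead."""
    return n_apps * (app_mb + per_container_mb)


N_APPS = 10
APP_MB = 200           # hypothetical memory used by each app
GUEST_OS_MB = 1024     # hypothetical memory used by each guest OS
PER_CONTAINER_MB = 10  # hypothetical per-container overhead

print(vm_footprint_mb(N_APPS, APP_MB, GUEST_OS_MB))              # 12240
print(container_footprint_mb(N_APPS, APP_MB, PER_CONTAINER_MB))  # 2100
```

The exact figures vary widely in practice. The point is structural: the VM cost grows with one guest OS per app, while the container cost does not.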

Additionally, since containers bundle an app with its dependencies and are not tied to specific hardware, they can run on a myriad of different platforms, data centers and cloud providers, as long as the host provides a compatible kernel and CPU architecture (e.g. a Linux kernel for Linux containers). This makes them very flexible & portable!

This is why containers form the basis of many microservices and cloud-native applications, underpin DevOps practices such as CI/CD pipelines, and much, much more.

That said, containers are not a one-size-fits-all solution.

Since many containers share the same host kernel and do not virtualize the hardware, they provide a lower level of isolation than VMs. This could give rise to security or compliance issues, which might be an important consideration in some industries or projects.
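Under the hood, Linux containers are isolated mainly with kernel features such as namespaces (which limit what a process can see) and cgroups (which limit what it can use). The sketch below lists the namespaces a process belongs to by reading the symlinks under `/proc/<pid>/ns`; the `namespaces` helper is a hypothetical name, and it simply returns an empty dict on non-Linux systems or when `/proc` is unavailable.

```python
import os


def namespaces(pid: str = "self") -> dict[str, str]:
    """Map namespace type -> namespace ID for a process (Linux only)."""
    ns_dir = f"/proc/{pid}/ns"
    if not os.path.isdir(ns_dir):
        return {}  # not Linux, or /proc is not mounted
    result = {}
    for name in sorted(os.listdir(ns_dir)):
        try:
            # Each entry is a symlink whose target looks like "pid:[4026531836]".
            result[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue  # e.g. permission denied in a restricted sandbox
    return result


for ns_type, ns_id in namespaces().items():
    print(f"{ns_type:>18}  {ns_id}")
```

Comparing the output on the host with the output inside a container typically shows different IDs for several namespaces (such as `pid`, `net` and `mnt`), even though both processes are served by the very same kernel. That shared kernel is exactly why a kernel-level vulnerability can affect every container on the host, whereas each VM has its own.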

But this is not a concern for most of today's applications, so… chill out!

From the sufferings of bare metal came the virtualization revolution. It powers a large share of today's real-world applications, including what we now know as Cloud Computing!

Like most other areas of technology, virtualization continues to evolve, changing how businesses operate and accelerating innovation. The concept of OS-level virtualization has been around for a while (since at least the early 2000s), but the modern container era began with the introduction of Docker in 2013.

Docker is one of my favorite technologies, and I'm preparing a series of tutorials on it to make it as easy as possible to learn, especially for absolute beginners!

So, stay tuned and keep learning!

But most importantly…

Tech care!
