
Normalization in Deep learning

Deep learning is an exciting field of artificial intelligence and is at the forefront of areas such as computer vision, reinforcement learning, and natural language processing. Its complex architectures, however, come with problems of their own. Deep neural networks have many layers, and they can be difficult to train because they are sensitive to the initial random weights and to the configuration of the learning algorithm.

Some input features can also dominate training simply because they have large numerical values. This biases the network, because those features end up contributing disproportionately to the outcome. For example, suppose feature one takes values between 1 and 5, while feature two takes values between 100 and 10000. Because of this difference in scale, feature two would dominate the weighted sums computed by the network, and feature one would contribute very little to the model's output.
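As a minimal sketch of how rescaling removes this imbalance, the snippet below standardizes two features with the ranges from the example (z-score scaling: subtract the mean, then divide by the standard deviation). The data values and the helper name `standardize` are illustrative assumptions, and z-scoring is only one common choice; min-max scaling is another.

```python
import math


def standardize(column):
    """Rescale a list of numbers to zero mean and unit (population) standard deviation."""
    mean = sum(column) / len(column)
    var = sum((x - mean) ** 2 for x in column) / len(column)
    std = math.sqrt(var)
    return [(x - mean) / std for x in column]


# Hypothetical toy data: feature one spans 1-5, feature two spans 100-10000.
feature_one = [1.0, 2.0, 3.0, 4.0, 5.0]
feature_two = [100.0, 2575.0, 5050.0, 7525.0, 10000.0]

scaled_one = standardize(feature_one)
scaled_two = standardize(feature_two)
print([round(x, 3) for x in scaled_one])
print([round(x, 3) for x in scaled_two])
```

Because both toy columns happen to be evenly spaced, they map to the same standardized values (roughly -1.41 to 1.41), so neither feature dominates merely because of its units.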

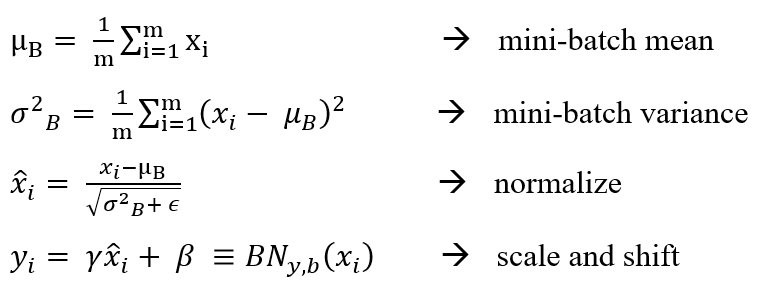

Batch normalization is the most common form of normalization in deep learning. During training, it standardizes the inputs to a layer over every mini-batch so that each feature has approximately zero mean and unit variance, and then applies a learnable scale and shift. This tends to stabilize the learning process and can substantially reduce the number of epochs needed to train deep networks, allowing them to train faster.
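The per-feature computation can be written compactly. For a mini-batch of $m$ values $x_1, \dots, x_m$ of a single feature, the commonly used formulation is shown below, where $\epsilon$ is a small constant for numerical stability and $\gamma$ and $\beta$ are a learnable per-feature scale and shift:

```latex
\begin{aligned}
\mu_B &= \frac{1}{m} \sum_{i=1}^{m} x_i \\
\sigma_B^2 &= \frac{1}{m} \sum_{i=1}^{m} \left(x_i - \mu_B\right)^2 \\
\hat{x}_i &= \frac{x_i - \mu_B}{\sqrt{\sigma_B^2 + \epsilon}} \\
y_i &= \gamma \, \hat{x}_i + \beta
\end{aligned}
```

With $\gamma = 1$ and $\beta = 0$ the output is just the standardized value $\hat{x}_i$; because $\gamma$ and $\beta$ are learned, the network can also choose other values, including $\gamma = \sqrt{\sigma_B^2 + \epsilon}$ and $\beta = \mu_B$, which undo the normalization.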

Batch normalization works by computing the mean and variance of every feature over the mini-batch. The mean is then subtracted from each feature, and the result is divided by the mini-batch standard deviation (in practice, a small constant is added to the variance first to avoid dividing by zero).
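The steps above can be sketched in plain Python as a training-mode forward pass. This is an illustrative sketch, not a library implementation: the function name `batch_norm`, the list-of-lists layout (samples × features), the default `eps=1e-5`, and the toy mini-batch are hypothetical, and the learnable `gamma`/`beta` parameters follow the common formulation rather than anything stated in this article.

```python
import math


def batch_norm(batch, gamma, beta, eps=1e-5):
    """Training-mode batch normalization forward pass for a 2-D mini-batch.

    batch: list of m samples, each a list of d feature values.
    gamma, beta: hypothetical per-feature scale and shift (lists of length d).
    Returns a new mini-batch with the same shape.
    """
    m = len(batch)
    d = len(batch[0])
    out = [[0.0] * d for _ in range(m)]
    for j in range(d):
        column = [row[j] for row in batch]
        mean = sum(column) / m                          # per-feature mini-batch mean
        var = sum((x - mean) ** 2 for x in column) / m  # biased (population) variance
        inv_std = 1.0 / math.sqrt(var + eps)            # eps guards against division by zero
        for i in range(m):
            x_hat = (batch[i][j] - mean) * inv_std      # ~zero mean, ~unit variance
            out[i][j] = gamma[j] * x_hat + beta[j]      # learnable scale and shift
    return out


# Hypothetical mini-batch of 4 samples with two very differently scaled features.
batch = [
    [1.0, 100.0],
    [2.0, 4000.0],
    [4.0, 7000.0],
    [5.0, 10000.0],
]
normalized = batch_norm(batch, gamma=[1.0, 1.0], beta=[0.0, 0.0])
for row in normalized:
    print([round(v, 3) for v in row])
```

After the call, both columns have approximately zero mean and unit variance despite their very different original ranges. At inference time, frameworks typically replace the mini-batch statistics with running averages collected during training; this sketch covers only the training-mode computation.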

You can find more in the following article: Normalization in Deep learning.
