
Anonymize your data using Amazon S3 Object Lambda

Anonymization and pseudonymization are two techniques commonly used to protect data. In both cases, you want to remove the ability to identify someone and, more importantly, the link to their personal information (financial, health, preferences…), while keeping the data practically useful. Anonymization consists of removing any direct (and some indirect) identifying data. Pseudonymization does not remove this information but modifies it so that it can no longer be linked to the original individual.
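To make the distinction concrete, here is a minimal sketch on a single record. The record, the field names and the keyed-hash alias are assumptions chosen for illustration; they are not the approach used later in this article, which relies on fake names instead.

```python
import hashlib
import hmac

# Hypothetical record, for illustration only.
RECORD = {"Fullname": "Jane Doe", "Birthdate": "1980-05-17", "Disease": "Asthma"}
DIRECT_IDENTIFIERS = {"Fullname", "Birthdate"}


def anonymize_record(record):
    """Anonymization: drop the identifying fields entirely."""
    return {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}


def pseudonymize_record(record, secret):
    """Pseudonymization: keep the fields but replace their values.

    The name becomes a stable alias derived from a keyed hash, and the
    birthdate is reduced to the birth year.
    """
    result = dict(record)
    digest = hmac.new(secret, record["Fullname"].encode("utf-8"), hashlib.sha256)
    result["Fullname"] = "patient-" + digest.hexdigest()[:8]
    result["Birthdate"] = record["Birthdate"][:4]
    return result
```

With anonymization the name is gone for good; with pseudonymization the same input always maps to the same alias, so records about one person can still be grouped without revealing who that person is.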

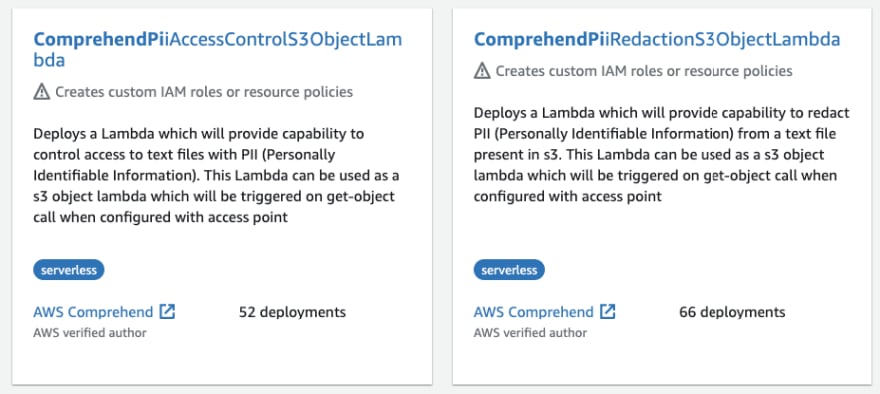

Multiple papers, algorithms (such as k-anonymity) and techniques exist to perform anonymization and pseudonymization. AWS also provides two functions, available in the Serverless Application Repository (SAR), that use Amazon Comprehend and its ability to detect PII:

On my side, as the input file is pretty straightforward, I don’t need Comprehend to detect sensitive information.

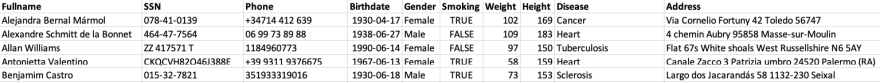

Here is my (naive) approach:

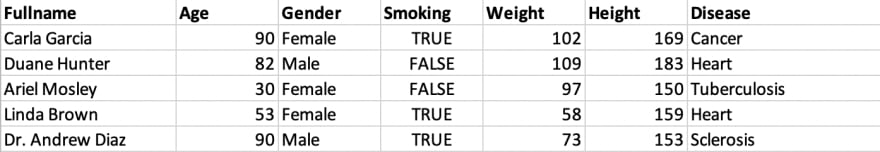

After this process, we should end up with the following information, free of any identifying information (names have been replaced):

Now that we know what we want to do, let’s see it in the context of our workload.

We have 3 main components in our workload:

To provide anonymized data to these applications, we have several options:

Both options add complexity and cost. This is where I introduce S3 Object Lambda, a capability recently announced by AWS that will act as this proxy, except that you don’t have to manage any infrastructure, just your Lambda function(s).

Let’s implement this solution. First thing to do is to create a Lambda function. To do so, use your preferred framework (SAM, Serverless, CDK, …). I use SAM and my function is in Python 3.8.
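Before writing the handler, it helps to see which parts of the event the function actually needs. The sketch below uses only the keys read by the function in this article (`xAmzRequestId`, `getObjectContext`, `inputS3Url`, `outputRoute`, `outputToken`); the values in `SAMPLE_EVENT` are placeholders, and the helper name `parse_get_object_context` is hypothetical.

```python
from typing import NamedTuple

# Placeholder values: a real event carries a presigned URL and opaque tokens.
SAMPLE_EVENT = {
    "xAmzRequestId": "example-request-id",
    "getObjectContext": {
        "inputS3Url": "https://example.invalid/patients.csv?X-Amz-Signature=placeholder",
        "outputRoute": "example-route",
        "outputToken": "example-token",
    },
}


class GetObjectContext(NamedTuple):
    input_s3_url: str   # presigned URL to download the original object
    output_route: str   # passed back as RequestRoute
    output_token: str   # passed back as RequestToken


def parse_get_object_context(event: dict) -> GetObjectContext:
    """Extract the three values needed to fetch the object and to answer."""
    ctx = event["getObjectContext"]
    return GetObjectContext(ctx["inputS3Url"], ctx["outputRoute"], ctx["outputToken"])
```

The presigned URL is what lets the function read the original object, while the route and token pair tells S3 which pending request the transformed response belongs to.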

The function must have permission to call WriteGetObjectResponse, in order to provide the response to downstream application(s). Note this is not in the s3 namespace but s3-object-lambda:

```json
{
    "Action": "s3-object-lambda:WriteGetObjectResponse",
    "Resource": "*",
    "Effect": "Allow",
    "Sid": "WriteS3GetObjectResponse"
}
```

And here is the code of my function (commented to understand the details):


```python
"""Anonymization Lambda function."""
import csv
import io
import time
from datetime import date, datetime
from typing import Tuple

import boto3
import requests
from aws_lambda_powertools import Logger
from faker import Faker

s3 = boto3.client('s3')
logger = Logger()
faker = Faker()
today = date.today()


@logger.inject_lambda_context
def handler(event, context):
    """
    Lambda function handler
    """
    logger.set_correlation_id(event["xAmzRequestId"])
    logger.info('Received event with requestId: %s', event["xAmzRequestId"])
    logger.debug(f'Raw event {event}')

    object_get_context = event["getObjectContext"]
    request_route = object_get_context["outputRoute"]
    request_token = object_get_context["outputToken"]
    s3_url = object_get_context["inputS3Url"]

    # Get original object (not anonymized) from S3
    time1 = time.time()
    original_object = download_file_from_s3(s3_url)
    time2 = time.time()
    logger.debug(f"Downloaded original file in {(time2 - time1)} seconds")

    # Anonymize
    time1 = time.time()
    rows, anonymized_object = anonymize(original_object)
    time2 = time.time()
    logger.debug(f"Anonymized the file ({rows} records) in {(time2 - time1)} seconds")

    # Send response back to requester
    time1 = time.time()
    s3.write_get_object_response(
        Body=anonymized_object,
        RequestRoute=request_route,
        RequestToken=request_token)
    time2 = time.time()
    logger.debug(f"Sending anonymized file in {(time2 - time1)} seconds")

    return {'status_code': 200}


def download_file_from_s3(presigned_url):
    """
    Download the file from a S3's presigned url.
    Python AWS-SDK doesn't provide any method to download from a presigned url directly
    So we have to make a simple GET httpcall using requests.
    """
    logger.debug(f"Downloading object with presigned url {presigned_url}")
    response = requests.get(presigned_url)
    if response.status_code != 200:
        logger.error("Failed to download original file from S3")
        raise Exception(f"Failed to download original file from S3, error {response.status_code}")
    return response.content.decode('utf-8-sig')


def filter_columns(reader, keys):
    """
    Select only columns we want to keep in the final result,
    the ones useful for the final client and not containing identifying information
    """
    for r in reader:
        yield dict((k, r[k]) for k in keys)


def anonymize(original_object) -> Tuple[int, str]:
    """
    Read through the original CSV object and perform anonymization:
    - Select only columns we want to keep
    - Perform some pseudonymization on Name / Birthdate
    Write the new CSV object
    """
    logger.debug("Anonymizing object")
    reader = csv.DictReader(io.StringIO(original_object))
    input_selected_fieldnames = ['Fullname', 'Birthdate', 'Gender', 'Smoking', 'Weight', 'Height', 'Disease']
    output_selected_fieldnames = input_selected_fieldnames.copy()
    output_selected_fieldnames.remove('Birthdate')
    output_selected_fieldnames.insert(1, 'Age')

    transformed_object = ''
    with io.StringIO() as output:
        writer = csv.DictWriter(output, fieldnames=output_selected_fieldnames, quoting=csv.QUOTE_NONE)
        writer.writeheader()
        rows = 0
        for row in filter_columns(reader, input_selected_fieldnames):
            writer.writerow(pseudonymize_row(row))
            rows += 1
        transformed_object = output.getvalue()

    return rows, transformed_object


def pseudonymize_row(row):
    """
    Replace some identifying information with others:
    - Fake name
    - Birthdate is replaced with the age
    """
    anonymized_row = row.copy()

    # using Faker (https://faker.readthedocs.io/en/master/), we generate fake names
    if anonymized_row['Gender'] == 'Female':
        anonymized_row['Fullname'] = faker.name_female()
    else:
        anonymized_row['Fullname'] = faker.name_male()

    del anonymized_row['Birthdate']
    birthdate = datetime.strptime(row['Birthdate'], '%Y-%m-%d')
    age = today.year - birthdate.year - ((today.month, today.day) < (birthdate.month, birthdate.day))
    anonymized_row['Age'] = age

    return anonymized_row
```
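The age computation in `pseudonymize_row` is compact, so here it is in isolation. It relies on tuple comparison: `(month, day)` of today is compared with `(month, day)` of the birthdate, and one year is subtracted if the birthday has not happened yet this year. The helper name `age_on` and the explicit `today` parameter are assumptions added so the logic can be checked with fixed dates.

```python
from datetime import date


def age_on(birthdate: date, today: date) -> int:
    """Return the age in full years on the given day."""
    # True when this year's birthday is still ahead of us.
    birthday_pending = (today.month, today.day) < (birthdate.month, birthdate.day)
    return today.year - birthdate.year - (1 if birthday_pending else 0)


birthdate = date(1980, 5, 17)
print(age_on(birthdate, date(2021, 5, 16)))  # 40, the day before the birthday
print(age_on(birthdate, date(2021, 5, 17)))  # 41, on the birthday itself
```

Passing `today` explicitly also avoids computing the date once at module load, which in a long-lived Lambda execution environment could become stale.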

My Lambda function is really simple; if you would like something more production-ready, I encourage you to have a look at the AWS samples mentioned above.
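As one possible step in that direction (a sketch of my own, not taken from the AWS samples), the function could report a failure to the caller instead of only raising an exception. `write_get_object_response` accepts `StatusCode`, `ErrorCode` and `ErrorMessage` for this purpose. The helper name `respond` and the error code `TransformationFailed` are hypothetical, and the S3 client is passed in as a parameter so the logic can be exercised without AWS.

```python
def respond(s3_client, route, token, transform, original_text):
    """Apply `transform` and send either the result or an error to the caller.

    Returns the HTTP status code that was sent back through S3 Object Lambda.
    """
    try:
        body = transform(original_text)
    except Exception as exc:  # broad on purpose: any failure becomes a 500
        s3_client.write_get_object_response(
            RequestRoute=route,
            RequestToken=token,
            StatusCode=500,
            ErrorCode="TransformationFailed",  # hypothetical code name
            ErrorMessage=str(exc),
        )
        return 500

    s3_client.write_get_object_response(
        Body=body,
        RequestRoute=route,
        RequestToken=token,
    )
    return 200
```

In the handler above, this would replace the direct call to `s3.write_get_object_response`, with `anonymize` wrapped so that it returns only the transformed text.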

Once the function is created and deployed, we need to create an Access Point. Amazon S3 Access Points simplify managing data access for applications using shared data sets on S3, exactly what we want to do here. Using the AWS CLI:

```bash
aws s3control create-access-point --account-id 012345678912 --name anonymized-access --bucket my-bucket-with-cid
```

Then we create the Object Lambda Access Point. It will make the Lambda function act as a proxy to your access point. To do so with the AWS CLI, we need a JSON file. Be sure to replace the account ID, region, access point name (previously created) and function ARN with your own:


```json
{
    "SupportingAccessPoint": "arn:aws:s3:eu-central-1:012345678912:accesspoint/anonymized-access",
    "TransformationConfigurations": [{
        "Actions": ["GetObject"],
        "ContentTransformation": {
            "AwsLambda": {
                "FunctionArn": "arn:aws:lambda:eu-central-1:012345678912:function:data-anonymizer-AnonymiserFunction-RTHQH8LO8WN9"
            }
        }
    }]
}
```
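If you would rather not edit the JSON by hand, the same configuration can be generated from the four values that change between environments. This is only a convenience sketch: the function name `build_olap_configuration` is hypothetical, and the structure simply mirrors the file above.

```python
import json


def build_olap_configuration(region, account_id, access_point_name, function_arn):
    """Build the Object Lambda Access Point configuration as a dictionary."""
    supporting_arn = f"arn:aws:s3:{region}:{account_id}:accesspoint/{access_point_name}"
    return {
        "SupportingAccessPoint": supporting_arn,
        "TransformationConfigurations": [{
            "Actions": ["GetObject"],
            "ContentTransformation": {
                "AwsLambda": {"FunctionArn": function_arn},
            },
        }],
    }


config = build_olap_configuration(
    region="eu-central-1",
    account_id="012345678912",
    access_point_name="anonymized-access",
    function_arn="arn:aws:lambda:eu-central-1:012345678912:function:my-function",  # placeholder
)
print(json.dumps(config, indent=4))
```

Redirecting the printed output to `anonymize-lambda-accesspoint.json` gives a file usable with the `--configuration file://` option of the AWS CLI.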

Finally, we create the Object Lambda Access Point using the following command:

```bash
aws s3control create-access-point-for-object-lambda --account-id 012345678912 --name anonymize-lambda-accesspoint --configuration file://anonymize-lambda-accesspoint.json
```

And that’s it! You can now test your access point and the anonymization process with a simple get. Note that you don’t perform a get directly on the S3 bucket, but on the access point previously created, using its ARN, just like that:

```bash
aws s3api get-object --bucket arn:aws:s3-object-lambda:eu-central-1:012345678912:accesspoint/anonymize-lambda-accesspoint --key patients.csv ./anonymized.csv
```

You can now provide this access point ARN to the analytics application so it can retrieve anonymized data and perform whatever it needs to.
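On the application side, reading through the Object Lambda Access Point looks like a regular `get_object` call where the ARN takes the place of the bucket name. The sketch below assumes the consumer is written in Python with boto3; the helper name `fetch_anonymized_rows` is hypothetical, and the client is passed as a parameter so the function does not depend on AWS credentials by itself.

```python
import csv
import io


def fetch_anonymized_rows(s3_client, access_point_arn, key):
    """Download an object through the access point and parse it as CSV rows."""
    response = s3_client.get_object(Bucket=access_point_arn, Key=key)
    text = response["Body"].read().decode("utf-8")
    return list(csv.DictReader(io.StringIO(text)))


# Usage with a real client (requires boto3 and AWS credentials):
#   import boto3
#   arn = ("arn:aws:s3-object-lambda:eu-central-1:012345678912:"
#          "accesspoint/anonymize-lambda-accesspoint")
#   rows = fetch_anonymized_rows(boto3.client("s3"), arn, "patients.csv")
```

The application never sees the bucket name or the original object, only what the Lambda function decides to return.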

In this article, I’ve shared how to leverage S3 Object Lambda in order to anonymize your data. In just a few commands and a bit of code, we can safely share data containing identifying information with other applications without duplicating it or building a complex infrastructure.

Note that you can use the same technology to enrich data (retrieving information from a database), modify it on the fly (e.g. image resizing), or change its format (e.g. XML to JSON, CSV to Parquet, …), and I guess you will find other uses too.
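As an illustration of the format-conversion case, here is a minimal sketch of a transformation that turns a CSV object into JSON. The function name `csv_to_json` is hypothetical; in the Lambda function from this article, it would take the place of `anonymize` before the call to `write_get_object_response`.

```python
import csv
import io
import json


def csv_to_json(original_object: str) -> str:
    """Convert CSV text (with a header row) into a JSON array of objects."""
    reader = csv.DictReader(io.StringIO(original_object))
    return json.dumps([dict(row) for row in reader])
```

All values come out as strings because CSV carries no type information; a real converter would probably cast numeric columns.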

The code for this article is available on GitHub, together with a full SAM template to create everything (bucket, access points and Lambda function).
