
Convolutional Neural Networks for Image Categorization

These are the datasets I used to practice applying convolutional neural networks (CNNs).

Before reaching for transfer learning, try to improve your base CNN models.

If you're studying deep learning, you have almost certainly seen this picture already. This dataset (MNIST) is often used to practice image classification algorithms because it is fairly easy to get good results on. I recommend it as your first dataset if you are new to the field.

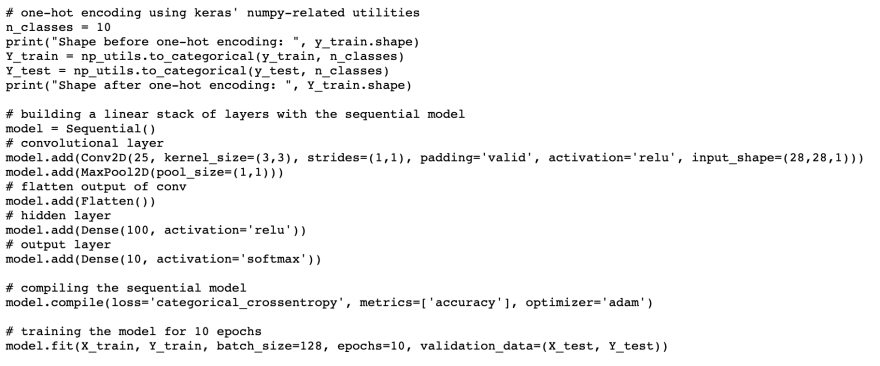

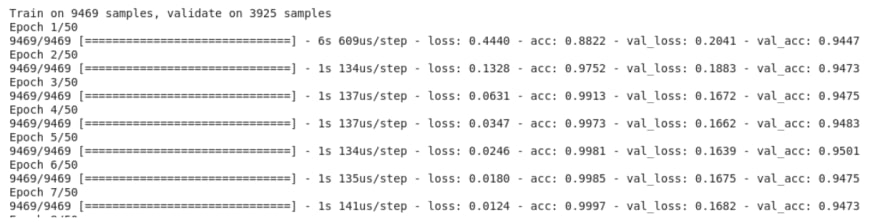

✔️ You can reach a validation accuracy of around 97%. One major advantage of CNNs over fully connected neural networks is that you do not need to flatten the input images to 1D, because convolutional layers work on 2D image data directly. This helps retain the “spatial” properties of the images. I added more convolutional layers and tuned the hyperparameters, which brought the accuracy above 98%.
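To make the point about spatial properties concrete, here is a minimal pure-Python sketch of what a single convolutional filter does. The toy 4×4 image and the 2×2 edge kernel are made up for illustration and are not taken from MNIST or from the models in this post.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (both lists of rows) without padding.

    This is the cross-correlation that deep learning libraries call
    "convolution": multiply overlapping values and sum them.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = 0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


# Toy 4x4 "image": dark left half, bright right half.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hypothetical 2x2 kernel that responds to a dark-to-bright vertical edge.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d_valid(image, kernel)
for row in feature_map:
    print(row)
```

Each row of the result is `[0, 2, 0]`: the filter fires only in the column where the edge sits, so the output still tells you where in the image the pattern was found. A flattened 1D input would not carry that neighbourhood structure explicitly.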

Next comes CIFAR-10: 32×32-pixel color images, with 50,000 training images and 10,000 test images. The images are taken in varying lighting conditions and at different angles, and because they are in color, similar objects can vary a lot in color (for example, the color of ocean water). If you use the simple CNN architecture from the MNIST example above, you will get a low validation accuracy of around 60%. A deeper model with more convolutional layers and dropout does better:

```python
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Conv2D, MaxPool2D, Dropout, Flatten, Dense

model = Sequential()

# first convolutional layer; input is a 32x32 RGB image
model.add(Conv2D(50, kernel_size=(3,3), strides=(1,1), padding='same', activation='relu', input_shape=(32, 32, 3)))

# second convolutional layer, then downsample and regularize
model.add(Conv2D(75, kernel_size=(3,3), strides=(1,1), padding='same', activation='relu'))
model.add(MaxPool2D(pool_size=(2,2)))
model.add(Dropout(0.25))

# third convolutional layer, then downsample and regularize
model.add(Conv2D(125, kernel_size=(3,3), strides=(1,1), padding='same', activation='relu'))
model.add(MaxPool2D(pool_size=(2,2)))
model.add(Dropout(0.25))

# flatten the output of the convolutional part
model.add(Flatten())

# hidden layers
model.add(Dense(500, activation='relu'))
model.add(Dropout(0.4))
model.add(Dense(250, activation='relu'))
model.add(Dropout(0.3))

# output layer: one unit per class
model.add(Dense(10, activation='softmax'))
```
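To see what this architecture does to the data, the sketch below traces the tensor shape and the parameter count through each layer using plain arithmetic. The helper names (`conv_same`, `max_pool`, `dense`) are made up for this illustration and are not Keras functions. The sketch assumes 3×3 kernels with `padding='same'` and stride 1, as in the model above.

```python
def conv_same(shape, filters, kernel=3):
    """Conv2D with padding='same', stride 1: height and width stay the same."""
    h, w, c = shape
    params = kernel * kernel * c * filters + filters  # weights + biases
    return (h, w, filters), params


def max_pool(shape, pool=2):
    """MaxPool2D with a 2x2 window: halves height and width, no parameters."""
    h, w, c = shape
    return (h // pool, w // pool, c), 0


def dense(units_in, units_out):
    """Fully connected layer: one weight per input/output pair, plus biases."""
    return units_out, units_in * units_out + units_out


# Dropout layers are skipped: they change neither the shape nor the count.
shape, total_params = (32, 32, 3), 0
conv_part = [
    ("conv 50", lambda s: conv_same(s, 50)),
    ("conv 75", lambda s: conv_same(s, 75)),
    ("max pool", max_pool),
    ("conv 125", lambda s: conv_same(s, 125)),
    ("max pool", max_pool),
]
for name, layer in conv_part:
    shape, params = layer(shape)
    total_params += params
    print(f"{name:10s} -> {shape}, params: {params}")

# Flatten turns the (height, width, channels) volume into one vector.
flat_units = shape[0] * shape[1] * shape[2]
print(f"{'flatten':10s} -> {flat_units}")

units = flat_units
for units_out in (500, 250, 10):
    units, params = dense(units, units_out)
    total_params += params
    print(f"{'dense':10s} -> {units}, params: {params}")

print("total trainable parameters:", total_params)
```

The total comes to 4,247,985 parameters, of which 4,000,500 sit in the first `Dense` layer after `Flatten`. That is one reason the dropout rate there is the highest in the model.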

✔️ In conclusion, you can see that the accuracy increases with this deeper model. CIFAR-100 is also available in Keras if you want further practice.
