29

Building ETL Pipelines in Python: Part 1

Data engineering refers to the development of software that performs three tasks:

The process is complex involving numerous software tools and advanced technical expertise. For one thing is can be challenging and overwhelming to identify the starting point and resources from which to acquire the basic knowledge. No wonder the demand for data engineers in the technology sector has risen by an order of magnitude in the past years. As the need for data generation grows, the thirst for data engineers will continue to intensify.

This post, part 1 of a three-part tutorial shows you how to build a simple ETL[extract,transform,and load] pipeline with CSV files in Python, describes the extraction steps.

Software Requirements

You will need the following software:

glob, a package that is preinstalled that searches for files in relative paths.

For details, see this section for documentation.

pandas, a package used for data manipulation. To install, type on the command line, pip install pandas.

For details, see the pandas site.

virtualenvwrapper, a package that sets up virtual environments for Python. For details, see the related documentation.

This Kaggle dataset for the CSV data.

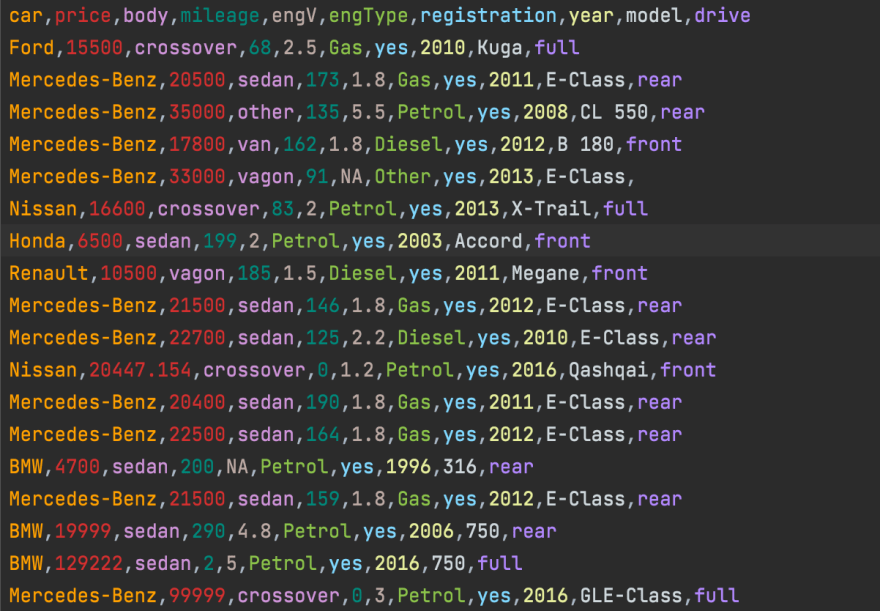

Here's an example of CSV data on car sales:

The Procedure:

Create a project called etl_car_sales with PyCharm.

Create a virtual environment with the command line mkvirtualenv etl_car_sales.

Install pandas and virtualenwrapper.

Extract the zip file and move the CSV files for car_sales to your etl_car_sales directory, like this:

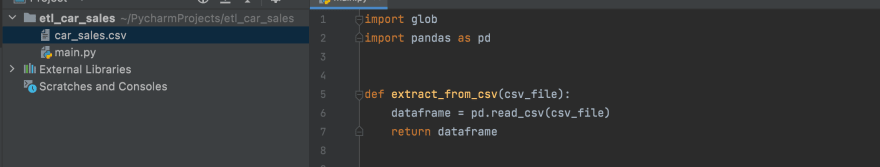

extract_from_csv function to read the CSV file

in your main directory and then return a Dataframe with the pandas function read_csv.

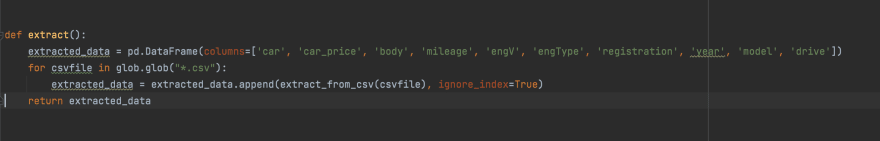

extract function to read the data from the extracted csv file and append the data to the Dataframe that

is being return the extract_from_csv function.

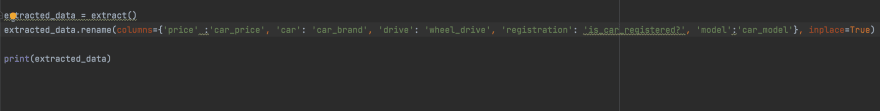

Tip: To rename the columns, do the following:

extracted_data variable and the result would read like this:

Part 2 will describe how to transform data in the ETL pipeline. Stay Tuned.

29