
TF-Agents Tutorial

Reinforcement learning (RL) is a general framework where agents learn to perform actions in an environment so as to maximize a reward. The two main components are the environment, which represents the problems to be solved, and the agent, which represents the learning algorithm.

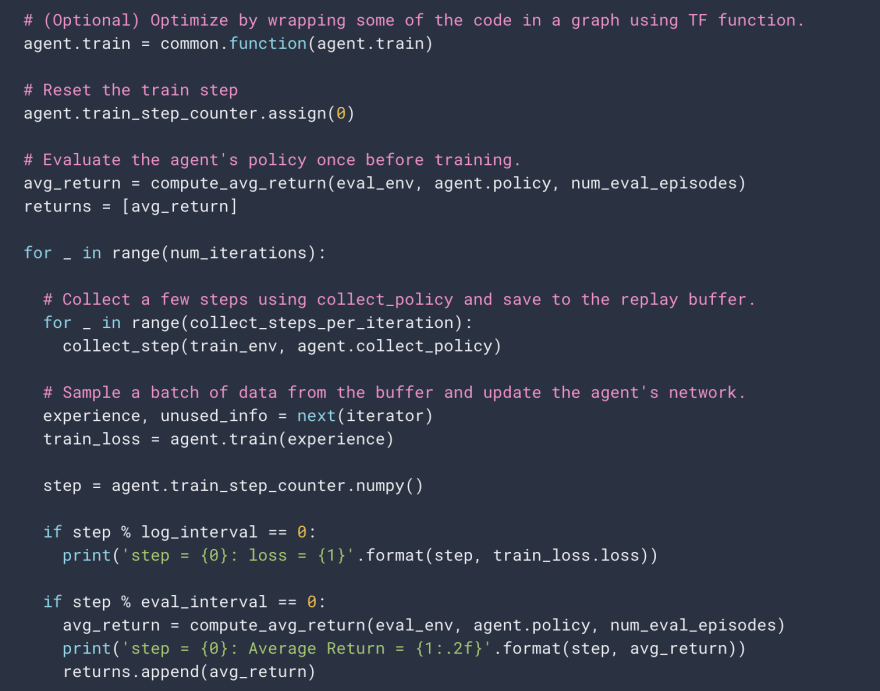

The agent and environment continuously interact with each other. At each time step $t$, the agent takes an action $a_t$ on the environment based on its policy $\pi(a_t \mid s_t)$, where $s_t$ is the current observation from the environment, and receives a reward $r_{t+1}$ and the next observation $s_{t+1}$ from the environment. The goal is to improve the policy so as to maximize the sum of rewards (the return).
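The interaction loop described above can be sketched in plain Python. This is a minimal illustration, not TF-Agents code: `ToyEnv` (a hypothetical environment that pays a reward of 1.0 per step and ends after a fixed number of steps) and `random_policy` (a stand-in policy that ignores the observation) are assumptions made for the example. The loop accumulates the sum of rewards that the agent is trying to maximize.

```python
import random


class ToyEnv:
    """Hypothetical environment: reward 1.0 per step, episode ends after max_steps."""

    def __init__(self, max_steps=5):
        self.max_steps = max_steps
        self.t = 0

    def reset(self):
        self.t = 0
        return 0.0  # initial observation

    def step(self, action):
        self.t += 1
        observation = float(self.t)  # next observation
        reward = 1.0                 # reward for this step
        done = self.t >= self.max_steps
        return observation, reward, done


def random_policy(observation, actions=(0, 1)):
    """Stand-in for the policy: ignores the observation and picks an action uniformly."""
    return random.choice(actions)


def run_episode(env, policy):
    """Run one episode and return the sum of rewards (the return)."""
    observation = env.reset()
    total_reward = 0.0
    done = False
    while not done:
        action = policy(observation)                  # agent acts on the environment
        observation, reward, done = env.step(action)  # environment responds
        total_reward += reward
    return total_reward


print(run_episode(ToyEnv(max_steps=5), random_policy))  # 5.0
```

A learning agent would replace `random_policy` with a policy that is updated from the observed rewards; the loop itself stays the same.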

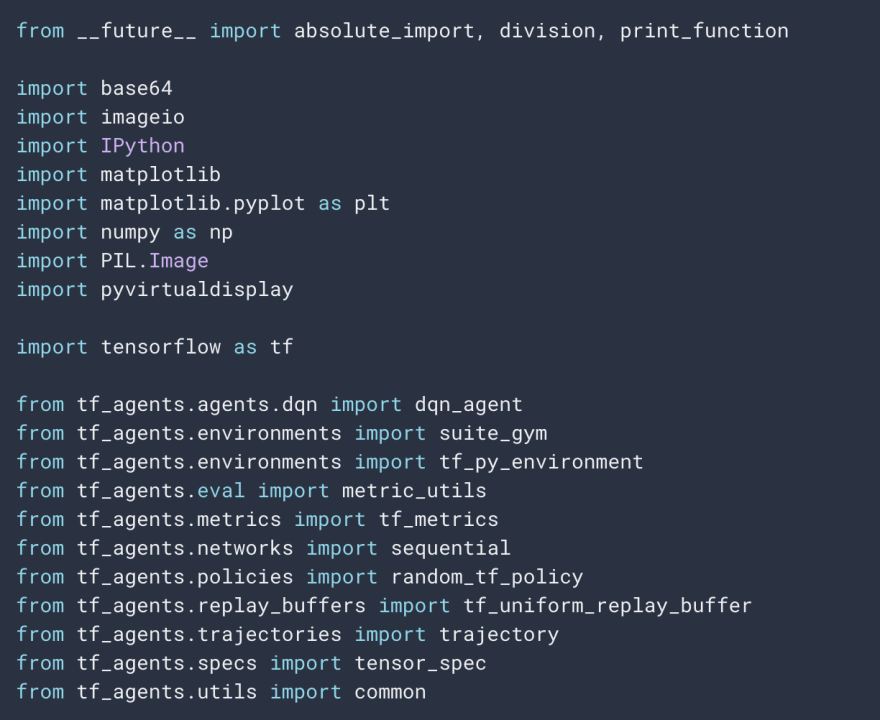

TF-Agents makes designing, implementing, and testing new RL algorithms easier by providing well-tested modular components that can be modified and extended. It enables fast code iteration, with good test integration and benchmarking.

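The Cartpole environment is one of the most well-known classic RL problems. A pole is attached to a cart that moves along a track, and the goal is to keep the pole upright by pushing the cart left or right. An episode ends when any of the following happens: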

- the pole tips over some angle limit

- the cart moves outside of the world edges

- 200 time steps pass.
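The three termination conditions can be expressed as a single predicate. The angle and position thresholds below are assumptions taken from the classic Gym CartPole implementation (a pole angle of ±12 degrees and a cart position of ±2.4 units); the text above only says "some angle limit" and "the world edges". `episode_done` is a hypothetical helper name.

```python
import math

# Assumed thresholds, matching the classic Gym CartPole settings.
THETA_LIMIT_RADIANS = 12 * 2 * math.pi / 360  # about 0.2094 rad (12 degrees)
X_LIMIT = 2.4                                  # world edge for the cart position
MAX_STEPS = 200                                # time-step limit per episode


def episode_done(x, theta, step_count):
    """Return True if any Cartpole termination condition holds."""
    pole_fell = abs(theta) > THETA_LIMIT_RADIANS  # pole tipped past the angle limit
    cart_out = abs(x) > X_LIMIT                    # cart left the world edges
    time_up = step_count >= MAX_STEPS              # 200 time steps have passed
    return pole_fell or cart_out or time_up


print(episode_done(x=0.0, theta=0.0, step_count=10))   # False
print(episode_done(x=2.5, theta=0.0, step_count=10))   # True (cart out of bounds)
print(episode_done(x=0.0, theta=0.3, step_count=10))   # True (pole fell)
print(episode_done(x=0.0, theta=0.0, step_count=200))  # True (time limit)
```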

The DQN (Deep Q-Network) algorithm was developed by DeepMind in 2015. It was able to solve a wide range of Atari games (some to superhuman level) by combining reinforcement learning and deep neural networks at scale. The algorithm was developed by enhancing a classic RL algorithm called Q-Learning with deep neural networks and a technique called experience replay.
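The two ingredients named above, experience replay and the Q-learning target, can be sketched without any deep learning library. This is a simplified illustration under stated assumptions: `ReplayBuffer` and `q_learning_target` are hypothetical names, not TF-Agents APIs, and the Q-values are passed in as plain lists instead of being produced by a neural network. The network is trained to move $Q(s_t, a_t)$ toward the target $r_{t+1} + \gamma \max_{a'} Q(s_{t+1}, a')$, where $s_t$ and $a_t$ are the current state and action, $r_{t+1}$ is the reward, and $\gamma$ is an assumed discount factor; the bootstrap term is dropped when the episode ends. The 2015 DQN work also computes the max term with a separate, periodically updated target network, which this sketch omits.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size store of transitions; the oldest entries are evicted first."""

    def __init__(self, capacity):
        self.buffer = deque(maxlen=capacity)

    def add(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Uniform sampling breaks the correlation between consecutive steps.
        return random.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


def q_learning_target(reward, next_q_values, done, gamma=0.99):
    """Compute r + gamma * max_a' Q(s', a'); no bootstrap on terminal steps."""
    if done:
        return reward
    return reward + gamma * max(next_q_values)


buffer = ReplayBuffer(capacity=3)
for step in range(5):
    buffer.add(state=step, action=step % 2, reward=1.0, next_state=step + 1, done=False)
print(len(buffer))  # 3 (only the 3 newest transitions are kept)

batch = buffer.sample(2)
print(len(batch))  # 2

print(q_learning_target(1.0, [1.0, 4.0], done=False, gamma=0.5))  # 3.0
print(q_learning_target(1.0, [1.0, 4.0], done=True))              # 1.0
```

Sampling past transitions at random, rather than learning only from the latest one, lets each transition be reused many times and keeps training batches from being dominated by a single stretch of an episode.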

In the Cartpole environment:

- observation is an array of 4 floats:

    - the position and velocity of the cart

    - the angular position and velocity of the pole

- reward is a scalar float value

- action is a scalar integer with only two possible values:

    - 0: "move left"

    - 1: "move right"
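To make the observation, reward, and action specifications above concrete, here is a minimal pure-Python Cartpole step function. The tutorial does not show the environment's internals, so the physics constants, the explicit Euler integration, and the reward of 1.0 per step are assumptions that follow the classic Gym CartPole implementation; `reset` and `step` are hypothetical helper names, not a library API.

```python
import math
import random

# Assumed physics constants, following the classic Gym CartPole implementation.
GRAVITY = 9.8
MASS_CART = 1.0
MASS_POLE = 0.1
TOTAL_MASS = MASS_CART + MASS_POLE
HALF_POLE_LENGTH = 0.5
POLE_MASS_LENGTH = MASS_POLE * HALF_POLE_LENGTH
FORCE_MAG = 10.0
TAU = 0.02  # seconds between state updates

MOVE_LEFT, MOVE_RIGHT = 0, 1  # the only two valid actions


def reset(seed=None):
    """Return a 4-float observation [x, x_dot, theta, theta_dot] near zero."""
    rng = random.Random(seed)
    return [rng.uniform(-0.05, 0.05) for _ in range(4)]


def step(observation, action):
    """Advance one time step; return (next_observation, reward)."""
    if action not in (MOVE_LEFT, MOVE_RIGHT):
        raise ValueError("action must be 0 (move left) or 1 (move right)")
    x, x_dot, theta, theta_dot = observation
    force = FORCE_MAG if action == MOVE_RIGHT else -FORCE_MAG
    cos_t, sin_t = math.cos(theta), math.sin(theta)

    # Cart-pole equations of motion (angular and linear accelerations).
    temp = (force + POLE_MASS_LENGTH * theta_dot ** 2 * sin_t) / TOTAL_MASS
    theta_acc = (GRAVITY * sin_t - cos_t * temp) / (
        HALF_POLE_LENGTH * (4.0 / 3.0 - MASS_POLE * cos_t ** 2 / TOTAL_MASS)
    )
    x_acc = temp - POLE_MASS_LENGTH * theta_acc * cos_t / TOTAL_MASS

    # Explicit Euler integration of position, velocity, angle, angular velocity.
    next_observation = [
        x + TAU * x_dot,
        x_dot + TAU * x_acc,
        theta + TAU * theta_dot,
        theta_dot + TAU * theta_acc,
    ]
    reward = 1.0  # scalar float; assumed +1 per time step, as in Gym's CartPole
    return next_observation, reward


obs = reset(seed=0)
obs, reward = step(obs, MOVE_RIGHT)
print(len(obs), reward)  # 4 1.0
```

Pushing right from rest accelerates the cart to the right and makes the pole start to tip the other way, which is why a good policy has to alternate between the two actions.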

Running this example takes about 7 minutes.
