24

Errors and suspicious code fragments in .NET 6 sources

The .NET 6 turned out to be much-awaited and major release. If you write for .NET, you could hardly miss such an event. We also couldn't pass by the new version of this platform. We decided to check what interesting things we can find in the sources of .NET libraries.

I took the sources from the branch of .NET 6 release on GitHub. This article covers suspicious places only from the libraries (those that lies in src/libraries). I didn't analyze the runtime itself - maybe next time. :)

I checked the code with the PVS-Studio static analyzer. As you probably guessed from this article, PVS-Studio 7.16 supports the analysis of projects on .NET 6. You can read more about new enhancements of the current release here. The PVS-Studio C# analyzer for Linux and macOS now works on .NET 6 as well.

Over the year, PVS-Studio significantly expanded the functionality of the C# analyzer. In addition to the support of the .NET 6 platform, we added the plugin for Visual Studio 2022 and new security-diagnostics. Besides, we also optimized the C# analyzer's performance for large projects.

But you came here to read about .NET 6, didn't you? Let's not waste time.

This section includes various interesting code fragments that I could not group together into common category.

Issue 1

Let's start with something simple.

public enum CompressionLevel

{

Optimal,

Fastest,

NoCompression,

SmallestSize

}

internal static void GetZipCompressionMethodFromOpcCompressionOption(

CompressionOption compressionOption,

out CompressionLevel compressionLevel)

{

switch (compressionOption)

{

case CompressionOption.NotCompressed:

{

compressionLevel = CompressionLevel.NoCompression;

}

break;

case CompressionOption.Normal:

{

compressionLevel = CompressionLevel.Optimal; // <=

}

break;

case CompressionOption.Maximum:

{

compressionLevel = CompressionLevel.Optimal; // <=

}

break;

case CompressionOption.Fast:

{

compressionLevel = CompressionLevel.Fastest;

}

break;

case CompressionOption.SuperFast:

{

compressionLevel = CompressionLevel.Fastest;

}

break;

// fall-through is not allowed

default:

{

Debug.Fail("Encountered an invalid CompressionOption enum value");

goto case CompressionOption.NotCompressed;

}

}

}PVS-Studio warning: V3139 Two or more case-branches perform the same actions. ZipPackage.cs 402

In fact, this method performs mapping from CompressionOption to CompressionLevel. The suspicious thing here is that the CompressionOption.Normal and CompressionOption.Maximum values are mapped to the CompressionLevel.Optimal value.

Probably CompressionOption.Maximum should match CompressionLevel.SmallestSize.

Issue 2

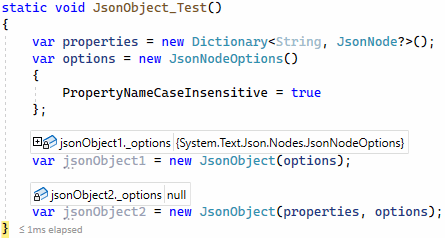

Now let's practice a little. Let's take the System.Text.Json.Nodes.JsonObject for our experiments. If you wish, you can repeat the described operations using the release version of .NET 6 SDK.

The JsonObject type has 2 constructors: one constructor accepts only options, the other - properties and options. Well, it's clear what kind of behavior we should expect from them. Documentation is available here.

Let's create two instances of the JsonObject type and use each of the constructors.

static void JsonObject_Test()

{

var properties = new Dictionary<String, JsonNode?>();

var options = new JsonNodeOptions()

{

PropertyNameCaseInsensitive = true

};

var jsonObject1 = new JsonObject(options);

var jsonObject2 = new JsonObject(properties, options);

}Now let's check the state of the objects we created.

The jsonObject1 state is expected, but the jsonObject2 object state is not. Why the null value is written in the _options field? It's a little confusing. Well, let's open the source code and look at these constructors.

public sealed partial class JsonObject : JsonNode

{

....

public JsonObject(JsonNodeOptions? options = null) : base(options) { }

public JsonObject(IEnumerable<KeyValuePair<string, JsonNode?>> properties,

JsonNodeOptions? options = null)

{

foreach (KeyValuePair<string, JsonNode?> node in properties)

{

Add(node.Key, node.Value);

}

}

....

}In the second constructor, the options parameter is simply abandoned - it is not passed anywhere and is not used in any way. Whereas in the first constructor, options are passed to the base class constructor, where they are written to the field:

internal JsonNode(JsonNodeOptions? options = null)

{

_options = options;

}The corresponding PVS-Studio warning: V3117 Constructor parameter 'options' is not used. JsonObject.cs 35

Issue 3

If we talk about the forgotten parameters, there was another interesting fragment.

public class ServiceNameCollection : ReadOnlyCollectionBase

{

....

private ServiceNameCollection(IList list, string serviceName)

: this(list, additionalCapacity: 1)

{ .... }

private ServiceNameCollection(IList list, IEnumerable serviceNames)

: this(list, additionalCapacity: GetCountOrOne(serviceNames))

{ .... }

private ServiceNameCollection(IList list, int additionalCapacity)

{

Debug.Assert(list != null);

Debug.Assert(additionalCapacity >= 0);

foreach (string? item in list)

{

InnerList.Add(item);

}

}

....

}PVS-Studio warning: V3117 Constructor parameter 'additionalCapacity' is not used. ServiceNameCollection.cs 46

According to code, the additionalCapacity parameter of the last constructor is checked in Debug.Assert and not used for anything else. It looks suspicious. It's especially amusing - other constructors pass some values for additionalCapacity parameter.

Issue 4

Here's the test for the ability of foresight (oops, spoilers). Study the following code and try to guess what triggered the analyzer.

public override void CheckErrors()

{

throw new XsltException(SR.Xslt_InvalidXPath,

new string[] { Expression },

_baseUri,

_linePosition,

_lineNumber,

null);

}It would seem that an exception is simply thrown. To understand what is wrong here, you need to look at the XsltException constructor.

internal XsltException(string res,

string?[] args,

string? sourceUri,

int lineNumber,

int linePosition,

Exception? inner) : base(....)

{ .... }If you compare the order of arguments and parameters, it becomes clear what triggered the analyzer. It looks like the line position and the line number switched places.

Order of arguments:

Order of parameters:

PVS-Studio warning: V3066 Possible incorrect order of arguments passed to 'XsltException' constructor: '_linePosition' and '_lineNumber'. Compiler.cs 1187

Issue 5

Here is sufficiently large piece of code. There must be some kind of typo hidden there... Would you like to try to find it?

public Parser(Compilation compilation,

in JsonSourceGenerationContext sourceGenerationContext)

{

_compilation = compilation;

_sourceGenerationContext = sourceGenerationContext;

_metadataLoadContext = new MetadataLoadContextInternal(_compilation);

_ilistOfTType = _metadataLoadContext.Resolve(

SpecialType.System_Collections_Generic_IList_T);

_icollectionOfTType = _metadataLoadContext.Resolve(

SpecialType.System_Collections_Generic_ICollection_T);

_ienumerableOfTType = _metadataLoadContext.Resolve(

SpecialType.System_Collections_Generic_IEnumerable_T);

_ienumerableType = _metadataLoadContext.Resolve(

SpecialType.System_Collections_IEnumerable);

_listOfTType = _metadataLoadContext.Resolve(typeof(List<>));

_dictionaryType = _metadataLoadContext.Resolve(typeof(Dictionary<,>));

_idictionaryOfTKeyTValueType = _metadataLoadContext.Resolve(

typeof(IDictionary<,>));

_ireadonlyDictionaryType = _metadataLoadContext.Resolve(

typeof(IReadOnlyDictionary<,>));

_isetType = _metadataLoadContext.Resolve(typeof(ISet<>));

_stackOfTType = _metadataLoadContext.Resolve(typeof(Stack<>));

_queueOfTType = _metadataLoadContext.Resolve(typeof(Queue<>));

_concurrentStackType = _metadataLoadContext.Resolve(

typeof(ConcurrentStack<>));

_concurrentQueueType = _metadataLoadContext.Resolve(

typeof(ConcurrentQueue<>));

_idictionaryType = _metadataLoadContext.Resolve(typeof(IDictionary));

_ilistType = _metadataLoadContext.Resolve(typeof(IList));

_stackType = _metadataLoadContext.Resolve(typeof(Stack));

_queueType = _metadataLoadContext.Resolve(typeof(Queue));

_keyValuePair = _metadataLoadContext.Resolve(typeof(KeyValuePair<,>));

_booleanType = _metadataLoadContext.Resolve(SpecialType.System_Boolean);

_charType = _metadataLoadContext.Resolve(SpecialType.System_Char);

_dateTimeType = _metadataLoadContext.Resolve(SpecialType.System_DateTime);

_nullableOfTType = _metadataLoadContext.Resolve(

SpecialType.System_Nullable_T);

_objectType = _metadataLoadContext.Resolve(SpecialType.System_Object);

_stringType = _metadataLoadContext.Resolve(SpecialType.System_String);

_dateTimeOffsetType = _metadataLoadContext.Resolve(typeof(DateTimeOffset));

_byteArrayType = _metadataLoadContext.Resolve(

typeof(byte)).MakeArrayType();

_guidType = _metadataLoadContext.Resolve(typeof(Guid));

_uriType = _metadataLoadContext.Resolve(typeof(Uri));

_versionType = _metadataLoadContext.Resolve(typeof(Version));

_jsonArrayType = _metadataLoadContext.Resolve(JsonArrayFullName);

_jsonElementType = _metadataLoadContext.Resolve(JsonElementFullName);

_jsonNodeType = _metadataLoadContext.Resolve(JsonNodeFullName);

_jsonObjectType = _metadataLoadContext.Resolve(JsonObjectFullName);

_jsonValueType = _metadataLoadContext.Resolve(JsonValueFullName);

// Unsupported types.

_typeType = _metadataLoadContext.Resolve(typeof(Type));

_serializationInfoType = _metadataLoadContext.Resolve(

typeof(Runtime.Serialization.SerializationInfo));

_intPtrType = _metadataLoadContext.Resolve(typeof(IntPtr));

_uIntPtrType = _metadataLoadContext.Resolve(typeof(UIntPtr));

_iAsyncEnumerableGenericType = _metadataLoadContext.Resolve(

IAsyncEnumerableFullName);

_dateOnlyType = _metadataLoadContext.Resolve(DateOnlyFullName);

_timeOnlyType = _metadataLoadContext.Resolve(TimeOnlyFullName);

_jsonConverterOfTType = _metadataLoadContext.Resolve(

JsonConverterOfTFullName);

PopulateKnownTypes();

}Well, how's it going? Or maybe there is no typo at all?

Let's first look at the analyzer warning: V3080 Possible null dereference of method return value. Consider inspecting: Resolve(...). JsonSourceGenerator.Parser.cs 203

The Resolve method can return null. That's what method's signature indicates. And that's what PVS-Studio warns us about when it detects the possibility of returning null value with the help of the interprocedural analysis.

public Type? Resolve(Type type)

{

Debug.Assert(!type.IsArray,

"Resolution logic only capable of handling named types.");

return Resolve(type.FullName!);

}Let's go further, to another overload of Resolve.

public Type? Resolve(string fullyQualifiedMetadataName)

{

INamedTypeSymbol? typeSymbol =

_compilation.GetBestTypeByMetadataName(fullyQualifiedMetadataName);

return typeSymbol.AsType(this);

}Note that typeSymbol is written as nullable reference type: INamedTypeSymbol?. Let's go even further - to the AsType method.

public static Type AsType(this ITypeSymbol typeSymbol,

MetadataLoadContextInternal metadataLoadContext)

{

if (typeSymbol == null)

{

return null;

}

return new TypeWrapper(typeSymbol, metadataLoadContext);

}As you can see, if the first argument is a null reference, then the null value is returned from the method.

And now let's go back to the Parser type constructor. In this type constructor, usually the result of the Resolve method call is simply written to some field. But PVS-Studio warns that there is an exception:

_byteArrayType = _metadataLoadContext.Resolve(typeof(byte)).MakeArrayType();Here, the MakeArrayType instance method is called for the result of the Resolve method call. Consequently, if Resolve returns null, a NullReferenceException will occur.

Issue 6

public abstract partial class Instrument<T> : Instrument where T : struct

{

[ThreadStatic] private KeyValuePair<string, object?>[] ts_tags;

....

}PVS-Studio warning: V3079 'ThreadStatic' attribute is applied to a non-static 'ts_tags' field and will be ignored Instrument.netfx.cs 20

Let's quote the documentation: Note that in addition to applying the ThreadStaticAttribute attribute to a field, you must also define it as a static field (in C#) or a Shared field (in Visual Basic).

As you can see from code, the ts_tags is instance field. So, it makes no sense to mark the field with the ThreadStatic attribute. Or there's some kind of black magic going on here...

Issue 7

private static JsonSourceGenerationOptionsAttribute?

GetSerializerOptions(AttributeSyntax? attributeSyntax)

{

....

foreach (AttributeArgumentSyntax node in attributeArguments)

{

IEnumerable<SyntaxNode> childNodes = node.ChildNodes();

NameEqualsSyntax? propertyNameNode

= childNodes.First() as NameEqualsSyntax;

Debug.Assert(propertyNameNode != null);

SyntaxNode? propertyValueNode = childNodes.ElementAtOrDefault(1);

string propertyValueStr = propertyValueNode.GetLastToken().ValueText;

....

}

....

}PVS-Studio warning: V3146 Possible null dereference of 'propertyValueNode'. The 'childNodes.ElementAtOrDefault' can return default null value. JsonSourceGenerator.Parser.cs 560

If the childNodes collection contains fewer than two elements, the call of ElementAtOrDefault returns the default(SyntaxNode) value (i.e. null, since SyntaxNode is a class). In this case, a NullReferenceException is thrown on the next line. It is especially strange that propertyValueNode is a nullable reference type, but it (propertyValueNode) is dereferenced without checking.

Perhaps there is some implicit contract here that there is always more than one element in childNodes. For example, if there is propertyNameNode, then there is also propertyValueNode. In this case, to avoid unnecessary questions, one can use the ElementAt method call.

Issue 8

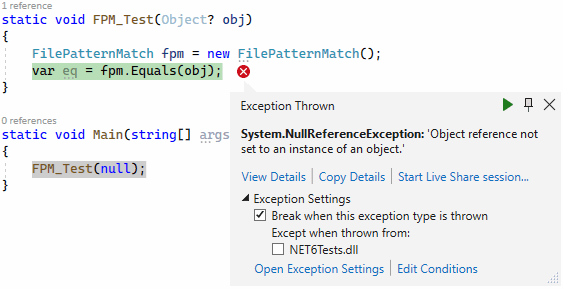

There is such a structure – Microsoft.Extensions.FileSystemGlobbing.FilePatternMatch. This structure overrides the Equals(Object) method, which seems logical. Documentation describing the method.

Let's say we have code that calls this method:

static void FPM_Test(Object? obj)

{

FilePatternMatch fpm = new FilePatternMatch();

var eq = fpm.Equals(obj);

}What do you think will happen if FPM_Test is called with a null value? Will the false value be written to the eq variable? Well, almost.

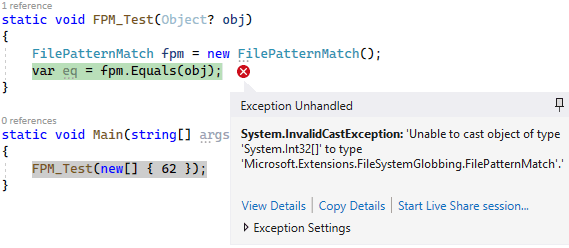

The exception is also thrown if we pass as an argument an instance of a type other than FilePatternMatch. For example... If we pass an array of some kind.

Have you guessed yet why this happens? The point is, in the Equals method, the argument is not checked in any way for a null value or for type compatibility, but is simply unboxed without any conditions:

public override bool Equals(object obj)

{

return Equals((FilePatternMatch) obj);

}PVS-Studio warning: V3115 Passing 'null' to 'Equals' method should not result in 'NullReferenceException'. FilePatternMatch.cs 61

Of course, judging from the documentation, no one promised us that Equals(Object) would return false if it does not accept FilePatternMatch. But that would probably be the most expected behavior.

The interesting thing about duplicate checks. You may not always explicitly know — is it just redundant code or should there be something else instead of one of duplicate checks. Anyway, let's look at a few examples.

Issue 9

internal DeflateManagedStream(Stream stream,

ZipArchiveEntry.CompressionMethodValues method,

long uncompressedSize = -1)

{

if (stream == null)

throw new ArgumentNullException(nameof(stream));

if (!stream.CanRead)

throw new ArgumentException(SR.NotSupported_UnreadableStream,

nameof(stream));

if (!stream.CanRead)

throw new ArgumentException(SR.NotSupported_UnreadableStream,

nameof(stream));

Debug.Assert(method == ZipArchiveEntry.CompressionMethodValues.Deflate64);

_inflater

= new InflaterManaged(

method == ZipArchiveEntry.CompressionMethodValues.Deflate64,

uncompressedSize);

_stream = stream;

_buffer = new byte[DefaultBufferSize];

}PVS-Studio warning: V3021 There are two 'if' statements with identical conditional expressions. The first 'if' statement contains method return. This means that the second 'if' statement is senseless DeflateManagedStream.cs 27

At the beginning of the method, there are several checks. But, here's the bad luck, one of the checks (!stream.CanRead) is completely duplicated (both the condition and then branch of the if statement).

Issue 10

public static object? Deserialize(ReadOnlySpan<char> json,

Type returnType,

JsonSerializerOptions? options = null)

{

// default/null span is treated as empty

if (returnType == null)

{

throw new ArgumentNullException(nameof(returnType));

}

if (returnType == null)

{

throw new ArgumentNullException(nameof(returnType));

}

JsonTypeInfo jsonTypeInfo = GetTypeInfo(options, returnType);

return ReadFromSpan<object?>(json, jsonTypeInfo)!;

}PVS-Studio warning: V3021 There are two 'if' statements with identical conditional expressions. The first 'if' statement contains method return. This means that the second 'if' statement is senseless JsonSerializer.Read.String.cs 163

Yeah, a similar situation, but in a completely different place. Before using, there is the returnType parameter check for null. It's good, but they check the parameter twice.

Issue 11

private void WriteQualifiedNameElement(....)

{

bool hasDefault = defaultValue != null && defaultValue != DBNull.Value;

if (hasDefault)

{

throw Globals.NotSupported(

"XmlQualifiedName DefaultValue not supported. Fail in WriteValue()");

}

....

if (hasDefault)

{

throw Globals.NotSupported(

"XmlQualifiedName DefaultValue not supported. Fail in WriteValue()");

}

}PVS-Studio warning: V3021 There are two 'if' statements with identical conditional expressions. The first 'if' statement contains method return. This means that the second 'if' statement is senseless XmlSerializationWriterILGen.cs 102

Here the situation is a little more exciting. If the previous duplicate checks followed one after another, here they are at different ends of the method - almost 20 lines apart. However, the hasDefault local variable being checked does not change during this time. Accordingly, either the exception will be thrown during the first check, or it will not be thrown at all.

Issue 12

internal static bool AutoGenerated(ForeignKeyConstraint fk, bool checkRelation)

{

....

if (fk.ExtendedProperties.Count > 0)

return false;

if (fk.AcceptRejectRule != AcceptRejectRule.None)

return false;

if (fk.DeleteRule != Rule.Cascade) // <=

return false;

if (fk.DeleteRule != Rule.Cascade) // <=

return false;

if (fk.RelatedColumnsReference.Length != 1)

return false;

return AutoGenerated(fk.RelatedColumnsReference[0]);

}PVS-Studio warning: V3022 Expression 'fk.DeleteRule != Rule.Cascade' is always false. xmlsaver.cs 1708

Traditionally, the question is - was it needed checking another value or is it just redundant code?

First, let's have a look at a couple of warnings found. Then, I'll tell you a little story.

Issue 13

internal void SetLimit(int physicalMemoryLimitPercentage)

{

if (physicalMemoryLimitPercentage == 0)

{

// use defaults

return;

}

_pressureHigh = Math.Max(3, physicalMemoryLimitPercentage);

_pressureLow = Math.Max(1, _pressureHigh - 9);

Dbg.Trace($"MemoryCacheStats",

"PhysicalMemoryMonitor.SetLimit:

_pressureHigh={_pressureHigh}, _pressureLow={_pressureLow}");

}PVS-Studio warning: V3138 String literal contains potential interpolated expression. Consider inspecting: _pressureHigh. PhysicalMemoryMonitor.cs 110

It almost seems like someone wanted to log the _pressureHigh and _pressureLow fields here. However, the substitution of values won't work, since the string is not interpolated. But the interpolation symbol is on the first argument of the Dbg.Trace method, and there is nothing to substitute in the argument. :)

Issue 14

private void ParseSpecs(string? metricsSpecs)

{

....

string[] specStrings = ....

foreach (string specString in specStrings)

{

if (!MetricSpec.TryParse(specString, out MetricSpec spec))

{

Log.Message("Failed to parse metric spec: {specString}");

}

else

{

Log.Message("Parsed metric: {spec}");

....

}

}

}PVS-Studio warning: V3138 String literal contains potential interpolated expression. Consider inspecting: spec. MetricsEventSource.cs 381

One is trying to parse the specString string. If it doesn't work out, one need to log the source string, if it works out - to log the result (the spec variable) and perform some other operations.

The problem again is that both in the first and in the second case the interpolation symbol is missing. As a consequence, the values of the specString and spec variables won't be substituted.

And now get ready for the promised story.

As I mentioned above, I checked the .NET Core libraries in 2019. I found several strings that most likely had to be interpolated, but because of the missed '$' symbol they were not. In that article, the corresponding warnings are described as issue 10 and issue 11.

I created the bug report on GitHub. After that, the .NET development team fixed some code fragments described in the article. Among them - the errors with interpolated strings. The corresponding pull request.

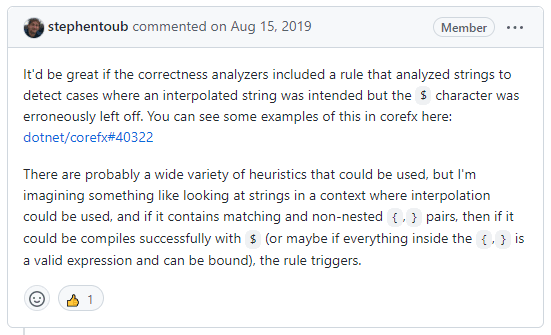

Moreover, in the Roslyn Analyzers issue tracker, was created the task of developing a new diagnostic that would detect such cases.

My colleague described the whole story in a little more detail here.

Let's get back to the present. I knew all this and remembered it, so I was very surprised when I came across errors with missed interpolation again. How can that be? After all, there already should be the out-of-the-box diagnostic to help avoid these errors.

I decided to check out that diagnostic development issue from August 15, 2019, and it turned out... that the diagnostic is not ready yet. That's the answer to the question - where the interpolation errors come from.

PVS-Studio has been detecting such problems since 7.03 release (June 25, 2019) - make use of it. ;)

During the check, I came across the warnings several times that seemed vaguely familiar to me. It turned out that I had already described them last time. Since they are still in the code, I assume that these are not errors.

For example, the code below seems to be a really unusual way to throw an ArgumentOutOfRangeException. This is issue 30 from the last check.

private ArrayList? _tables;

private DataTable? GetTable(string tableName, string ns)

{

if (_tables == null)

return _dataSet!.Tables.GetTable(tableName, ns);

if (_tables.Count == 0)

return (DataTable?)_tables[0];

....

}However, I have a few questions about other fragments already discovered earlier. For example, issue 25. In the loop, the seq collection is bypassed. But only the first element of the collection, seq[0], is constantly accessed. It looks... unusual.

public bool MatchesXmlType(IList<XPathItem> seq, int indexType)

{

XmlQueryType typBase = GetXmlType(indexType);

XmlQueryCardinality card = seq.Count switch

{

0 => XmlQueryCardinality.Zero,

1 => XmlQueryCardinality.One,

_ => XmlQueryCardinality.More,

};

if (!(card <= typBase.Cardinality))

return false;

typBase = typBase.Prime;

for (int i = 0; i < seq.Count; i++)

{

if (!CreateXmlType(seq[0]).IsSubtypeOf(typBase)) // <=

return false;

}

return true;

}PVS-Studio warning: V3102 Suspicious access to element of 'seq' object by a constant index inside a loop. XmlQueryRuntime.cs 729

This code confuses me a little. Does it confuse you?

Or let's take issue 34.

public bool Remove(out int testPosition, out MaskedTextResultHint resultHint)

{

....

if (lastAssignedPos == INVALID_INDEX)

{

....

return true; // nothing to remove.

}

....

return true;

}PVS-Studio warning: V3009 It's odd that this method always returns one and the same value of 'true'. MaskedTextProvider.cs 1531

The method always returned true before, and it does the same thing now. At the same time, the comment says that the method can also return false: Returns true on success, false otherwise. The same story we can find in the documentation.

The following example I will even put in a separate section. Even though it was also described in the previous article. Let's speculate a little not only on the code fragment itself, but also on one feature used in the fragment – nullable reference types.

In general, I have not yet figured out whether I like nullable reference types or not.

On the one hand, nullable reference types have a huge advantage. They make signature of methods more informative. One glance at a method is enough to understand whether it can return null, whether a certain parameter can have a null value, etc.

On the other hand, all this is built on trust. No one forbids you to write code like this:

static String GetStr()

{

return null!;

}

static void Main(string[] args)

{

String str = GetStr();

Console.WriteLine(str.Length); // NRE, str - null

}Yes, yes, yes, it's synthetic code, but you can write it this way! If such a code is written inside your company, we go (relatively speaking) to the author of GetStr and have a conversation. However, if GetStr is taken from some library and you don't have the sources of this library - such a surprise won't be very pleasant.

Let's return from synthetic examples to our main topic – .NET 6. And there are subtleties. For example, different libraries are divided into different solutions. And looking through them, I repeatedly wondered – is nullable context enabled in this project? The fact that there is no check for null - is this expected or not? Probably, this is not a problem when working within the context of one project. However, with cursory analysis of all projects, it creates certain difficulties.

And it really gets interesting. All sorts of strange things start showing up when there is migration to a nullable context. It seems like a variable cannot have null value, and at the same time there is a check. And let's face it, .NET has a few such places. Let me show you a couple of them.

private void ValidateAttributes(XmlElement elementNode)

{

....

XmlSchemaAttribute schemaAttribute

= (_defaultAttributes[i] as XmlSchemaAttribute)!;

attrQName = schemaAttribute.QualifiedName;

Debug.Assert(schemaAttribute != null);

....

}PVS-Studio warning: V3095 The 'schemaAttribute' object was used before it was verified against null. Check lines: 438, 439. DocumentSchemaValidator.cs 438

The '!' symbol hints that we are working with a nullable context here. Okay.

1. Why is the 'as' operator used for casting, and not a direct cast? If there is confidence that schemaAttribute is not null (that's how I read the implicit contract with '!'), so _defaultAttributes[i] does have the XmlSchemaAttribute type. Well, let's say a developer likes this syntax more - okay.

2. If schemaAttribute is not null, why is there the check for null in Debug.Assert below?

3. If the check is relevant and schemaAttribute can still have a null value (contrary to the semantics of nullable reference types), then execution will not reach Debug.Assert due to the thrown exception. The exception will be thrown when accessing schemaAttribute.QualifiedName.

Personally, I have a lot of questions at once when looking at such a small piece of code.

Here is a similar story:

public Node DeepClone(int count)

{

....

while (originalCurrent != null)

{

originalNodes.Push(originalCurrent);

newNodes.Push(newCurrent);

newCurrent.Left = originalCurrent.Left?.ShallowClone();

originalCurrent = originalCurrent.Left;

newCurrent = newCurrent.Left!;

}

....

}On the one hand, newCurrent.Left can have a null value, since the result of executing the ?. operator is written to it (originalCurrent.Left?.ShallowClone()). On the other hand, in the last line we see the annotation that newCurrent.Left not null.

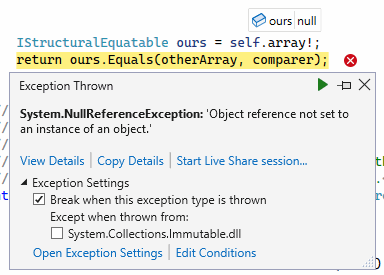

And now let's look at the code fragment from .NET 6, that in fact, was the reason why I started to write this section. The IStructuralEquatable.Equals(object? other, IEqualityComparer comparer) implementation in the ImmutableArray type.

internal readonly T[]? array;

bool IStructuralEquatable.Equals(object? other, IEqualityComparer comparer)

{

var self = this;

Array? otherArray = other as Array;

if (otherArray == null)

{

if (other is IImmutableArray theirs)

{

otherArray = theirs.Array;

if (self.array == null && otherArray == null)

{

return true;

}

else if (self.array == null)

{

return false;

}

}

}

IStructuralEquatable ours = self.array!;

return ours.Equals(otherArray, comparer);

}If you look at the last code lines in Visual Studio, the editor will helpfully tell you that ours is not null. It can be seen from the code – self.array is nonnullable reference variable.

OK, let's write the following code:

IStructuralEquatable immutableArr = default(ImmutableArray<String>);

var eq = immutableArr.Equals(null, EqualityComparer<String>.Default);Then we run it for execution and see a NullReferenceException.

Whoops. It seems that the ours variable, which is not null, in fact still turned out to be a null reference.

Let's find out how that happened.

Here you can have the counter-argument that the immutable array has incorrect state, since it was created not through special methods/properties, but through calling the default operator. But getting an NRE on an Equals call for such an object is still a bit strange.

However, that's not even the point. Code, annotations and hints indicates that ours is not null. In fact, the variable does have the null value. For me personally, this undermines trust in nullable reference types a bit.

PVS-Studio issues a warning: V3125 The 'ours' object was used after it was verified against null. Check lines: 1144, 1136. ImmutableArray_1.cs 1144

By the way, I wrote about this problem in the last article (issue 53). Then, however, there was no nullable annotations yet.

Note. Returning to the conversation about operations on the ImmutableArray instances in the default state, some methods/properties use special methods: ThrowNullRefIfNotInitialized and ThrowInvalidOperationIfNotInitialized. These methods report the uninitialized state of the object. Moreover, explicit implementations of interface methods use ThrowInvalidOperationIfNotInitialized. Perhaps it should have been used in the case described above.

Here I want to ask our audience – what kind of experience do you have working with nullable reference types? Do you like them? Or maybe you don't like them? Have you used nullable reference types on your projects? What went well? What difficulties did you have? I'm curious as to your view on nullable reference types.

In conclusion, once again, I would like to congratulate the .NET 6 development team with the release. I also want to say thank you to all those who contribute to this project. I am sure that they will fix the shortcomings. There are still many achievements ahead.

I also hope that I was able to remind you once again how the static analysis benefits the development process. If you are interested, you can try PVS-Studio on your project as well. By the way, click on this link, and get an extended license that is valid for 30 days, not 7. Isn't that a good reason to try the analyzer? ;)

And by good tradition, I invite you to subscribe to my Twitter so as not to miss anything interesting.

24