
Automate EBS Snapshot archiving with Boto3 and Lambda

Recently, AWS released a new storage tier for EBS Snapshots: archive. Archiving reduces storage cost by up to 75%.

Unfortunately, archiving is not yet possible through AWS Backup, and can only be done manually via the EC2 console or the AWS CLI.

However, since the AWS SDK was updated as well, we can automate archiving using a scheduled Lambda function.

Since I couldn't find any resources regarding this process, I decided to write up a tutorial to hopefully give you some insight on using this great new feature.

A snapshot of an EBS volume attached to an EC2 instance is created daily via AWS Backup. The goal is to keep one snapshot in warm storage (standard), while moving the others into cold storage (archive). The retention period of the snapshots is set to 91 days.

I will be using a SAM template to write my Lambda function, and an EventBridge scheduled event to invoke the function daily so that it moves older snapshots into archive.

If you haven’t already, follow the instructions to install and set up the SAM CLI here.

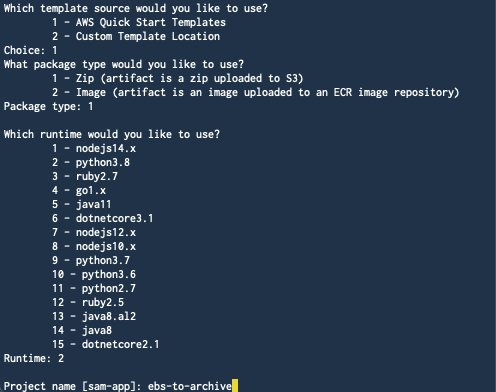

Next, we will run:

    sam init

We will use an AWS Quick Start Template, use Zip as the package type, and select python3.8 as our runtime.

Our setup process should look like this:

After that, we choose the Hello World template, delete everything from app.py except the lambda_handler function and its return statement, delete the HelloWorld event from template.yaml, and are good to go.

1. Set up packages and boto3

Now we import boto3 and datetime, and initialize the ec2 boto3 client.

Our code looks like this now:

    import json
    from datetime import date

    import boto3

    ec2 = boto3.client('ec2')


    # Lambda always passes the event and the context to the handler.
    def lambda_handler(event, context):
        return {
            "statusCode": 200,
            "body": json.dumps({
                "message": "Success",
            }),
        }

2. List our snapshots

To find the snapshots we want to archive, we first need to list our snapshots and filter accordingly.

I used the describe_snapshots method and filtered by owner-id and storage-tier.

Important: always specify your owner ID, otherwise the method will list all publicly available snapshots as well. For more information, check out the boto3 docs. You can also find a range of other filters there.

For storage-tier we select 'standard', as we only want to list the snapshots that haven't been moved to archive yet.
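As a variation on the listing described above, here is a minimal sketch that avoids hardcoding the owner ID and also handles accounts with many snapshots. It assumes you pass in the client created with boto3.client('ec2'); the helper name list_standard_snapshots is my own and not part of boto3. OwnerIds=['self'] restricts the result to snapshots owned by the calling account, and the describe_snapshots paginator follows the NextToken for us.

```python
def list_standard_snapshots(ec2_client):
    """Return all snapshots owned by the calling account in the standard tier.

    ec2_client is expected to be a boto3 EC2 client, e.g. boto3.client('ec2').
    The function name is hypothetical; only the client calls are boto3 API.
    """
    paginator = ec2_client.get_paginator("describe_snapshots")
    pages = paginator.paginate(
        OwnerIds=["self"],  # only snapshots owned by this account
        Filters=[{"Name": "storage-tier", "Values": ["standard"]}],
    )

    snapshots = []
    for page in pages:
        # Each page is a regular describe_snapshots response.
        snapshots.extend(page.get("Snapshots", []))
    return snapshots
```

With a single volume and one snapshot per day the plain call is enough; the paginator only starts to matter once the result no longer fits into one response.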

In our function, the describe_snapshots call with both filters looks like this:

    snapshots = ec2.describe_snapshots(
        Filters=[
            {
                'Name': 'owner-id',
                'Values': ['YOUR_OWNER_ID'],
            },
            {
                'Name': 'storage-tier',
                'Values': ['standard'],
            },
        ]
    )

3. Move older snapshots to cold storage

The last step is moving all snapshots older than today to archive, so we loop over them and move all but the current one into archive.

Usually there shouldn't be more than one older snapshot left in standard storage, but in case we missed one or the timing is off, we loop over all of them anyway.

To compare the dates we use the 'StartTime' property from the response and simply compare it to the current date.

There are many ways to do this, so feel free to choose another approach and let me know in the comments.
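One such alternative, as a rough sketch: instead of comparing against today's date, sort the snapshots by StartTime and archive everything except the newest one. This assumes all listed snapshots belong to the same volume, as in my setup; with several volumes you would have to group by VolumeId first. The helper names select_snapshots_to_archive and archive_snapshots are made up for this example, and the client is passed in rather than created inside the functions.

```python
def select_snapshots_to_archive(snapshots):
    """Return every snapshot except the most recent one, oldest first.

    snapshots is a list of dicts shaped like the entries in
    describe_snapshots()['Snapshots'] (needs 'SnapshotId' and 'StartTime').
    """
    ordered = sorted(snapshots, key=lambda s: s["StartTime"])
    # Dropping the last element keeps the newest snapshot in standard storage.
    return ordered[:-1]


def archive_snapshots(ec2_client, snapshots):
    """Move the given snapshots to the archive tier and return their IDs."""
    archived_ids = []
    for snapshot in snapshots:
        ec2_client.modify_snapshot_tier(
            SnapshotId=snapshot["SnapshotId"],
            StorageTier="archive",
        )
        archived_ids.append(snapshot["SnapshotId"])
    return archived_ids
```

Inside the handler this would be called as archive_snapshots(ec2, select_snapshots_to_archive(snapshots['Snapshots'])). The advantage is that the result does not depend on when the function runs relative to midnight UTC.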

The final code of our function, using the date comparison, looks like this:

    import json
    from datetime import date

    import boto3

    ec2 = boto3.client('ec2')


    def lambda_handler(event, context):
        # List our own snapshots that are still in the standard tier.
        snapshots = ec2.describe_snapshots(
            Filters=[
                {
                    'Name': 'owner-id',
                    'Values': ['YOUR_OWNER_ID'],
                },
                {
                    'Name': 'storage-tier',
                    'Values': ['standard'],
                },
            ]
        )

        # Archive every snapshot that was started before today.
        for snapshot in snapshots['Snapshots']:
            if snapshot['StartTime'].date() < date.today():
                ec2.modify_snapshot_tier(
                    SnapshotId=snapshot['SnapshotId'],
                    StorageTier='archive'
                )

        return {
            "statusCode": 200,
            "body": json.dumps({
                "message": "Success",
            }),
        }

4. Invoking on a schedule

In my case I want to invoke the function once a day, a few hours after the backup was created.

We simply add a Schedule as an Event to the function in our template.yaml like this:

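    Events:
      ScheduledEvent:
        Type: Schedule
        Properties:
          Schedule: "cron(0 10 * * ? *)"
          Enabled: True

This will invoke the function every day at 10:00 UTC. Note the 0 in the minutes field: a * there would invoke the function every minute from 10:00 to 10:59. To check out cron expressions in AWS click here.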
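If the six fields of an AWS cron expression are hard to keep apart, a tiny helper can label them. This is only an illustrative sketch: describe_cron and AWS_CRON_FIELDS are names I made up, and the function does not validate the values, it just splits the expression in the order AWS documents (minutes, hours, day of month, month, day of week, year).

```python
AWS_CRON_FIELDS = (
    "minutes",
    "hours",
    "day_of_month",
    "month",
    "day_of_week",
    "year",
)


def describe_cron(expression):
    """Split an expression like 'cron(0 10 * * ? *)' into named fields."""
    if not (expression.startswith("cron(") and expression.endswith(")")):
        raise ValueError("expected an expression of the form cron(...)")

    parts = expression[len("cron("):-1].split()
    if len(parts) != len(AWS_CRON_FIELDS):
        raise ValueError(f"expected 6 fields, got {len(parts)}")

    return dict(zip(AWS_CRON_FIELDS, parts))
```

Calling describe_cron("cron(0 10 * * ? *)") shows minutes as "0" and hours as "10", which is a single invocation per day, whereas "cron(* 10 * * ? *)" has "*" in the minutes field and therefore fires sixty times during that hour.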

Now we simply need to deploy the SAM app and add the appropriate policies to our function (it needs at least ec2:DescribeSnapshots and ec2:ModifySnapshotTier), and we are good to go!

I hope I could give you some insight on how to automate snapshot archiving with the newly released storage tier from AWS.
