22

😡 Agonies, Despair and self hosted Redash BI server on Microsoft Azure cloud platform 😋 part 2

Hello everyone,

Thank you for joining in today for part 2 to talk about the open source BI tool named Redash and how to set up a self hosted Redash server in the cloud.

We finished part 1 with a working instance in our local development environment and now we want to proceed on to the cloud. We will continue on from where we left off and if for any reason at all you didn't read the first part, i would suggest that you do that first.

Another thing i wanted to talk about is the base for this setup. In the website there is a section for setting up a self hosted instance, here is the link. In order to see the full picture we need also to visit the GitHub project. The issue i have with this specif setup is that in aims using a dedicated vm that runs the Redash using docker compose. This is a very wasteful approach in terms of resources and cost. We decided to change the approach for our use case utilizing Azure App Services that can run a multi container application with docker compose. We also need to remember that we want to utilize the cloud platform and not create all the services locally in the web app because of the management involved in it regarding the database. We want to use a managed service from Azure for it. Azure has it and its called Azure Database for PostgreSQL servers.

Let's list what we will need for our setup in Azure. As we already said, we will need a multi container web app under Azure App Services, we need a managed PostgreSQL database under Azure Database for PostgreSQL servers and of course we can't move forward without an account with Azure.

We will start from easy and proceed to the more challenging steps we need to accomplish in order to have a working instance.

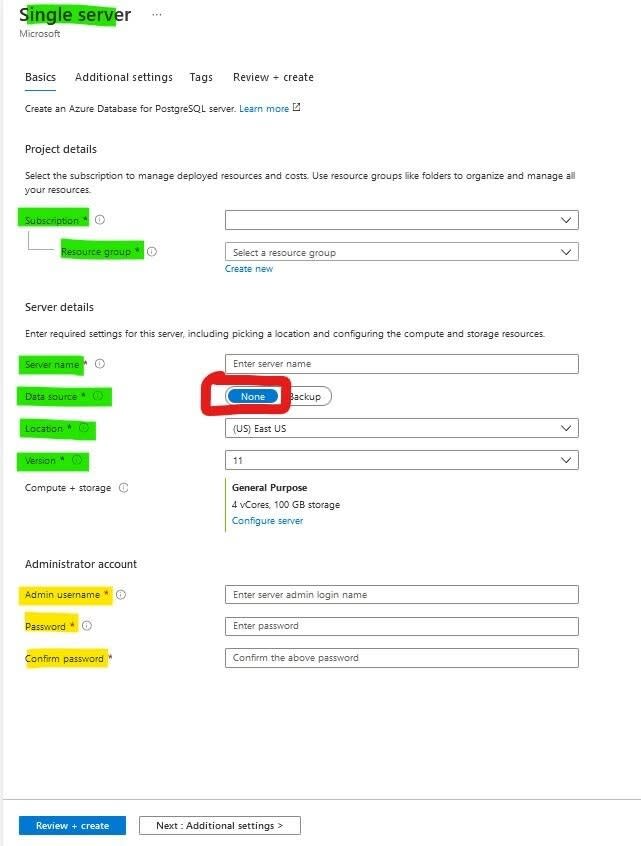

Step 1 is to create a managed PostgreSQL server in Azure. In order to accomplish it locate the search bar on the top of the screen in Azure portal and type Azure Database for PostgreSQL servers. Click on the option and navigate to the dedicate page. Locate the create button with ➕ sign on the top left hand side and click on it. In the next screen we will need to select the plan we need for production use so we will choose 'Single server' and in the following screen you will need to fill the details for this instance. Choose a subscription and create new resource group name, provide a name for this instance, choose location that is near you, leave the data source as 'none' and choose the PostgreSQL version. Pay attention that in the 'Compute + storage' option to choose 'General Purpose' this is very important. At the bottom you can provide the credentials for the admin user to PostgreSQL server, save these details because we will need them soon. Click next until you get to 'Review and Create' and click create. This may take couple of minutes. In case your review fails then go back to the 'Tags' tab and fill in these values: BusinessOwner, BusinessUnit, Application, Environment if any of them are empty. Once we got it to get into the creation process we can go on to the next step, as i said it may take a few minutes and we want to be efficient and not to waste time.

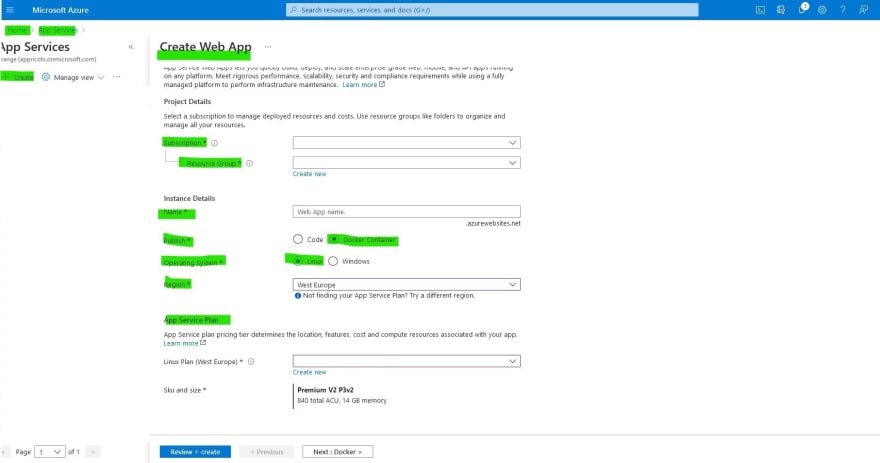

Step 2 is to create a multi container web application under Azure App Services. As before let's locate the search bar on the top of the screen in Azure portal and type App Services. Click on the option and navigate to the dedicate page. Locate the create button with ➕ sign on the top left hand side and click on it. You can see that the window we got is very similar to the one we had before when wanted to create a managed database server. Fill the details for this web app instance. Choose a subscription and the same resource group name you created in the previous step, provide a name for this web app instance, choose a region that is near you, under the publish option choose Docker container, under the operating system option choose Linux and choose a plan for the web app. Pay attention that we will not drill down yet with the web app configurations until we create it and proceed with this article. We want to understand what we need to proceed with it. The default is an empty Nginx docker instance.

Step 3 is to create a docker compose file that we can apply on the multi container web application. Now, in theory you could use the one that we already have from part 1 of this series and we are going to do this actually and then we will change what we need in order to fit it to our needs. We discussed about why we don't want to do it. This is the reason we create in the first step a managed PostgreSQL server. Hopefully by the time we are done with step 2, both the web app and the database server are created. You should see the progress under the bell icon 🔔 on the top right hand side in Azure portal. Once the web application created lets navigate to it. You can either look it up in the search bar or via the App Services screen pick it from the list. As we are in the specific web app management screen we need to check that the web app is working with a public IP address. You should be in the overview page, on the top left next to the menu, locate the 'browse' button and click on it. You should see a new web page that shows the default Nginx welcome page. We confirmed that the web app is up and alive. Now locate in the left hand side menu the option called 'App Service Logs'. We want to enable the log stream from docker into the web app itself. Under 'Application logging' choose 'file system' and under 'Quota' and 'Days' choose 50 (it doesn't really matter) and click save. Now locate the 'Deployment Center' in the same menu. In this screen under the 'Settings' tab first of all let's set the source to be container registry. Now under the 'Registry settings' we will choose container type to be docker compose, registry source to be Docker hub and the repository access to be public. In the config area paste the content of our docker-compose.yml file from the previous article and click save. Scroll up to the very top and click on the logs tab. You will be able to see the process of the image pulling and starting up docker compose. Once it is done you can click on the browse button to navigate to the public web page of this web application or if you didn't close the tab we opened earlier when we checked that the web app is alive just refresh it. You should at this point, in theory, see the same screen, Redash welcome window where you need to create the admin user, but we know that this is not the case. We need to go through the same process as before to create the management database and run the setup script. Well, we can't do that here! There was a time, not long ago, with the previous version of the Azure portal and the web application where we could specify a command that runs on the first startup, it is not available anymore. Let's see how we can fix our situation.

If we pause for a moment and review what happened until this point, we would basically think that not much is different from the local environment except for the fact that our 'local' environment is now a web app that is running in the cloud! Well, yes! And this is the exact experience i was trying to make you go through. Some of you who maybe paused in between the readings and read the information behind the links that I left would see that the process and the experience that Redash docs takes you is just insane. There are nuances to pay attention to and development environment is not the damn same as the production one, which is crazy, in my opinion! Why would i have 2 or more completely different configurations that are not keeping me in harmony with the service 😵 ?! We all know the first and simple rule - keep your development environment as close as you can to your production environment. You could also examine the docker compose configurations file carefully and find that even in development we didn't use the suggested development setup, we went all the way to prod from the start. Surprise! 💥💥💥

As we reveal this discovery, we now want to pay attention to the configurations themselves and we understand that we can omit the PostgreSQL database service along with the pgAdmin service. We could argue that we don't actually need the nginx service as well, but there is a reason its here, so we are going to leave it (we will talk about this later in the future). Now, what is about the Redis server ❓❓❓ Well , if you think that we should drop it as well together with the pgAdmin and PostgreSQL you are right, but this is actually would be the wrong thing to do. I will explain it momentarily. Let's fix the cloud version docker compose configurations file, update the multi container web application so it will do its thing and then fix our Redash server, but before that we need one very important piece of information and its the connection string for the managed PostgreSQL server. Navigate the instance we created and on the left hand side menu locate the option called connection string. This is the format of the connection string, just copy your details to replace the place holders.

There are couple of reasons we decided to leave the Redis service locally inside the web app running in a seperate container. The first is that Redis use case in the eyes of the Redash server is used for queueing and caching only, since it all happens in the memory, we need the memory to be available to it from the same machine. The second is the speed, caching or not, these containers are all in the same place and there is no latency caused because we need to be dependant on a remote service. The third is actually pricing. Spinning up a Redis resource or Azure container instances running Redis in a different remote location is a bit costly and not efficient.

// Azure PostgreSQL connection string format

postgresql://<your-admin-username>@<your-managed-server-name>:<your-password>@<your-host>:5432/redash?sslmode=requireAs we have this connection string ready for us to use, let's update it first in the file. Locate the key REDASH_DATABASE_URL and update the value with the connection string. Pay attention that we do specify the name of the actual database name that we are looking to get access to and its 'redash' on purpose.

x-environment: &base_environment

PYTHONUNBUFFERED: 0

REDASH_WEB_WORKERS: 4

REDASH_LOG_LEVEL: "INFO"

REDASH_RATELIMIT_ENABLED: "false"

REDASH_REDIS_URL: "redis://redis_server:6379/0"

REDASH_MAIL_DEFAULT_SENDER: "redash@example.com"

REDASH_ADDITIONAL_QUERY_RUNNERS: "redash.query_runner.python"

REDASH_DATABASE_URL: "YOUR CONNECTION STRING GOES HERE"Next we will locate the pgAdmin and the PostgreSQL services and delete them.

# delete these 2 services under the service object

services:

# database

postgresdb:

image: postgres:alpine

restart: always

container_name: postgresdb_server_local

ports:

- "5432:5432"

environment:

POSTGRES_HOST_AUTH_METHOD: "trust"

POSTGRES_USER: postgresuser

POSTGRES_PASSWORD: postgrespassword

POSTGRES_DB: redash

volumes:

- ./postgres-data:/var/lib/postgresql/data

# pgAdmin

pgAdmin:

container_name: "pgAdmin_local"

image: dpage/pgadmin4

restart: always

ports:

- "11180:80"

- "11443:443"

environment:

PGADMIN_CONFIG_ENHANCED_COOKIE_PROTECTION: "False"

PGADMIN_DEFAULT_EMAIL: pguser@mail.com

PGADMIN_DEFAULT_PASSWORD: pgpassword

depends_on:

- postgresdb

volumes:

- ./pgadmin:/var/lib/pgadmin

- ./pgadmin/backup:/var/lib/pgadmin/storageThere is one small step to do before we forget and it is to delete the dependency on the local PostgreSQL service under the Redash server service. We also want to delete the port 8080 as we do not use it at all in the web app because we are using the nginx reverse proxy.

# delete the postgresdb dependency

services:

# redash server

server:

<<: *base_redash

command: server

ports:

- "5000:5000"

- "5678:5678"

# delete this 1 port

- "8081:8080"

depends_on:

- redis_server

# delete this dependency

- postgresdbWe are left with this file content.

# Azure App Services docker-compose.yml Version

version: "3.9"

x-environment: &base_environment

PYTHONUNBUFFERED: 0

REDASH_WEB_WORKERS: 4

REDASH_LOG_LEVEL: "INFO"

REDASH_RATELIMIT_ENABLED: "false"

REDASH_REDIS_URL: "redis://redis_server:6379/0"

REDASH_MAIL_DEFAULT_SENDER: "redash@example.com"

REDASH_ADDITIONAL_QUERY_RUNNERS: "redash.query_runner.python"

# do not forget to update this key

REDASH_DATABASE_URL: "YOUR CONNECTION STRING GOES HERE"

x-base_redash: &base_redash

environment:

<<: *base_environment

image: redash/redash:8.0.2.b37747

restart: always

services:

# redis

redis_server:

image: redis:alpine

container_name: redis_server_local

restart: unless-stopped

# redash server

server:

<<: *base_redash

command: server

ports:

- "5000:5000"

- "5678:5678"

depends_on:

- redis_server

# redash scheduler

scheduler:

<<: *base_redash

command: scheduler

depends_on:

- server

environment:

<< : *base_environment

QUEUES: "celery"

WORKERS_COUNT: 1

# redash worker 1

scheduled_worker:

<<: *base_redash

command: worker

depends_on:

- server

environment:

<< : *base_environment

QUEUES: "scheduled_queries"

WORKERS_COUNT: 1

# redash worker 2

adhoc_worker:

<<: *base_redash

command: worker

depends_on:

- server

environment:

<< : *base_environment

QUEUES: "queries"

WORKERS_COUNT: 2

# redash worker 3

scheduled_worker:

<<: *base_redash

command: worker

depends_on:

- server

environment:

<< : *base_environment

QUEUES: "schemas"

WORKERS_COUNT: 1

# nginx

nginx:

image: redash/nginx:latest

ports:

- "8080:80"

depends_on:

- server

links:

- server:redash

restart: alwaysYou can save it now and upload it to the web application. If you recall we did it in step 3. Just repeat the step with this new file. A spoiler - nothing is going to change yet.

Can you guess what our next step is to make things work ? I want to challenge you actually. I can tell you that you ladies and gentlemen have the power to fix the issue without me telling you what to do! I really want you to try to think about it for couple of minutes and solve it. Just for the sports. I promise that i will tell you what to do next even if you are not successful.

⏰ ⏰ ⏰ ⏰ ⏰ Count to 600: This is your time window to solve it on your own and you got 540 seconds more than you need.

Alrighty then! We will need 2 things. The first is our local setup and the second is the new connection string. What we are going to do is actually spin up the local environment with the use of the database service being in the cloud rather the one we declared locally. So update the connection string, open a terminal, run the commands from part 1 and go back to the web application, in the overview screen restart it and then proceed to blibk on the browse button and wait to see that we have what we asked for. Just a friendly reminder go through the exact process as we did in the previous part in case you encounter issues.

First command to run:

# local environment version

$ docker-compose -f docker-compose.yml upSecond command to run:

# local environment version

$ docker-compose -f docker-compose.yml run server create_dbYou can understand that we are ignoring the local environment just for the sake of setting up the remote database. I will tell you that there is also another way to accomplish the same fix and it is to do a backup on the local PostgreSQL instance and restore it in the remote instance, but this approach is way to complex comparing to what we actually did for the fix. The backup and restore option is a great choice if you are not starting from scratch. Not our scenario here.

Well,

I hope you guys enjoyed it. It surely was a bumpy ride, but heck, we learned something. It's all about the nuances.

At this point we have a working instance of Redash and we can start using it. We can add data sources, write queries, setup scheduled tasks or queries, create visualizations and more. I will refer you to the official website and the YouTube channel for more information. I urge you guys to go over the Redash docs and learn how to use it. It is handy 👍

Stay tuned for next

Like, subscribe, comment and whatever ...

Goodbye

22