
Search Engine Optimization with GatsbyJS

🔔 This article was originally posted on my site, MihaiBojin.com. 🔔

This is an introductory post about Search Engine Optimization with Gatsby.

It covers a few basics that are easy to implement but will help with your SEO ranking in the long run.

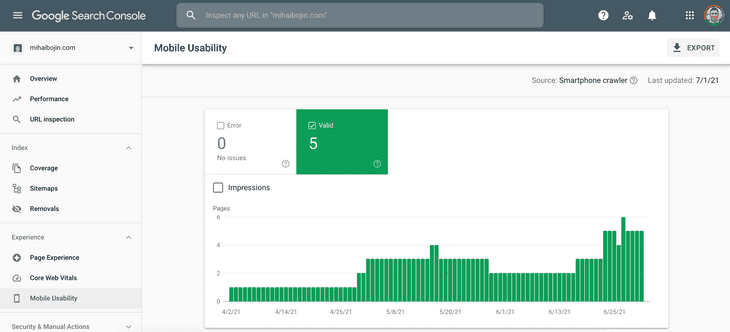

One piece of low-hanging fruit is to verify your domain with Google's Search Console.

Take 5 minutes to do it; you will get helpful information about how Google sees your site.

Every page should define a canonical link. Canonical links help search engines such as Google identify the original version of your content and route all the ranking 'juice' to that one page.

Consider the following URLs:

- http://site.com/article
- http://www.site.com/article
- https://site.com/article

The same content can be accessed at multiple URLs. However, Google wouldn't know which page holds the original content, so it would most likely split the ranking between several of these pages and mark most of them as duplicates. This is highly unpredictable and undesirable. A better approach is to define a canonical link, which helps search engines rank a single URL: the one you choose.
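To make the idea concrete, here is a minimal sketch that collapses the URL variants listed above onto a single preferred form. It assumes, hypothetically, that your preferred form is HTTPS without the www. prefix; toCanonicalUrl is an illustrative helper, not part of Gatsby or any plugin.

```javascript
// Hypothetical helper: maps common URL variants onto one canonical form.
// Assumption: the preferred form uses https:// and no "www." prefix.
function toCanonicalUrl(rawUrl) {
  const url = new URL(rawUrl); // WHATWG URL, available globally in Node.js and browsers
  url.protocol = "https:"; // http -> https
  url.hostname = url.hostname.replace(/^www\./, ""); // drop the www. prefix
  url.hash = ""; // fragments never reach the server, so they don't identify a page
  return url.toString();
}

// All three variants from the list above resolve to the same URL
for (const variant of [
  "http://site.com/article",
  "http://www.site.com/article",
  "https://site.com/article",
]) {
  console.log(toCanonicalUrl(variant)); // https://site.com/article
}
```

The canonical link tag lets you state this choice explicitly instead of leaving search engines to guess.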

You can define a canonical link by including the following tag in the page's head section:

```html
<link rel="canonical" href="https://MihaiBojin.com/"/>
```

Here, href is the URL you choose to represent your original content.

This approach is especially useful when you syndicate your content on other sites. For example, if you republished an article on Medium without a canonical link pointing back to your site, Google would most likely rank the Medium copy as the original due to that site's higher overall ranking, hurting the SEO of your own domain.

There's an effortless way to add canonical links in Gatsby: using the gatsby-plugin-react-helmet-canonical-urls plugin.

Install it with npm install --save gatsby-plugin-react-helmet react-helmet gatsby-plugin-react-helmet-canonical-urls (react-helmet is required by gatsby-plugin-react-helmet).

Then add the following to your gatsby-config.js:

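```javascript
module.exports = {
  plugins: [
    // Required by the canonical URLs plugin (and by react-helmet in general)
    `gatsby-plugin-react-helmet`,
    {
      resolve: `gatsby-plugin-react-helmet-canonical-urls`,
      options: {
        // Base URL used to build each page's canonical link;
        // read from the environment, as in the other snippets in this article
        siteUrl: process.env.SITE_URL,
      },
    },
  ],
};
```

Google will gradually discover and index your site's pages over time. You can define a sitemap to help Google (and other search engines) find all your pages. This does not guarantee faster indexing, and while your site is young it won't make much of a difference, but it will matter more as your site grows!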
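For reference, a sitemap is just an XML file listing your page URLs in the sitemaps.org format. The sketch below builds one from a list of paths, only to show the shape of the output; gatsby-plugin-sitemap generates (and splits) these files for you. buildSitemapXml and escapeXml are hypothetical helpers, and siteUrl is assumed to be a bare origin such as https://site.com, without a path prefix.

```javascript
// Escapes the five characters that are special in XML text content.
function escapeXml(text) {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

// Hypothetical helper: renders a minimal sitemap (sitemaps.org protocol 0.9).
// Assumes siteUrl is an origin only; new URL() resolves "/path" against it.
function buildSitemapXml(siteUrl, paths) {
  const entries = paths.map(
    (path) => `  <url><loc>${escapeXml(new URL(path, siteUrl).toString())}</loc></url>`,
  );
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
    ...entries,
    "</urlset>",
  ].join("\n");
}

console.log(buildSitemapXml("https://site.com", ["/", "/article"]));
```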

First, install the gatsby-plugin-sitemap plugin.

```shell
npm install gatsby-plugin-sitemap
```

Then you will need to add some custom configuration in gatsby-config.js. When I initially configured this plugin, this step took me a long time to get right, partly because of a bug that has since been fixed.

```javascript
module.exports = {
  plugins: [
    {
      resolve: 'gatsby-plugin-sitemap',
      options: {
        output: '/sitemap',
        query: `
          {
            site {
              siteMetadata {
                siteUrl
              }
            }
            allSitePage(
              filter: {
                path: { regex: "/^(?!/404/|/404.html|/dev-404-page/)/" }
              }
            ) {
              nodes {
                path
              }
            }
          }
        `,
        resolvePages: ({ allSitePage: { nodes: allPages } }) => {
          return allPages.map((page) => {
            return { ...page };
          });
        },
        serialize: ({ path }) => {
          return {
            url: path,
            changefreq: 'weekly',
            priority: 0.7,
          };
        },
      },
    },
  ],
};
```

If you're wondering why we're loading a siteUrl, it is required, as explained in the official docs.
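If the query and callbacks above feel opaque, the following sketch mimics what happens to the page list in plain JavaScript: the same regular expression as the allSitePage filter drops Gatsby's 404 pages, and each remaining path is mapped to the same object serialize returns. toSitemapEntries is a hypothetical stand-in for illustration, not the plugin's internals.

```javascript
// The allSitePage regex filter from the config, as a JavaScript RegExp.
// A path is kept in the sitemap when test() returns true.
const SITEMAP_PATH_FILTER = new RegExp("^(?!/404/|/404.html|/dev-404-page/)");

// Hypothetical stand-in for the plugin's pipeline:
// filter the page paths, then shape each one like serialize() does.
function toSitemapEntries(paths) {
  return paths
    .filter((path) => SITEMAP_PATH_FILTER.test(path))
    .map((path) => ({ url: path, changefreq: "weekly", priority: 0.7 }));
}

// Keeps only "/" and "/about/"; the three 404 variants are dropped
console.log(toSitemapEntries(["/", "/about/", "/404/", "/404.html", "/dev-404-page/"]));
```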

Check your sitemap locally by running gatsby build && gatsby serve and accessing http://localhost:9000/sitemap/sitemap-index.xml. If everything is okay, publish your sitemap and then submit it to the Google Search Console.
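Beyond opening the file in a browser, you can list the sitemap URLs from a script. This is a minimal sketch assuming Node.js 18+ (for the global fetch) and gatsby serve running on its default port 9000; extractLocs and listSitemaps are hypothetical helpers, and the regex-based parsing is only adequate for simple, machine-generated XML like this.

```javascript
// Extracts every <loc> value from a sitemap or sitemap index document.
// A regex is adequate for simple generated XML; use a real XML parser otherwise.
function extractLocs(xml) {
  return [...xml.matchAll(/<loc>\s*([^<]+?)\s*<\/loc>/g)].map((match) => match[1]);
}

// Fetches the sitemap index served by `gatsby serve` and returns the URLs it lists.
async function listSitemaps(indexUrl = "http://localhost:9000/sitemap/sitemap-index.xml") {
  const response = await fetch(indexUrl);
  if (!response.ok) {
    throw new Error(`Sitemap index request failed with HTTP ${response.status}`);
  }
  return extractLocs(await response.text());
}

// Usage: listSitemaps().then(console.log, console.error);
```

The listed URLs should point at your production siteUrl rather than localhost, since that is the base the plugin uses when writing the index.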

A robots.txt file tells crawlers which parts of your site they may crawl. If you're looking for an automated way to generate this file, gatsby-plugin-robots-txt is your friend!

Install the plugin by running npm install --save gatsby-plugin-robots-txt and add the following to your gatsby-config.js:

```javascript
module.exports = {
  plugins: [
    {
      resolve: 'gatsby-plugin-robots-txt',
      options: {
        host: process.env.SITE_URL,
        sitemap: process.env.SITE_URL + '/sitemap/sitemap-index.xml',
        policy: [
          {
            userAgent: '*',
            allow: '/',
            disallow: ['/404'],
          },
        ],
      },
    },
  ],
};
```

Setting up the plugin as above will generate a /robots.txt file containing these directives.

If, however, you'd prefer a simpler approach, skip all of the above and create /static/robots.txt with the same directives:

```text
User-agent: *
Allow: /
Disallow: /404
Sitemap: https://[SITE_URL]/sitemap/sitemap-index.xml
Host: https://[SITE_URL]
```

Make sure to replace [SITE_URL] with the appropriate value! The one disadvantage of this approach is that you have to remember to update SITE_URL in this file. This is unlikely to be a problem in practice, but still... using the plugin and relying on a globally defined SITE_URL is less hassle in the long run.

Note: In Gatsby, any file placed in the /static directory is copied as-is into your built site, so /static/robots.txt is served at /robots.txt.
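It's worth knowing how crawlers read the Allow: / and Disallow: /404 rules above. RFC 9309, which Google follows, matches rules as path prefixes: the most specific (longest) matching rule wins, and Allow wins a tie. The sketch below implements just that and ignores the * and $ wildcards; isAllowed is a hypothetical helper for illustration.

```javascript
// Simplified robots.txt matching per RFC 9309: rules match as path prefixes,
// the longest matching rule wins, and Allow wins a tie.
// Wildcards (* and $) are intentionally not supported in this sketch.
function isAllowed(path, rules) {
  let best = null;
  for (const rule of rules) {
    if (rule.path === "" || !path.startsWith(rule.path)) continue;
    const longer = best === null || rule.path.length > best.path.length;
    const allowWinsTie =
      best !== null && rule.path.length === best.path.length && rule.type === "allow";
    if (longer || allowWinsTie) best = rule;
  }
  // No matching rule means the path may be crawled
  return best === null || best.type === "allow";
}

// The rules from the robots.txt above
const rules = [
  { type: "allow", path: "/" },
  { type: "disallow", path: "/404" },
];

console.log(isAllowed("/about/", rules)); // true
console.log(isAllowed("/404/", rules)); // false
console.log(isAllowed("/4040-tips/", rules)); // false: prefix match on "/404"
```

Note the last case: because rules match as prefixes, Disallow: /404 would also block a (hypothetical) page whose path merely starts with /404.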

You may have noticed the Sitemap directive. It tells crawlers where to find your sitemap index. Earlier in this article, we accomplished that by submitting the sitemap to Google Search Console, which is the better approach for Google. However, there are search engines besides Google, and this directive lets all of them find and process your sitemap.
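Here is roughly how a crawler can pick up that directive: Sitemap lines are not tied to any User-agent group, and directive names are case-insensitive. findSitemaps is a hypothetical helper sketching that lookup, not any search engine's actual code.

```javascript
// Collects the URLs of all Sitemap directives in a robots.txt document.
function findSitemaps(robotsTxt) {
  return robotsTxt
    .split(/\r?\n/)
    .map((line) => line.replace(/#.*$/, "").trim()) // strip comments and whitespace
    .filter((line) => /^sitemap\s*:/i.test(line)) // directive names are case-insensitive
    .map((line) => line.slice(line.indexOf(":") + 1).trim()); // value after the first colon
}

const robotsTxt = `User-agent: *
Allow: /
Disallow: /404
Sitemap: https://site.com/sitemap/sitemap-index.xml`;

console.log(findSitemaps(robotsTxt)); // [ 'https://site.com/sitemap/sitemap-index.xml' ]
```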

We're finally at the last part, which is also the trickiest, since it requires an understanding of how Gatsby handles SEO.

If you have no experience with that, I recommend reading the Gatsby Search Engine Optimization page (don't worry about Social Cards for now).

This article won't go into advanced SEO tactics (mostly because I'm no SEO expert).

In time, as I write more content and learn new search engine optimization tips and tricks, I will update this page.

There is, however, a minimal set of configuration every site owner should add (the bare minimum, if you will):

- title, description, and keywords meta tags that represent your content
- a canonical link (explained above)

If you search the Gatsby Starters library, you will find many implementations and slightly different ways of achieving this goal.

Here's how I implemented it. I don't claim this is better or provides any advantages - it simply does the job!

```jsx
import * as React from "react";
import PropTypes from "prop-types";
import { Helmet } from "react-helmet";
import { useSiteMetadata } from "../hooks/use-site-metadata";

function Seo({ title, description, tags, canonicalURL, lang }) {
  const { siteMetadata } = useSiteMetadata();
  // Fall back to the site-wide description when the page doesn't provide one
  const pageDescription = description || siteMetadata.description;

  const meta = [];
  const links = [];

  // Define the keywords META tag only when there are tags to list
  if (tags && tags.length > 0) {
    meta.push({
      name: "keywords",
      content: tags.join(","),
    });
  }

  // Define the canonical link, if one was provided
  if (canonicalURL) {
    links.push({
      rel: "canonical",
      href: canonicalURL,
    });
  }

  return (
    <Helmet
      htmlAttributes={{
        lang,
      }}
      // Define the META title; the template appends the site title
      title={title}
      titleTemplate={siteMetadata?.title ? `%s | ${siteMetadata.title}` : `%s`}
      link={links}
      meta={[
        // Define the META description
        {
          name: `description`,
          content: pageDescription,
        },
      ].concat(meta)}
    />
  );
}

Seo.defaultProps = {
  lang: `en`,
  tags: [],
};

Seo.propTypes = {
  title: PropTypes.string.isRequired,
  // Optional: falls back to siteMetadata.description
  description: PropTypes.string,
  tags: PropTypes.arrayOf(PropTypes.string),
  canonicalURL: PropTypes.string,
  lang: PropTypes.string,
};

export default Seo;
```

This code uses react-helmet to define the specified tags.
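To see what the Seo component above ends up asking Helmet to render, here is a framework-free sketch of the same logic as a plain function. buildHead is hypothetical, and its title handling imitates Helmet's titleTemplate, where %s is replaced by the page title; the sample values are made up.

```javascript
// Framework-free sketch of the Seo component's logic (illustrative only).
function buildHead({ title, description, tags = [], canonicalURL }, siteMetadata = {}) {
  // Same template as the component: "<page title> | <site title>"
  const titleTemplate = siteMetadata.title ? `%s | ${siteMetadata.title}` : "%s";
  // The page description wins; otherwise fall back to the site-wide one
  const meta = [{ name: "description", content: description || siteMetadata.description }];
  if (tags.length > 0) {
    meta.push({ name: "keywords", content: tags.join(",") });
  }
  const links = canonicalURL ? [{ rel: "canonical", href: canonicalURL }] : [];
  // split/join substitutes %s without special-casing "$" in the title
  return { title: titleTemplate.split("%s").join(title), meta, links };
}

const head = buildHead(
  { title: "Hello", tags: ["gatsby", "seo"], canonicalURL: "https://site.com/hello" },
  { title: "My Site", description: "Site-wide description" },
);
console.log(head.title); // Hello | My Site
```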

You might have noticed that it uses a React hook, useSiteMetadata. I learned this trick from Scott Spence.

My code looks like so:

```javascript
import { useStaticQuery, graphql } from 'gatsby';

export const useSiteMetadata = () => {
  const { site } = useStaticQuery(
    graphql`
      query SiteMetaData {
        site {
          siteMetadata {
            title
            description
            author {
              name
              summary
              href
            }
            siteUrl
          }
        }
      }
    `,
  );
  return { siteMetadata: site.siteMetadata };
};
```

Don't forget to define the required metadata in gatsby-config.js:

```javascript
module.exports = {
  siteMetadata: {
    title: `Mihai Bojin's Digital Garden`,
    description: `A space for sharing important lessons I've learned from over 15 years in the tech industry.`,
    author: {
      name: `Mihai Bojin`,
      summary: `a passionate technical leader with expertise in designing and developing highly resilient backend systems for global companies.`,
      href: `/about`,
    },
    siteUrl: process.env.SITE_URL,
  },
};
```

And that's it! You will now have basic SEO for your GatsbyJS site by including this component in every article page, e.g.:

```jsx
<Seo title="Page title" description="Page description" tags={["tag1", "tag2"]} canonicalURL="https://site.com/page" />
```

Typically, these values are populated manually for static pages or passed via frontmatter for blog posts.

For example, to populate canonical links, I use a function that picks the appropriate value: the canonical URL from the frontmatter for blog posts, or the site URL plus the page's path as a default.

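```javascript
function renderCanonicalLink(frontmatter, siteUrl, location) {
  // Prefer an explicit canonical URL from the post's frontmatter;
  // otherwise, build one from the site URL and the current page's path
  return frontmatter?.canonical || (siteUrl + location.pathname);
}
```

This concludes my introductory post about Search Engine Optimization in GatsbyJS.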

If you'd like to learn more about this topic, please add a comment on Twitter to let me know! 📢

Thank you!

If you liked this article and want to read more like it, please subscribe to my newsletter; I send one out every few weeks!
