
OpenShift for Dummies - Part 2

Why Should I Use OpenShift?

In Kubernetes for Dummies, we talked about the need for a container orchestration system. In 2015, people were using many different orchestration systems, including Cloud Foundry, Mesosphere, Docker Swarm, and Kubernetes, to name a few. Today, the market has consolidated and Kubernetes (K8s) has come out on top. Red Hat bet early on K8s and is now its second-largest contributor and influencer of its direction, behind only Google. K8s is the kernel of distributed systems, while OpenShift is a distribution of it. What this means for developers is that whenever a new version of Kubernetes is available, Red Hat can take K8s from upstream, secure it, test it, and certify it with hardware and software vendors. In addition, Red Hat patches 97% of all security vulnerabilities within 24 hours and 99% within the first week, which sets Red Hat apart from its competition.

OpenShift, the Platform of the Future

OpenShift is a platform that can run on premises, in a virtual environment, in a private cloud, or in a public cloud. You can migrate your traditional applications, as well as software from independent software vendors, to OpenShift to get all of the advantages of containerization. You can also build cloud-native greenfield applications (greenfield describes a completely new project that has to be built from scratch) and integrate Machine Learning and Artificial Intelligence functions.

OpenShift also provides automated operations, multi-tenancy, secure-by-default capabilities, network traffic control, and the option for chargeback and showback. OpenShift is also pluggable, so you can introduce third-party security vendors if you wish. Developers get a self-service provisioning portal: operations teams define what is available, and developers request what they need within the limits the operations team has authorized. The platform is also versatile in where it runs: on most public cloud services, such as AWS, Azure, Google Cloud Platform, and IBM Cloud, and of course on premises as well.

OpenShift Demo

You can use the trial version of OpenShift by visiting:

https://www.redhat.com/en/products/trials?products=hybrid-cloud

For this demo, you will need a Red Hat account. We will select the option that plainly says ‘Red Hat OpenShift - An enterprise-ready Kubernetes container platform.’

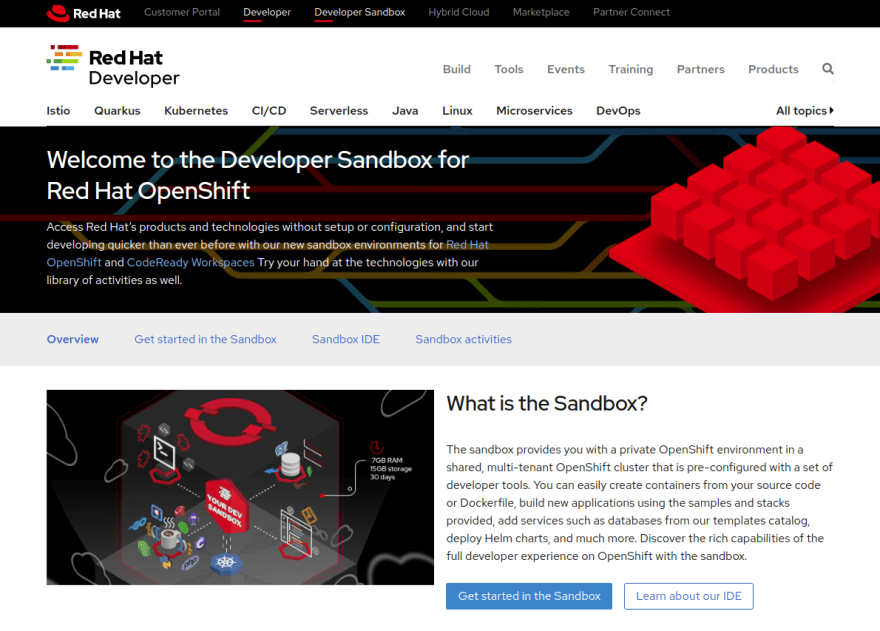

Select ‘Start your trial’ under ‘Developer Sandbox.’ The Developer Sandbox will suffice for this walkthrough. Please note that the account you create will be active for 30 days. At the end of the active period, your access will be deactivated and all your data on the Developer Sandbox will be deleted. Upon logging in, you should be brought to the Developer Sandbox page.

If you are not brought here, visit https://developers.redhat.com/developer-sandbox.

Click ‘Get started in the Sandbox’ and then ‘Launch your Developer Sandbox for Red Hat OpenShift’ and then ‘Start using your sandbox.’ You may also need to verify your email address to continue.

Welcome to OpenShift!

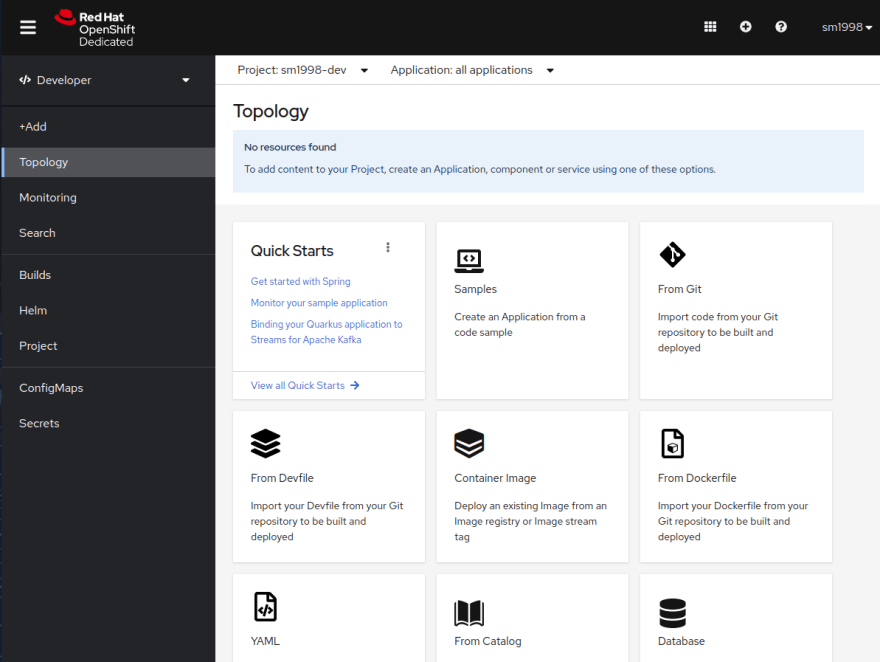

On the side bar you can see different options to select from...

The Administrator perspective can be used to manage workload storage, networking, cluster settings, and more. This may require additional user access.

Use the Developer perspective to build applications and associated components and services, define how they work together, and monitor their health over time.

Add

You can select a way to create an application component or service from one of the options.

Monitor

The monitoring tab allows you to monitor application metrics, create custom metrics queries, and view & silence alerts in your project.

Search

Search for resources in your Project by simply starting to type or by scrolling through a list of existing resources.

Now, switch to the Administrator perspective and look under Projects.

Now, change back to the Developer perspective. Under Topology, we can see that we currently do not have any workloads. OpenShift gives us many ways to create applications, components, and services using the options listed.

Let’s explore the catalog to see what we can choose from. With the developer catalog, the developer does not need to ask the infrastructure team for a new development environment, database, runtime, etc. Rather, the developer can choose from a list of pre-approved apps, services, or source-to-image builders. For our purposes, we will be using Python to create a front end. I will be using a sample random background color generator to demonstrate the use of Python in OpenShift. This app randomly generates a color and shows a welcome message to the user who opens the website. Simply type ‘Python’ in the developer catalog or find it under Languages > Python and click the option that plainly says ‘Python.’

Next, click ‘Create Application.’

From here, we will paste the link to the GitHub repository that holds the Python script we will use for our webpage: https://github.com/StevenMcGown/OpenShift_Demo

You can also change the name of the application if you wish. For our purposes, we will leave everything at the default settings. Once you click ‘Create’, OpenShift will begin to build the application. You can follow the build process from the sidebar by navigating to Builds > open-shift-demo > Builds > open-shift-demo-1 > Logs. In the log, we can see that OpenShift goes to the location of the source code and copies it. Once the source code is copied, it is analyzed and built into an application binary. Next, OpenShift creates a Dockerfile that installs all of the dependencies needed to run the application binary. The application and its dependencies are layered into a container image, which is stored in a registry built into OpenShift. Finally, the application is deployed from that registry.

Next, click on the Topology tab in the sidebar. We can see our Python application in a bubble with 3 smaller bubbles attached. The green check mark shows that the build was successful, and clicking on it takes us back to the build log we just saw.

The bubble on the bottom right with a red C allows us to edit our source code with CodeReady Workspaces, which lets you edit the code within the browser. CodeReady Workspaces will take some time to open, but once it does you should see an IDE similar to VS Code.

Looking back at the Topology of our application, we can see that a CodeReady Workspaces icon has been added to our project.

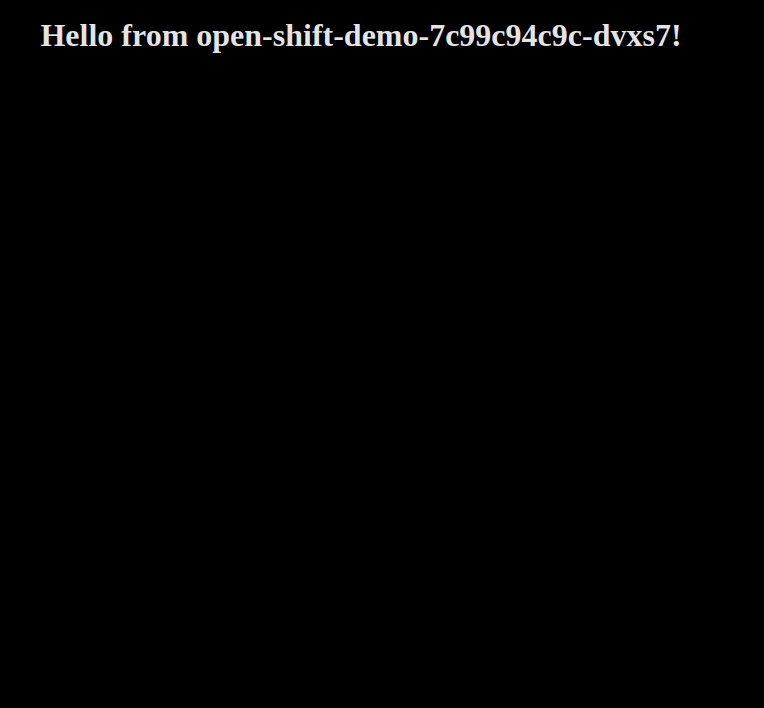

Clicking the bubble on the top right of the Python icon will open the application. In this instance, it picked green as the random color, and we are welcomed with a message from the application open-shift-demo, served by the pod whose name ends in ‘hkqbv’ under the ‘7c749ff559’ replica set.

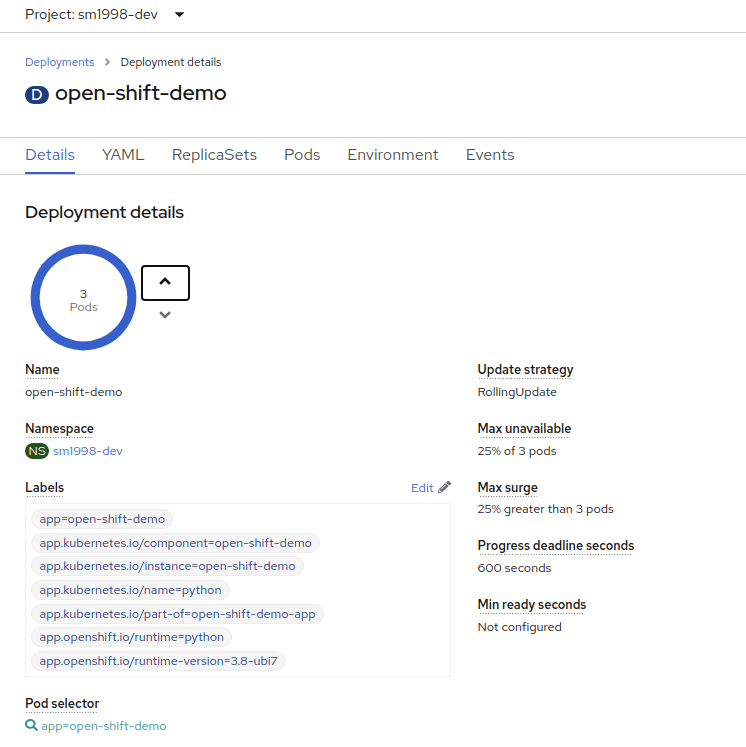

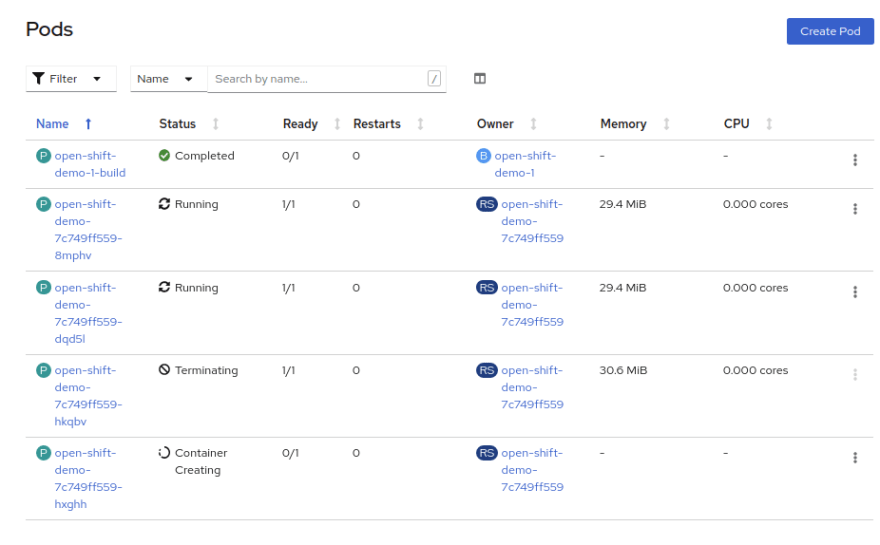
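To make the app's behavior a little more concrete, here is a minimal sketch of a page like this using only the Python standard library. This is not the code from the repository linked above; the color list, function names, message wording, and port 8080 are all assumptions made for illustration.

```python
import random
import socket
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical palette: the demo app draws from seven colors, but these
# specific values are placeholders, not the repository's list.
COLORS = ["red", "green", "blue", "purple", "orange", "pink", "teal"]


def pick_color(rng=random):
    """Choose the background color once, when the process starts."""
    return rng.choice(COLORS)


def render_page(color, hostname):
    """Build the HTML body: a colored background plus a welcome message."""
    return (
        f'<html><body style="background-color:{color}">'
        f"<h1>Welcome! You are being served by {hostname}</h1>"
        "</body></html>"
    )


def make_handler(color, hostname):
    """Create a request handler bound to this process's color and hostname."""

    class Handler(BaseHTTPRequestHandler):
        def do_GET(self):
            body = render_page(color, hostname).encode("utf-8")
            self.send_response(200)
            self.send_header("Content-Type", "text/html; charset=utf-8")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

    return Handler


def main():
    # Inside a pod, the hostname defaults to the pod name, so each replica
    # identifies itself differently in the welcome message.
    handler = make_handler(pick_color(), socket.gethostname())
    HTTPServer(("0.0.0.0", 8080), handler).serve_forever()  # port is an assumption
```

Calling `main()` starts the server. Because the color is picked once at startup, a given pod keeps the same color for as long as it lives.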

As administrators, we are interested in giving the application high availability by scaling it, controlling routing, etc. Let’s look at the application from an administrator’s perspective now. In the Administrator perspective, we can view our application pods by navigating to Workloads > Pods. Here we can see that only one pod is serving our application. If we want to increase our availability, we can navigate to Workloads > Deployments and increase the number of pods serving our application. As a reminder, a deployment manages a set of pods and ensures that a sufficient number of them are running at any one time to service an application. If you need to brush up on Kubernetes concepts such as deployments and pods, please read Kubernetes for Dummies.

Traditionally, if you wanted to increase the availability of your app, you would have to create an additional VM, create a load balancer, and install the application, and only then would you have high availability. In OpenShift, changing the availability is as simple as incrementing or decrementing the pod counter under ‘Deployment Details,’ which takes seconds. Each pod runs its own copy of the application, so with the counter set to 3, our application runs on 3 pods, each with its own random color.

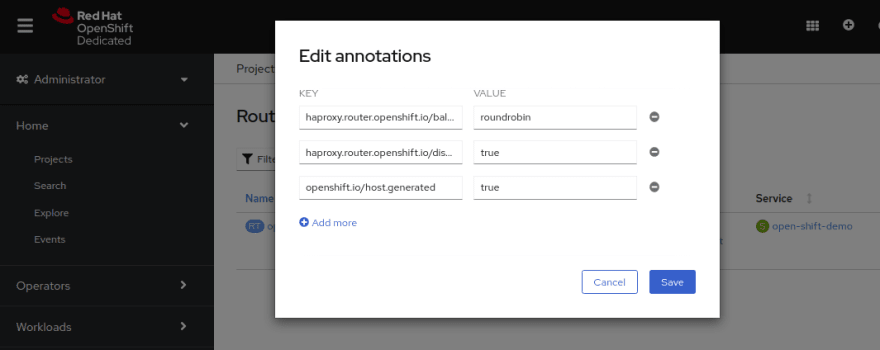

After refreshing your page, you may notice that the app never changes color… What gives? From a networking perspective, the default configuration is a sticky session, meaning that once a user connects to the application, they will always be served by the same pod. To change this, we will navigate to Networking > Routes and click on the 3 dots to edit annotations.

We will add these key-value pairs to our existing annotations:

haproxy.router.openshift.io/balance : roundrobin

haproxy.router.openshift.io/disable_cookies : true
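If you would rather keep these settings somewhere repeatable than click through the console, the same two annotations can be written out as a patch document. The sketch below only builds and prints the JSON; the helper name is made up for illustration, and the idea of feeding the output to something like `oc patch route <your-route> --type=merge -p '<json>'` is my assumption about how you might apply it, not a step from this walkthrough.

```python
import json

# The two annotations from the walkthrough. Annotation values must be
# strings, so "true" is quoted rather than written as a boolean.
ROUTE_ANNOTATIONS = {
    "haproxy.router.openshift.io/balance": "roundrobin",
    "haproxy.router.openshift.io/disable_cookies": "true",
}


def build_annotation_patch(annotations):
    """Wrap annotations in the metadata shape a merge patch expects.

    Hypothetical helper for illustration only.
    """
    return {"metadata": {"annotations": dict(annotations)}}


patch_json = json.dumps(build_annotation_patch(ROUTE_ANNOTATIONS), indent=2)
print(patch_json)
```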

For more information on the round-robin scheduling algorithm, visit this link:

https://en.wikipedia.org/wiki/Round-robin_scheduling#Network_packet_scheduling

When you refresh the page, you will receive a new message each time, indicating that a different pod is serving the application. The background color, however, might be the same as another pod's, since each copy of the app is initialized with a random color from an array of 7 colors.
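Here is a small sketch of the difference between the two routing behaviors, along with a quick calculation of how likely it is that two pods land on the same color. The pod names are placeholders, and the probability assumes each pod picks independently and uniformly from its color list, which is my assumption about the app.

```python
from fractions import Fraction
import itertools

PODS = ["pod-a", "pod-b", "pod-c"]  # hypothetical pod names


def sticky_backend(pods, requests):
    """Sticky session: every request goes to the pod chosen at first contact."""
    chosen = pods[0]
    return [chosen for _ in range(requests)]


def round_robin_backend(pods, requests):
    """Round robin: requests are handed to each pod in turn, wrapping around."""
    rotation = itertools.cycle(pods)
    return [next(rotation) for _ in range(requests)]


def prob_shared_color(num_pods, num_colors):
    """Chance that at least two pods picked the same color."""
    all_distinct = Fraction(1)
    for i in range(num_pods):
        all_distinct *= Fraction(num_colors - i, num_colors)
    return 1 - all_distinct


print(sticky_backend(PODS, 4))       # ['pod-a', 'pod-a', 'pod-a', 'pod-a']
print(round_robin_backend(PODS, 4))  # ['pod-a', 'pod-b', 'pod-c', 'pod-a']
print(prob_shared_color(3, 7))       # 19/49, a little under 39%
```

So with 3 pods and 7 colors, seeing a repeated color after a refresh is fairly common and does not mean the route change failed. The pod name in the welcome message is the more reliable thing to watch.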

Simulating a Crash

Let’s simulate one of the pods crashing to test our availability. In the Administrator perspective, navigate to Workloads > Pods. You should see 3 pods running under the same Replica Set, indicating that the pods were all created from the same template. Deleting one of these pods simulates that pod failing. When this happens, Kubernetes will immediately create a new pod to replace the one that failed. This shows that the controller is always comparing how many pods are running with how many are needed. In this case, K8s will detect that there are only 2 pods running and create a new pod to bring the count back to 3.

Because the old pod was deleted, a new pod was created, this one with a name ending in ‘hxghh’ and a purple background.
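The "running vs. needed" comparison can be sketched as a tiny control loop. This is a toy model of the idea, not how the Kubernetes controller is actually implemented; the function names and the way pod names are generated are assumptions for illustration.

```python
import random
import string


def new_pod_name(replica_set, rng):
    """Make up a pod name: the replica set name plus a random 5-character suffix."""
    alphabet = string.ascii_lowercase + string.digits
    suffix = "".join(rng.choice(alphabet) for _ in range(5))
    return f"{replica_set}-{suffix}"


def reconcile(running, desired, replica_set, rng):
    """One pass of the loop: compare running pods with the desired count and fix the gap."""
    pods = list(running)
    while len(pods) < desired:   # too few: create replacements
        pods.append(new_pod_name(replica_set, rng))
    while len(pods) > desired:   # too many: remove the extras
        pods.pop()
    return pods


rng = random.Random()
replica_set = "open-shift-demo-7c749ff559"  # name pieced together from the walkthrough

pods = reconcile([], 3, replica_set, rng)          # initial scale-up to 3 pods
survivors = pods[1:]                               # simulate deleting one pod
healed = reconcile(survivors, 3, replica_set, rng)
print(len(survivors), "->", len(healed))           # 2 -> 3
```

The two surviving pods are untouched and only the missing one is replaced, which is why the other pods kept their colors in the demo.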

Developer Updates

Let's suppose the developer of the application updates the source code. When this happens, OpenShift needs to reflect the changes made by the developer. We can do this by building the project again: go into the Developer perspective, click on the Python icon, and click 'Start Build.' In this case, I added black to the array of colors.

One thing to note is a feature OpenShift uses called 'rolling updates.' Rolling updates ensure seamless transitions from one version to the next. With rolling updates, new pods are commissioned while old ones are decommissioned one at a time until the update is complete. This way, there is never a loss of service for the end user. With some luck, we can now see the new background color on our web page, as added by the developer.
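Here is a toy model of that one-at-a-time replacement. It assumes the simplest policy (start one new pod, then retire one old pod); real deployments tune this behavior with settings such as `maxSurge` and `maxUnavailable`, and the pod names below are placeholders.

```python
def rolling_update(old_pods, new_version):
    """Replace pods one at a time, recording the pod set after every step."""
    pods = list(old_pods)
    history = [list(pods)]
    for index, old_pod in enumerate(old_pods):
        pods.append(f"{new_version}-pod-{index}")  # bring up the new pod first
        history.append(list(pods))
        pods.remove(old_pod)                       # then retire one old pod
        history.append(list(pods))
    return history


steps = rolling_update(["v1-pod-0", "v1-pod-1", "v1-pod-2"], "v2")
for step in steps:
    print(len(step), step)
```

In every recorded step there are at least 3 pods available, which is the "never a loss of service" property: the old version keeps serving traffic until its replacement is up.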

Conclusion

That's all I have for now! Thank you so much for reading part 2 of OpenShift for Dummies. I plan on making more of these in the future, but please let me know if you have any questions or concerns for these posts!

I hope you have enjoyed reading. If you did, please leave a like and a comment! Also, follow me on LinkedIn at https://www.linkedin.com/in/steven-mcgown/
