
Create a CI/CD Pipeline with GitHub Actions

Our team uses an EC2 instance as a test server, and we ran into two problems with it.

I guess it's time to learn how to set up a CI/CD pipeline. But first, what is CI/CD?

CI/CD is a method to frequently deliver apps to customers by introducing automation into the stages of app development. The main concepts attributed to CI/CD are continuous integration, continuous delivery, and continuous deployment. CI/CD is a solution to the problems integrating new code can cause for development and operations teams (AKA "integration hell").

source: What is CI/CD?

The pipeline makes this process seamless.

Before you begin, create an EC2 instance. Then, let's deploy a FastAPI application using Docker and GitHub Actions. (It doesn't have to be a FastAPI application; any backend application will do.) There are many ways to do this. I'll show you two of them.

Requirements

With docker-compose, you can define and run multi-container Docker applications. There are two services here.

    # docker-compose.yaml
    version: "3.3"

    services:
      mysqldb:
        image: "mysql"
        ports:
          - "3306:3306"
        volumes:
          - data:/var/lib/mysql  # MySQL's data directory in the official image
          - ./env/mysql.env:/env/mysql.env
        env_file:
          - ./env/mysql.env

      app:
        build: {the path where your Dockerfile is}
        restart: always
        ports:
          - "8000:8000"
        volumes:
          - ./env/.env:/env/.env
        env_file:
          - ./env/.env
        links:
          - mysqldb
        depends_on:
          - mysqldb

    volumes:
      data:

The first one is the MySQL database. With image: "mysql", you pull the MySQL image from Docker Hub, so you don't have to install MySQL on your EC2 machine. If you want a specific version, use image: "mysql:{version}".

We are setting up a CI/CD pipeline. If the data in the database were lost on every deploy, it wouldn't be a seamless experience, right? To persist the data, use a named volume mounted at the directory where MySQL stores its data (/var/lib/mysql in the official image).

      mysqldb:
        image: "mysql"
        ports:
          - "3306:3306"
        volumes:
          - data:/var/lib/mysql  # MySQL's data directory in the official image
          - ./env/mysql.env:/env/mysql.env
        env_file:
          - ./env/mysql.env
    ...
    volumes:
      data:

The next one is your application. Make sure that build: points to the directory where your Dockerfile is.

      app:
        build: ./
        restart: always
        ports:
          - "8000:8000"
        volumes:
          - ./env/.env:/env/.env
        env_file:
          - ./env/.env
        links:
          - mysqldb
        depends_on:
          - mysqldb

For security reasons, it is not a good idea to commit your configuration file to GitHub. However, we still want to automate the deployment job with GitHub Actions. If we gitignore the configuration file, the application cannot start because it can't find and import the file.

To avoid this problem, run git pull on your EC2 machine and create the configuration file both on the machine (with the config variables) and in the container (empty). With a bind mount, the file on the host machine is shared with the container running on it.
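To make the empty-file trick concrete, here is a minimal Python sketch of how an application could read such a file. `load_env_file` is a hypothetical helper (it is not part of my project or of FastAPI), and the values in the demo are placeholders. With only the empty placeholder from the image, the app starts but sees no settings. Once the host file is bind-mounted over it, the same code picks up the real values.

```python
import tempfile
from pathlib import Path


def load_env_file(path):
    """Parse KEY=VALUE lines from an env file into a dict.

    Blank lines, comment lines starting with '#', and lines
    without '=' are skipped. (Hypothetical helper for illustration.)
    """
    values = {}
    for raw_line in Path(path).read_text().splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        # Split on the first '=' only, so values may contain '='.
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        env_path = Path(tmp) / ".env"

        # 1) The empty placeholder that `touch` creates in the image.
        env_path.touch()
        print(load_env_file(env_path))

        # 2) The host file that the bind mount puts in its place
        #    (placeholder values, not real secrets).
        env_path.write_text("JWT_ALGORITHM=HS256\nJWT_SECRET_KEY=change-me\n")
        print(load_env_file(env_path))
```

The point of the sketch is only that the file must exist at the expected path inside the container. What is in it comes from the host.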

    # Dockerfile
    FROM python:3.9

    WORKDIR /

    ENV DOCKERIZE_VERSION v0.2.0
    RUN wget https://github.com/jwilder/dockerize/releases/download/$DOCKERIZE_VERSION/dockerize-linux-amd64-$DOCKERIZE_VERSION.tar.gz \
        && tar -C /usr/local/bin -xzvf dockerize-linux-amd64-$DOCKERIZE_VERSION.tar.gz

    COPY ./requirements.txt /requirements.txt
    RUN pip install --upgrade pip
    RUN pip install --no-cache-dir --upgrade -r /requirements.txt

    COPY . .

    RUN touch env/.env
    RUN touch env/mysql.env

    RUN chmod +x docker-entrypoint.sh
    ENTRYPOINT ./docker-entrypoint.sh

    EXPOSE 8000
    CMD ["python", "app/main.py"]

    # docker-compose.yaml
    ...
        volumes:
          ...
          - ./env/mysql.env:/env/mysql.env
    ...
        volumes:
          - ./env/.env:/env/.env
    ...

    # Dockerfile
    ...
    RUN touch env/.env # creates env file in container
    RUN touch env/mysql.env # creates env file in container
    ...

Note that I'm setting up a development environment here. Bind mounts are not meant to be used in production.

These are my environment files on the EC2 machine.

    # env/.env
    JWT_ALGORITHM=****
    JWT_SECRET_KEY=****
    SQLALCHEMY_DATABASE_URL=mysql+pymysql://{MYSQL_USER}:{MYSQL_PASSWORD}@mysqldb:3306/{MYSQL_DATABASE}

    # env/mysql.env
    MYSQL_USER=****
    MYSQL_PASSWORD=****
    MYSQL_ROOT_PASSWORD=****
    MYSQL_DATABASE=****

Compose automatically creates a network for the services defined in docker-compose.yaml, and each container can be reached by its service name (depends_on: mysqldb only controls the startup order). Therefore we can define the database URL using the service name from docker-compose.yaml (mysqldb).

    # docker-entrypoint.sh
    dockerize -wait tcp://mysqldb:3306 -timeout 20s
    echo "Start server"
    alembic upgrade head
    python /app/main.py

First, clone your repository on your EC2 machine and create the env files.

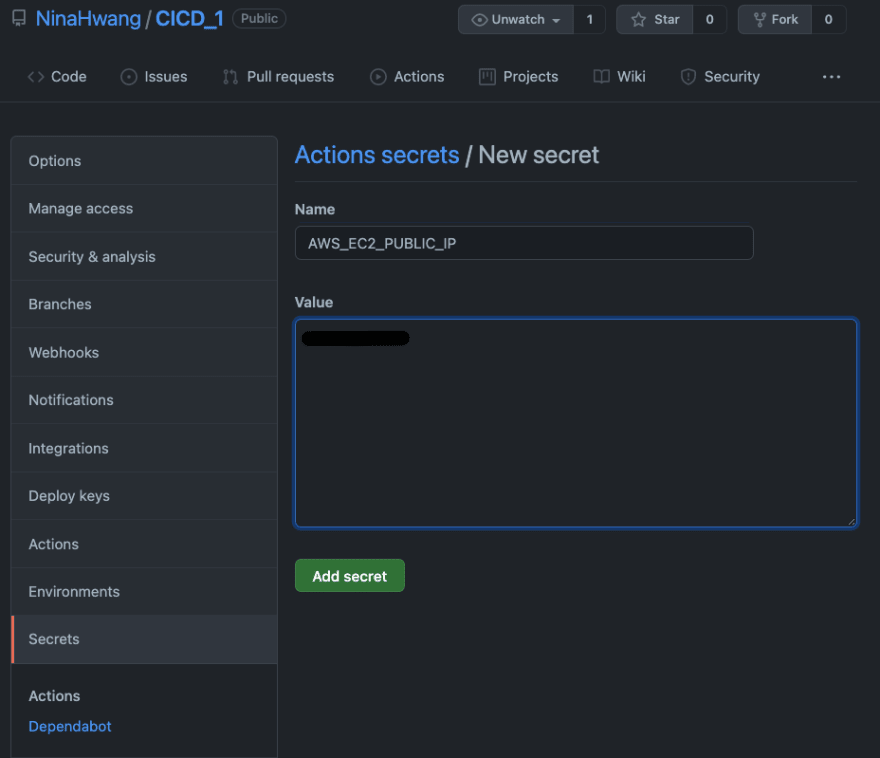

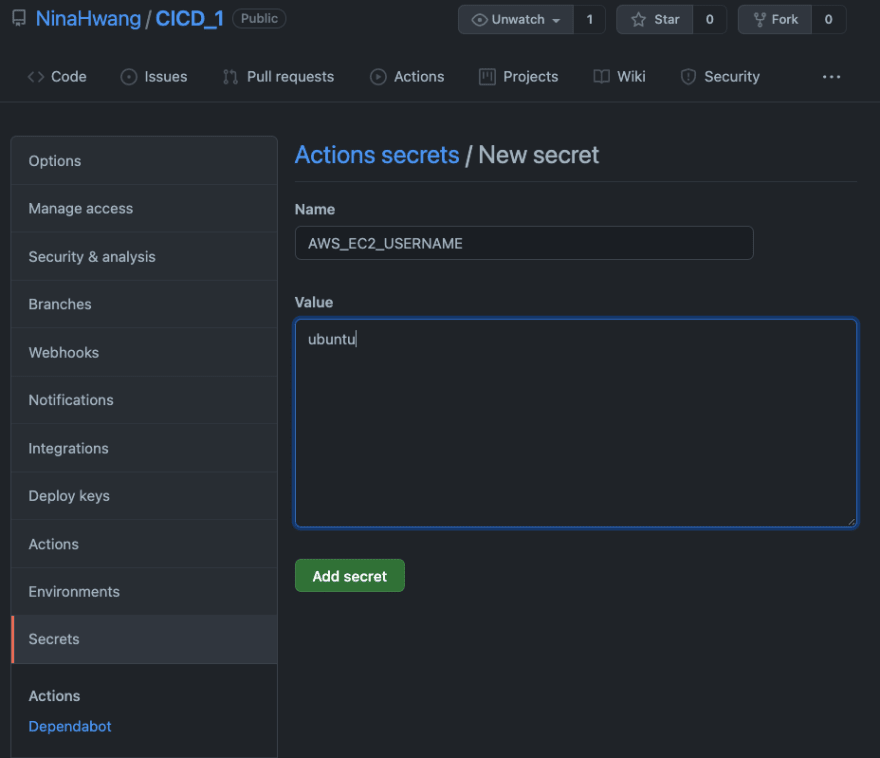

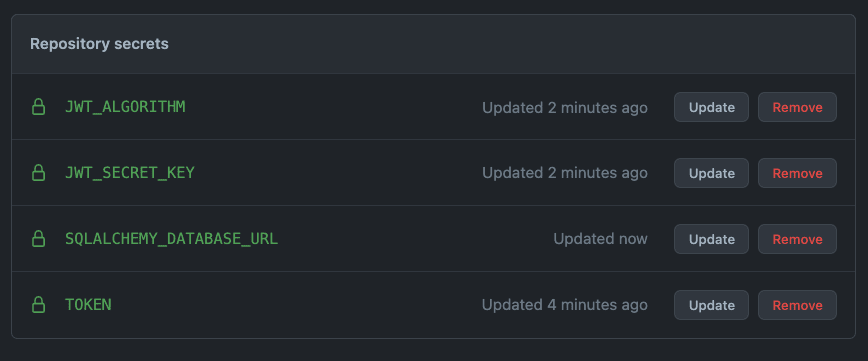
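A side note on docker-entrypoint.sh before moving on: the `dockerize -wait` line holds the app back until MySQL accepts TCP connections, because the database container can take a while to become ready after it starts. As a rough illustration of that idea (not how dockerize itself is implemented), the same wait can be sketched in Python with only the standard library. `wait_for_port` is a hypothetical name, and the demo uses a throwaway local listener instead of mysqldb:3306 so it runs anywhere.

```python
import socket
import time


def wait_for_port(host, port, timeout=20.0, interval=0.5):
    """Return True once a TCP connection to host:port succeeds.

    Retries every `interval` seconds and returns False if no
    connection succeeds before `timeout` seconds have passed.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Connection refused, DNS failure, or connect timeout.
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)


if __name__ == "__main__":
    # Inside the app container the call would be
    # wait_for_port("mysqldb", 3306, timeout=20.0),
    # matching the entrypoint script. Here we wait for a local listener.
    with socket.socket() as server:
        server.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
        server.listen()
        port = server.getsockname()[1]
        print(wait_for_port("127.0.0.1", port, timeout=2.0))
```

Keep in mind that an open port only means the server is listening. Running the migrations right after, as the entrypoint script does with alembic, is the real check that the database is usable.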

Then, let's set the Actions secrets. In your repository, go to Settings > Secrets.

By clicking New repository secret, you can define environment variables that are used during GitHub Actions runs. Add your application environment values and EC2 credentials.

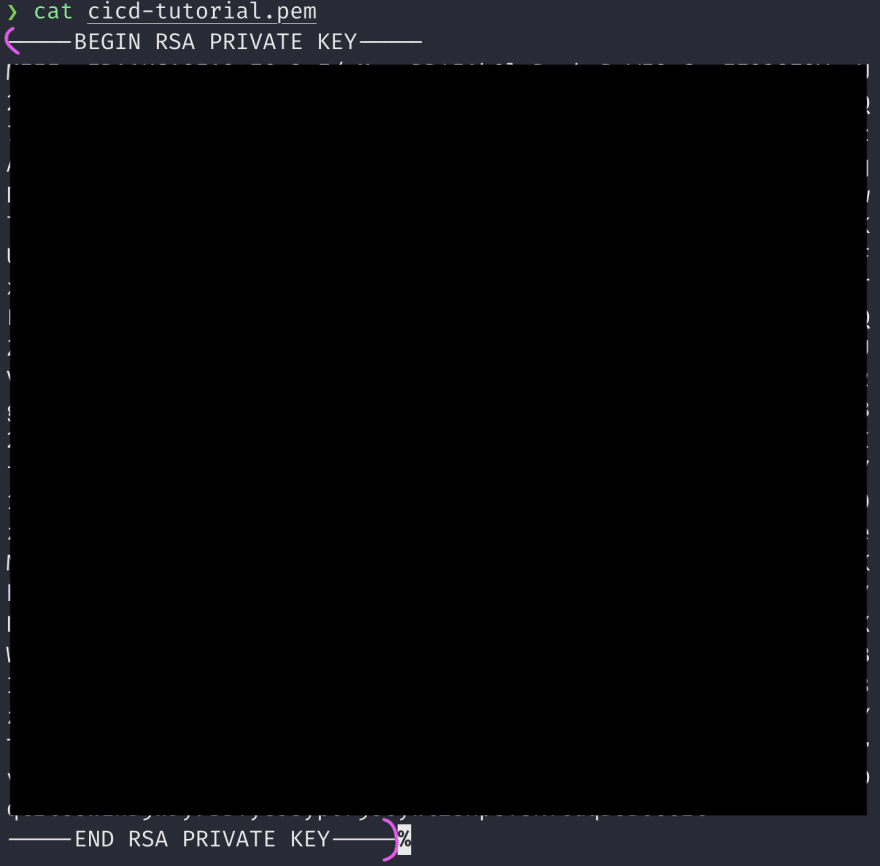

And for AWS_EC2_PEM, put this.

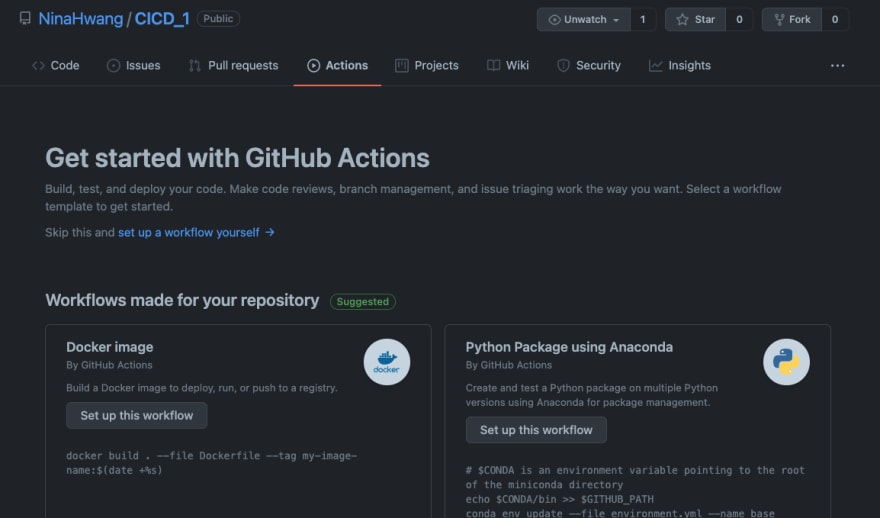

Click

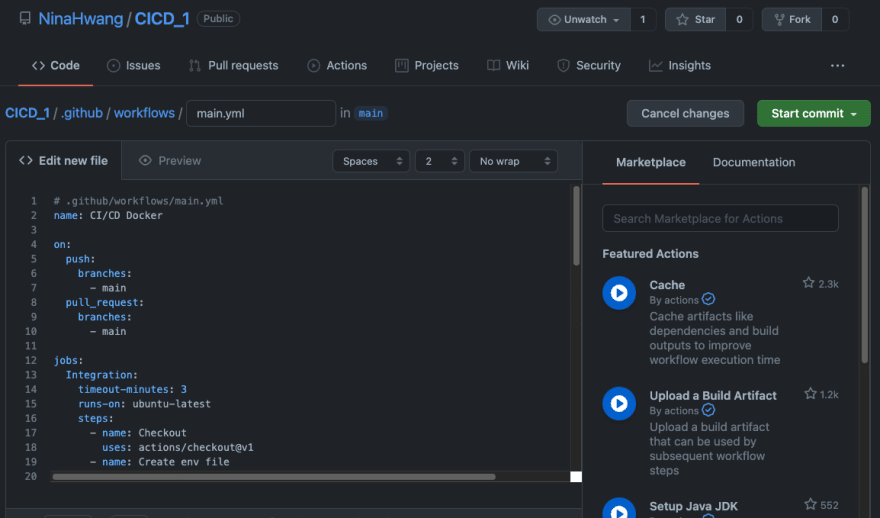

set up a workflow your self and create Github workflow.

    # .github/workflows/main.yml
    name: CI/CD Docker

    on:
      push:
        branches:
          - main
      pull_request:
        branches:
          - main

    jobs:
      Integration:
        timeout-minutes: 3
        runs-on: ubuntu-latest
        steps:
          - name: Checkout
            uses: actions/checkout@v1

          - name: Create env file
            env:
              JWT_ALGORITHM: ${{ secrets.JWT_ALGORITHM }}
              JWT_SECRET_KEY: ${{ secrets.JWT_SECRET_KEY }}
              SQLALCHEMY_DATABASE_URL: ${{ secrets.SQLALCHEMY_DATABASE_URL }}
              MYSQL_USER: ${{ secrets.MYSQL_USER }}
              MYSQL_PASSWORD: ${{ secrets.MYSQL_PASSWORD }}
              MYSQL_ROOT_PASSWORD: ${{ secrets.MYSQL_ROOT_PASSWORD }}
              MYSQL_DATABASE: ${{ secrets.MYSQL_DATABASE }}
            run: |
              mkdir env
              touch ./env/.env
              echo JWT_ALGORITHM="$JWT_ALGORITHM" >> ./env/.env
              echo JWT_SECRET_KEY="$JWT_SECRET_KEY" >> ./env/.env
              echo SQLALCHEMY_DATABASE_URL="$SQLALCHEMY_DATABASE_URL" >> ./env/.env
              ls -a
              cat env/.env
              touch ./env/mysql.env
              echo MYSQL_USER="$MYSQL_USER" >> ./env/mysql.env
              echo MYSQL_PASSWORD="$MYSQL_PASSWORD" >> ./env/mysql.env
              echo MYSQL_ROOT_PASSWORD="$MYSQL_ROOT_PASSWORD" >> ./env/mysql.env
              echo MYSQL_DATABASE="$MYSQL_DATABASE" >> ./env/mysql.env
              ls -a
              cat env/mysql.env
            shell: bash

          - name: Start containers
            run: docker-compose up -d

      Deployment:
        needs: Integration
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v2

          - name: Git pull
            env:
              AWS_EC2_PEM: ${{ secrets.AWS_EC2_PEM }}
              AWS_EC2_PUBLIC_IP: ${{ secrets.AWS_EC2_PUBLIC_IP }}
              AWS_EC2_USERNAME: ${{ secrets.AWS_EC2_USERNAME }}
            run: |
              pwd
              echo "$AWS_EC2_PEM" > private_key && chmod 600 private_key
              ssh -o StrictHostKeyChecking=no -i private_key ${AWS_EC2_USERNAME}@${AWS_EC2_PUBLIC_IP} '
                cd {/path/to/your/project/directory} &&
                git checkout main &&
                git fetch --all &&
                git reset --hard origin/main &&
                git pull origin main &&
                docker-compose up -d --build
              '

Here, I will use GHCR (GitHub Container Registry). A container registry is a repository (or a collection of repositories) used to store container images.

Requirements

This time I'm going to use RDS instead of MySQL container, so it is much more simple to dockerize the app compared to the previous one.

FROM python:3.9

WORKDIR /

COPY ./requirements.txt /requirements.txt

RUN pip install --no-cache-dir --upgrade -r /requirements.txt

COPY . .

EXPOSE 8000

RUN touch .env

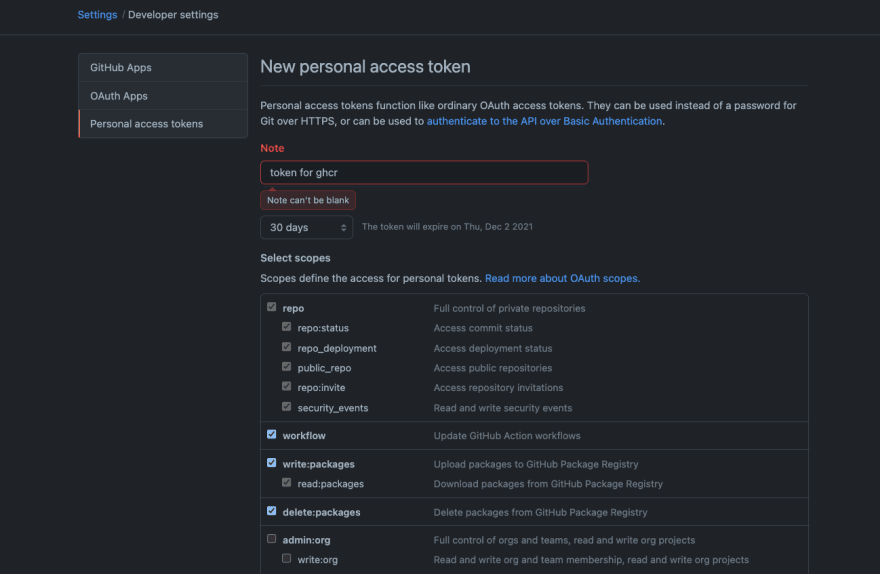

CMD ["python", "app/main.py"]Generate a token for github action. Click your profile and go to Settings > Developer settings > Personal access tokens.

Among scopes, select

repo, workflow, and packages.Copy the token, paste it to actions secrets, and add other secrets.

In the github repository, go to Settings > Actions > Runners, and click

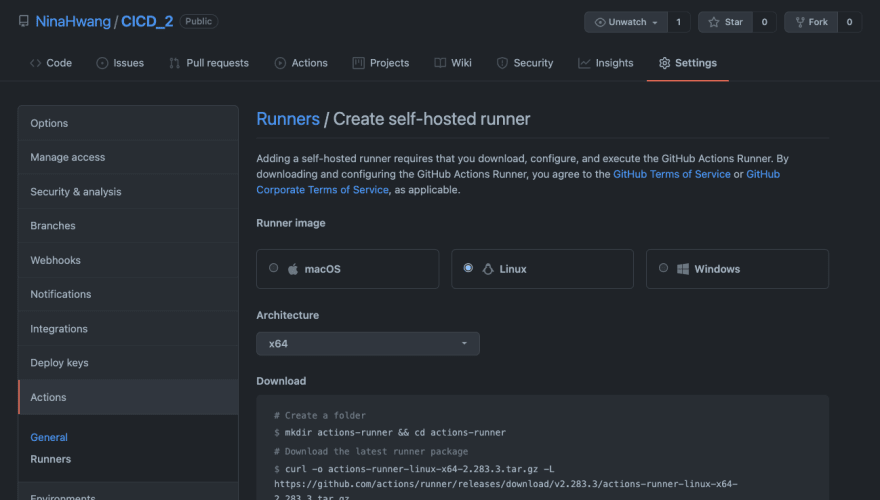

New self-hosted runner.Choose the right OS of your EC2 machine, and in the machine, do as the guidance says.

Before running it using

./run.sh command, let's configure workflow file.name: CI/CD Docker

    on:
      push:
        branches:
          - main

    env:
      DOCKER_IMAGE: ghcr.io/ninahwang/cicd_2 # this should be lower case !
      VERSION: ${{ github.sha }}

    jobs:
      build:
        name: Build
        runs-on: ubuntu-latest
        steps:
          - name: Check out source code
            uses: actions/checkout@v2

          - name: Set up docker buildx
            id: buildx
            uses: docker/setup-buildx-action@v1

          - name: Cache docker layers
            uses: actions/cache@v2
            with:
              path: /tmp/.buildx-cache
              key: ${{ runner.os }}-buildx-${{ env.VERSION }}
              restore-keys: |
                ${{ runner.os }}-buildx-

          - name: Login
            uses: docker/login-action@v1
            with:
              registry: ghcr.io
              username: ${{ github.actor }}
              password: ${{ secrets.TOKEN }}

          - name: Build and push
            id: docker_build
            uses: docker/build-push-action@v2
            with:
              builder: ${{ steps.buildx.outputs.name }}
              push: ${{ github.event_name != 'pull_request' }}
              tags: ${{ env.DOCKER_IMAGE }}:${{ env.VERSION }}

      deploy:
        needs: build
        name: Deploy
        runs-on: [self-hosted]
        steps:
          - name: Login to ghcr
            uses: docker/login-action@v1
            with:
              registry: ghcr.io
              username: ${{ github.actor }}
              password: ${{ secrets.TOKEN }}

          - name: Create .env file
            env:
              JWT_ALGORITHM: ${{ secrets.JWT_ALGORITHM }}
              JWT_SECRET_KEY: ${{ secrets.JWT_SECRET_KEY }}
              SQLALCHEMY_DATABASE_URL: ${{ secrets.SQLALCHEMY_DATABASE_URL }}
            run: |
              touch .env
              echo JWT_ALGORITHM="$JWT_ALGORITHM" >> .env
              echo JWT_SECRET_KEY="$JWT_SECRET_KEY" >> .env
              echo SQLALCHEMY_DATABASE_URL="$SQLALCHEMY_DATABASE_URL" >> .env
            shell: bash

          - name: Docker run
            run: |
              docker ps -q --filter "name=cicd_2" | grep -q . && docker stop cicd_2 && docker rm -fv cicd_2
              docker run -p 8000:8000 -d -v "$(pwd)/.env:/.env" --restart always --name cicd_2 ${{ env.DOCKER_IMAGE }}:${{ env.VERSION }}

Now run ./run.sh.
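One detail from the workflow above is worth spelling out: the image name in DOCKER_IMAGE has to be lowercase, while the tag is just the commit SHA. If your GitHub user or repository name contains capital letters, a small helper like the one below could build the reference. This is only a sketch; `ghcr_image_ref` and the mixed-case inputs are made up for illustration.

```python
def ghcr_image_ref(owner, name, tag):
    """Build a GHCR image reference: ghcr.io/<owner>/<name>:<tag>.

    Only the repository part is lowercased, because image
    repository names must be lowercase. The tag is left as-is.
    """
    if not tag:
        raise ValueError("tag must not be empty")
    repository = f"{owner}/{name}".lower()
    return f"ghcr.io/{repository}:{tag}"


if __name__ == "__main__":
    # Hypothetical owner/repo spelling and a shortened, made-up commit SHA.
    print(ghcr_image_ref("NinaHwang", "CICD_2", "0123abc"))
    # prints: ghcr.io/ninahwang/cicd_2:0123abc
```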