
Introduction to DevOps on AWS | AWS Whitepaper Summary

- AWS capabilities that help you accelerate your DevOps journey, and how AWS services can help remove the undifferentiated heavy lifting associated with DevOps adoption.

- How to build a continuous integration and delivery capability without managing servers or build nodes, and how to leverage Infrastructure as Code to provision and manage your cloud resources in a consistent and repeatable manner.

DevOps is the combination of cultural philosophies, engineering practices and patterns, and tools that increase an organization's ability to deliver applications and services at high velocity and with better quality. Over time, several essential practices have emerged when adopting DevOps:

- Continuous Integration

- Continuous Delivery

- Infrastructure as Code

- Monitoring and Logging

- Communication and Collaboration

- Security

Continuous Integration (CI) is a software development practice where developers regularly merge their code changes into a central code repository, after which automated builds and tests are run. CI helps find and address bugs quicker, improve software quality, and reduce the time it takes to validate and release new software updates.


AWS CodeCommit: a secure, highly scalable, managed source control service that hosts private Git repositories. Some benefits of using AWS CodeCommit are:

- Collaboration: CodeCommit is designed for collaborative software development, where you can easily commit, branch, and merge your code to maintain control of your team’s projects.

- Encryption: your repositories are transferred using HTTPS or SSH and automatically encrypted at rest through AWS Key Management Service (AWS KMS).

- Access Control: CodeCommit uses AWS Identity and Access Management (IAM) to control access to your repositories, and you can monitor your repositories through AWS CloudTrail and Amazon CloudWatch.

- High Availability and Durability: CodeCommit stores your repositories in Amazon Simple Storage Service (S3) and Amazon DynamoDB.

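- Notifications and Custom Scripts: receive notifications through Amazon Simple Notification Service (Amazon SNS) for events impacting your repositories, and use repository triggers to invoke AWS Lambda functions in response to the repository events you choose.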

AWS CodeBuild: a fully managed continuous integration service that compiles source code, runs tests, and produces software packages that are ready to deploy.

AWS CodeArtifact: a fully managed artifact repository service that organizations can use to securely store, publish, and share the software packages used in their software development process.
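To make the CodeBuild step concrete, here is a minimal sketch of a buildspec, the file CodeBuild reads to know which commands to run in each build phase. It is written as a Python dict and printed as JSON, which should also parse as YAML if you save it as `buildspec.yml`. The project layout (a `requirements.txt` and tests runnable with `pytest`) and the Python runtime version are assumptions for illustration only.

```python
import json


def make_buildspec(python_version: str = "3.11") -> dict:
    """Return a CodeBuild buildspec (version 0.2 schema) as a plain dict."""
    return {
        "version": 0.2,
        "phases": {
            # Select the language runtime on a CodeBuild standard image.
            "install": {"runtime-versions": {"python": python_version}},
            # Hypothetical project: dependencies are listed in requirements.txt.
            "pre_build": {"commands": ["pip install -r requirements.txt"]},
            # Run the unit tests; a failing command fails the build phase.
            "build": {"commands": ["python -m pytest"]},
        },
        # Files handed on as the build output artifact (e.g. to a deploy stage).
        "artifacts": {"files": ["**/*"]},
    }


# JSON also parses as YAML, so this output can be saved as buildspec.yml.
print(json.dumps(make_buildspec(), indent=2))
```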

Continuous delivery (CD) is a software development practice where code changes are automatically prepared for a release to production. It expands upon continuous integration by deploying all code changes to a testing environment and/or a production environment after the build stage.


AWS CodeDeploy: a fully managed deployment service that automates software deployments to a variety of compute services such as Amazon Elastic Compute Cloud (Amazon EC2), AWS Fargate, AWS Lambda, and your on-premises servers. CodeDeploy has several benefits such as:

- Automated Deployments: CodeDeploy fully automates software deployments, allowing you to deploy reliably and rapidly.

- Centralized control: CodeDeploy enables you to easily launch and track the status of your application deployments through the AWS Management Console or the AWS CLI.

- Minimize downtime: CodeDeploy helps maximize your application availability by introducing changes incrementally, tracking application health according to configurable rules, and letting you easily stop and roll back a deployment if there are errors.

- Easy to adopt: CodeDeploy works with any application, and provides the same experience across different platforms and languages with easy integration with your existing continuous delivery toolchain.
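CodeDeploy takes its per-deployment instructions from an AppSpec file included in the application revision. The sketch below builds a minimal EC2/On-Premises AppSpec as a Python dict. The destination directory and every script under `scripts/` are hypothetical placeholders; the printed JSON also parses as YAML, though in practice you would likely write `appspec.yml` by hand.

```python
import json


def make_appspec(destination: str = "/var/www/html") -> dict:
    """Return an EC2/On-Premises AppSpec file as a plain dict.

    The script paths are hypothetical and must exist in your revision bundle.
    """
    return {
        "version": 0.0,
        "os": "linux",
        # Copy the whole revision into the destination directory.
        "files": [{"source": "/", "destination": destination}],
        # During an in-place deployment these hooks run in this order
        # (AfterInstall and load balancer hooks are omitted here).
        "hooks": {
            "ApplicationStop": [{"location": "scripts/stop_server.sh", "timeout": 300}],
            "BeforeInstall": [
                {"location": "scripts/install_dependencies.sh", "timeout": 300, "runas": "root"}
            ],
            "ApplicationStart": [{"location": "scripts/start_server.sh", "timeout": 300}],
            # A failing validation script fails the deployment, which can trigger a rollback.
            "ValidateService": [{"location": "scripts/validate_service.sh", "timeout": 120}],
        },
    }


print(json.dumps(make_appspec(), indent=2))
```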


AWS CodePipeline: a service that enables you to model, visualize, and automate the steps required to release your software. With CodePipeline, you model the full release process for building your code, deploying to pre-production environments, testing your application, and releasing it to production. CodePipeline has several benefits such as:

- Rapid Delivery: CodePipeline automates your software release process, allowing you to rapidly release new features to your users.

- Improved Quality: CodePipeline enables you to increase the speed and quality of your software updates by automating your build, test, and release processes.

- Easy to Integrate: CodePipeline can easily be extended to adapt to your specific needs. You can use the pre-built plugins or your own custom plugins in any step of your release process.

- Configurable Workflow: CodePipeline enables you to model the different stages of your software release process using the console interface, the AWS CLI, AWS CloudFormation, or the AWS SDKs.
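The core idea of a release pipeline, where each stage runs only after the previous one succeeds, can be modeled in a few lines. This is a toy local model, not the CodePipeline API: the stage names and the lambda actions are hypothetical stand-ins for real source, build, test, and deploy actions.

```python
from typing import Callable

# A stage is a name plus an action that returns True on success.
Stage = tuple[str, Callable[[], bool]]


def run_pipeline(stages: list[Stage]) -> tuple[str, list[str]]:
    """Run stages in order and stop at the first failure."""
    completed: list[str] = []
    for name, action in stages:
        if not action():
            # Later stages (e.g. production) never run after a failure.
            return f"FAILED at {name}", completed
        completed.append(name)
    return "SUCCEEDED", completed


stages = [
    ("Source", lambda: True),     # e.g. fetch the latest commit
    ("Build", lambda: True),      # e.g. compile and run unit tests
    ("Staging", lambda: False),   # e.g. integration tests fail
    ("Production", lambda: True),
]
print(run_pipeline(stages))  # ('FAILED at Staging', ['Source', 'Build'])
```

Gating each stage on the previous one is what keeps a failed staging test from ever reaching production.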

Deployment Strategies define how you want to deliver your software. Organizations follow different deployment strategies based on their business model.

Some may choose to deliver only software that is fully tested, while others may want their users to provide feedback by evaluating features that are still under development (for example, beta releases). Deployment strategies to consider:

- In-Place Deployments: the application on each instance in the deployment group is stopped, the latest application revision is installed, and the new version of the application is started and validated. The same deployment strategies available for in-place deployments are also available within blue/green deployments.

- Blue/Green Deployments: a technique for releasing applications by shifting traffic between two identical environments running different versions of the application. It helps you minimize downtime during application updates and mitigates the risks surrounding downtime and rollback. It also enables you to launch a new version (green) of your application alongside the old version (blue), and to monitor and test the new version before you reroute traffic to it, rolling back if an issue is detected.

- Canary Deployments: a more risk-averse blue/green strategy that uses a phased approach. New application code is deployed and exposed for trial, and upon acceptance it is rolled out to the rest of the environment, either in a second step or in a linear fashion.

- Linear Deployments: Linear deployments mean that traffic is shifted in equal increments with an equal number of minutes between each increment. You can choose from predefined linear options that specify the percentage of traffic shifted in each increment and the number of minutes between each increment.

- All-at-once Deployments: All-at-once deployments mean that all traffic is shifted from the original environment to the replacement environment all at once.
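The canary, linear, and all-at-once strategies differ only in how traffic moves to the new version over time. The sketch below computes a simplified schedule of (minute, percentage of traffic on the new version) steps. The `strategy` names and parameters are this example's own, loosely modeled on CodeDeploy's predefined configurations such as `CodeDeployDefault.LambdaCanary10Percent5Minutes` and `CodeDeployDefault.LambdaLinear10PercentEvery1Minute`.

```python
def traffic_schedule(strategy: str, percent: int = 10, minutes: int = 1) -> list[tuple[int, int]]:
    """Return (minute, % of traffic on the new version) steps (simplified model)."""
    if not 0 < percent <= 100:
        raise ValueError("percent must be between 1 and 100")
    if strategy == "all-at-once":
        # Everything moves to the replacement environment immediately.
        return [(0, 100)]
    if strategy == "canary":
        # Two steps: shift `percent`, wait `minutes`, then shift the rest.
        return [(0, percent), (minutes, 100)]
    if strategy == "linear":
        # Equal increments of `percent`, with `minutes` between increments.
        steps, shifted, t = [], 0, 0
        while shifted < 100:
            shifted = min(100, shifted + percent)
            steps.append((t, shifted))
            t += minutes
        return steps
    raise ValueError(f"unknown strategy: {strategy}")


print(traffic_schedule("canary", 10, 5))  # [(0, 10), (5, 100)]
print(traffic_schedule("linear", 25, 3))  # [(0, 25), (3, 50), (6, 75), (9, 100)]
```

A canary exposes a small slice of users for one observation window, while a linear rollout spreads the risk over many smaller increments.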

The following matrix lists the supported deployment strategies for Amazon Elastic Container Service (Amazon ECS), AWS Lambda, and Amazon EC2/On-Premises.

Amazon ECS is a fully managed orchestration service.

AWS Lambda lets you run code without provisioning or managing servers.

Amazon EC2 enables you to run secure, resizable compute capacity in the cloud.

| Deployment strategy | Amazon ECS | AWS Lambda | Amazon EC2/On-Premises |
|---|---|---|---|
| In-Place | ✓ | ✓ | ✓ |
| Blue/Green | ✓ | ✓ | ✓* |
| Canary | ✓ | ✓ | X |
| Linear | ✓ | ✓ | X |
| All-at-Once | ✓ | ✓ | X |

*Blue/green deployment on the EC2/On-Premises compute platform works only with Amazon EC2 instances, not with on-premises servers.


AWS Elastic Beanstalk supports the following types of deployment strategies:

- All-at-Once: Performs an in-place deployment on all instances at the same time.

- Rolling: Splits the instances into batches and deploys to one batch at a time.

- Rolling with Additional Batch: Splits the deployment into batches, but for the first batch creates new EC2 instances instead of deploying to the existing EC2 instances, so full capacity is maintained during the deployment.

- Immutable: Deploys the new version to a fresh set of new instances instead of updating the existing instances.

- Traffic Splitting: Performs an immutable deployment and then forwards a percentage of traffic to the new instances for a predetermined period of time. If the new instances stay healthy, Elastic Beanstalk forwards all traffic to them and shuts down the old instances.
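The practical difference between Rolling and Rolling with Additional Batch is how much capacity stays in service while batches are updated. The function below is a simplified model that ignores health checks, launch time, and the Immutable and Traffic Splitting options; `rolling_capacity` is a hypothetical helper, not part of any Elastic Beanstalk API.

```python
def rolling_capacity(instances: int, batch_size: int, additional_batch: bool = False) -> list[int]:
    """Instances serving traffic at each step of a simplified rolling deployment."""
    # Sizes of the batches of original instances, e.g. 5 instances, batch 2 -> [2, 2, 1].
    sizes = [min(batch_size, instances - start) for start in range(0, instances, batch_size)]
    if not additional_batch:
        # Plain rolling: the batch being updated is out of service.
        return [instances - size for size in sizes]
    # Additional batch: new instances are launched and updated first while every
    # original instance keeps serving, then the original batches are rolled
    # with the extra batch already in service.
    return [instances] + [instances + batch_size - size for size in sizes]


print(rolling_capacity(5, 2))                         # [3, 3, 4]
print(rolling_capacity(5, 2, additional_batch=True))  # [5, 5, 5, 6]
```

With five instances and a batch size of two, plain rolling drops to three serving instances, while the additional batch keeps at least five in service at every step.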

- A fundamental principle of DevOps is to treat infrastructure the same way developers treat code.

- When code is compiled or built into applications, we expect a consistent application to be created, and we expect the build to be repeatable and reliable.

- All configurations should be defined in a declarative way and stored in a source control system such as AWS CodeCommit, the same as application code. Infrastructure provisioning, orchestration, and deployment should also support infrastructure as code.

- AWS provides services that enable the creation, deployment and maintenance of infrastructure in a programmatic, descriptive, and declarative way. These services provide rigor, clarity, and reliability.


AWS CloudFormation: a service that enables developers to create AWS resources in an orderly and predictable fashion. Resources are described in templates written in JSON or YAML.

The templates require a specific syntax and structure that depends on the types of resources being created and managed.

A CloudFormation template is deployed into the AWS environment as a stack. You can manage stacks through the AWS Management Console, AWS Command Line Interface, or AWS CloudFormation APIs. Before making changes to your resources, you can generate a change set, which is a summary of your proposed changes. Change sets enable you to see how your changes might impact your running resources, especially for critical resources, before implementing them.
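A template and a change set preview can be sketched together. The code below builds a minimal template with a single S3 bucket (the logical ID `AppBucket` is arbitrary), then compares two versions of it by logical ID and reports `Add`, `Modify`, or `Remove`, the same action names a change set uses. This is only a local approximation: a real change set is computed by CloudFormation against the deployed stack, for example with `aws cloudformation create-change-set`.

```python
import json


def bucket_template(versioned: bool) -> dict:
    """Minimal CloudFormation template: one S3 bucket with logical ID AppBucket."""
    bucket = {"Type": "AWS::S3::Bucket"}
    if versioned:
        bucket["Properties"] = {"VersioningConfiguration": {"Status": "Enabled"}}
    return {"AWSTemplateFormatVersion": "2010-09-09", "Resources": {"AppBucket": bucket}}


def preview_changes(old: dict, new: dict) -> dict:
    """Toy change-set preview that diffs two templates by logical ID.

    A real change set also reports details such as whether a resource
    will be replaced; this sketch only compares template text.
    """
    old_res, new_res = old.get("Resources", {}), new.get("Resources", {})
    changes = {}
    for logical_id in sorted(old_res.keys() | new_res.keys()):
        if logical_id not in old_res:
            changes[logical_id] = "Add"
        elif logical_id not in new_res:
            changes[logical_id] = "Remove"
        elif old_res[logical_id] != new_res[logical_id]:
            changes[logical_id] = "Modify"
    return changes


print(json.dumps(bucket_template(versioned=True), indent=2))
print(preview_changes(bucket_template(False), bucket_template(True)))  # {'AppBucket': 'Modify'}
```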

AWS CloudFormation workflow: creating an entire environment (stack) from one template

- You can use a single template to create and update an entire environment or separate templates to manage multiple layers within an environment. This enables templates to be modularized, and also provides a layer of governance that is important to many organizations.

- When you create or update a stack in the console, events are displayed showing the status of the configuration. If an error occurs, by default the stack is rolled back to its previous state. You can also receive notifications about stack events through Amazon Simple Notification Service (Amazon SNS).


AWS Cloud Development Kit (AWS CDK): an open source software development framework to model and provision your cloud application resources using familiar programming languages. AWS CDK enables you to model application infrastructure using TypeScript, Python, Java, and .NET. Developers can use their existing integrated development environment (IDE), with tools like autocomplete and in-line documentation, to accelerate development of infrastructure.

Constructs are the basic building blocks of CDK code. A construct represents a cloud component and encapsulates everything AWS CloudFormation needs to create the component.
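To illustrate how constructs compose, here is a toy model of the construct tree in plain Python. It is not the aws-cdk-lib API: real CDK code would use classes such as `aws_cdk.Stack` and `aws_cdk.aws_s3.Bucket(self, "Data", versioned=True)`, and it generates its own logical IDs. `ArtifactStore` is a hypothetical higher-level construct that bundles two buckets, and `synth()` collects everything into a CloudFormation-style resource map.

```python
class Construct:
    """Toy construct: a node in a tree that can contribute resources."""

    def __init__(self, scope, construct_id: str):
        self.id = construct_id
        self.children = []
        if scope is not None:
            scope.children.append(self)

    def resources(self) -> dict:
        # By default a construct just merges whatever its children define.
        merged = {}
        for child in self.children:
            merged.update(child.resources())
        return merged


class Bucket(Construct):
    """Leaf construct that maps to a single AWS::S3::Bucket resource."""

    def __init__(self, scope, construct_id: str, versioned: bool = False):
        super().__init__(scope, construct_id)
        self.versioned = versioned

    def resources(self) -> dict:
        resource = {"Type": "AWS::S3::Bucket"}
        if self.versioned:
            resource["Properties"] = {"VersioningConfiguration": {"Status": "Enabled"}}
        return {self.id: resource}


class ArtifactStore(Construct):
    """Hypothetical higher-level construct: a versioned data bucket plus a log bucket."""

    def __init__(self, scope, construct_id: str):
        super().__init__(scope, construct_id)
        Bucket(self, construct_id + "Data", versioned=True)
        Bucket(self, construct_id + "Logs")


class Stack(Construct):
    def synth(self) -> dict:
        """Collect every resource in the tree into a CloudFormation-style template."""
        return {"Resources": self.resources()}


stack = Stack(None, "DevStack")
ArtifactStore(stack, "Artifacts")
print(stack.synth())
```

The caller only asks for an `ArtifactStore`; the details of which resources that implies stay encapsulated inside the construct.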

The AWS CDK includes the AWS Construct Library, which contains constructs representing many AWS services.

AWS Cloud Development Kit for Kubernetes (cdk8s): an open-source software development framework for defining Kubernetes applications using general-purpose programming languages. Once you have defined your application in a programming language, cdk8s converts your application description into pure Kubernetes YAML, which can be consumed by any Kubernetes cluster running anywhere.
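What cdk8s produces is an ordinary Kubernetes manifest. As a rough illustration of that end result (not the cdk8s API), the function below builds a Deployment manifest in plain Python. The app name, image, replica count, and port are hypothetical, and the printed JSON can be applied with `kubectl apply -f`, which accepts JSON as well as YAML.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 2, port: int = 80) -> dict:
    """Build a Kubernetes Deployment manifest as a plain dict."""
    # The selector must match the pod template labels for the Deployment to manage its pods.
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": port}]}
                    ]
                },
            },
        },
    }


print(json.dumps(deployment_manifest("web", "nginx:1.25"), indent=2))
```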

Automation focuses on the setup, configuration, deployment, and support of infrastructure and the applications that run on it.

Internally, AWS relies heavily on automation to provide the core features of elasticity and scalability.

Manual processes are error prone, unreliable, and inadequate to support an agile business.

Frequently, an organization may tie up highly skilled resources to provide manual configuration, when their time could be better spent supporting other, more critical, and higher-value activities within the business.

Automation provides the following benefits:

- Rapid changes

- Improved productivity

- Repeatable configurations

- Reproducible environments

- Leveraged elasticity

- Leveraged automatic scaling

- Automated testing

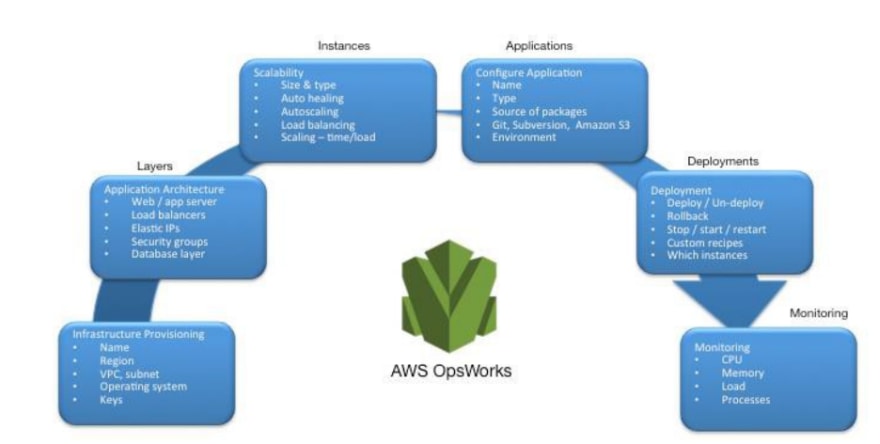

- AWS OpsWorks: provides even more levels of automation with additional features like integration with configuration management software (Chef) and application lifecycle management. You can use application lifecycle management to define when resources are set up, configured, deployed, undeployed, or shut down. With AWS OpsWorks, you define your application in configurable stacks. You can also select predefined application stacks.


AWS OpsWorks showing DevOps features and architecture

- Application stacks are organized into architectural layers so that stacks can be maintained independently.

- Out of the box, AWS OpsWorks also simplifies setting up Auto Scaling groups and Elastic Load Balancing load balancers, further illustrating the DevOps principle of automation.

- AWS OpsWorks supports application versioning, continuous deployment, and infrastructure configuration management.

- AWS OpsWorks also supports the DevOps practices of monitoring and logging.
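OpsWorks Stacks ties automation to lifecycle events (Setup, Configure, Deploy, Undeploy, and Shutdown), and each layer can list the recipes to run for each event. The sketch below models only that lookup; the layer names and Chef recipe names are hypothetical, and nothing here calls the OpsWorks API.

```python
LIFECYCLE_EVENTS = ("Setup", "Configure", "Deploy", "Undeploy", "Shutdown")

# Hypothetical layers, each mapping lifecycle events to Chef recipes.
layers = {
    "php-app": {"Setup": ["php::install"], "Deploy": ["php::deploy_app"]},
    "db": {"Setup": ["mysql::server"], "Configure": ["mysql::grant_app_access"]},
}


def recipes_for(event: str, layers: dict) -> dict:
    """Return the recipes each layer would run for one lifecycle event."""
    if event not in LIFECYCLE_EVENTS:
        raise ValueError(f"unknown lifecycle event: {event}")
    return {name: recipes[event] for name, recipes in layers.items() if event in recipes}


print(recipes_for("Setup", layers))
print(recipes_for("Deploy", layers))
```

Because recipes are attached per layer, the application and database layers can evolve independently, which matches the layered stack organization described above.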

AWS Elastic Beanstalk: a service to rapidly deploy and scale web applications developed with Java, .NET, PHP, Node.js, Python, Ruby, Go, and Docker on familiar servers such as Apache, NGINX, Passenger, and IIS. Elastic Beanstalk is an abstraction on top of Amazon EC2 and Auto Scaling, and it simplifies deployment by providing additional features such as cloning, blue/green deployments, the Elastic Beanstalk Command Line Interface (EB CLI), and integration with AWS Toolkit for Visual Studio, Visual Studio Code, Eclipse, and IntelliJ to increase developer productivity.

Monitoring and logging matter because feedback is critical. In AWS, feedback is provided by two core services: Amazon CloudWatch and AWS CloudTrail. Together they provide a robust monitoring, alerting, and auditing infrastructure so developers and operations teams can work together closely and transparently.

Amazon CloudWatch: Amazon CloudWatch metrics automatically collect data from AWS services such as Amazon EC2 instances, Amazon EBS volumes, and Amazon RDS DB instances. These metrics can then be organized into dashboards, and you can create alarms or events that trigger other events or perform Auto Scaling actions.

Amazon CloudWatch Alarms: You can set up alarms based on the metrics collected by Amazon CloudWatch. An alarm can then send a notification to an Amazon Simple Notification Service (Amazon SNS) topic or initiate Auto Scaling actions. An alarm requires a Period (the length of time over which the metric is evaluated to produce each data point), Evaluation Periods (the number of most recent periods, or data points, to evaluate), and Datapoints to Alarm (the number of data points within the evaluation periods that must be breaching for the alarm to go into the ALARM state).
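The Evaluation Periods and Datapoints to Alarm settings combine into an "M out of N" rule. The function below is a simplified sketch for a greater-than-threshold alarm: it ignores CloudWatch's treatment of missing data, and the CPU values and threshold are made up for illustration.

```python
def alarm_state(datapoints: list[float], threshold: float,
                evaluation_periods: int, datapoints_to_alarm: int) -> str:
    """Simplified 'M out of N' evaluation for a GreaterThanThreshold alarm.

    `datapoints` holds one value per Period, oldest first.
    """
    if not 1 <= datapoints_to_alarm <= evaluation_periods:
        raise ValueError("need 1 <= datapoints_to_alarm <= evaluation_periods")
    # N: only the most recent evaluation periods are considered.
    recent = datapoints[-evaluation_periods:]
    if len(recent) < evaluation_periods:
        return "INSUFFICIENT_DATA"  # simplification of missing-data handling
    # M: how many of those data points breach the threshold.
    breaching = sum(1 for value in recent if value > threshold)
    return "ALARM" if breaching >= datapoints_to_alarm else "OK"


cpu = [40, 85, 90, 50, 95]  # hypothetical CPU utilization (%), one point per period
print(alarm_state(cpu, threshold=80, evaluation_periods=3, datapoints_to_alarm=2))  # ALARM
print(alarm_state(cpu, threshold=80, evaluation_periods=3, datapoints_to_alarm=3))  # OK
```

Requiring 2 of 3 breaching points tolerates a single dip below the threshold, while 3 of 3 only alarms on a sustained breach.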


Amazon CloudWatch Logs: a log aggregation and monitoring service. AWS CodeBuild, CodeCommit, CodeDeploy, and CodePipeline provide integrations with CloudWatch Logs so that all of the logs can be centrally monitored.

- With CloudWatch Logs you can:
  - Query your log data
  - Monitor logs from Amazon EC2 instances
  - Monitor AWS CloudTrail logged events
  - Define a log retention policy

Amazon CloudWatch Logs Insights: scans your logs and enables you to perform interactive queries and visualizations. It understands various log formats and auto-discovers fields from JSON logs.
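Field discovery for JSON logs can be approximated locally: nested keys become dotted field names that you can then filter on. The sketch below is a local analogue, not the Logs Insights engine or API; in Logs Insights itself the equivalent would be a query along the lines of `fields @timestamp, @message | filter http.status = 500 | sort @timestamp desc | limit 20`. The log lines and field names are hypothetical.

```python
import json


def flatten(obj: dict, prefix: str = "") -> dict:
    """Flatten nested JSON fields using dot notation, e.g. 'http.status'."""
    out = {}
    for key, value in obj.items():
        name = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            out.update(flatten(value, name))
        else:
            out[name] = value
    return out


def query(lines: list[str], field: str, equals) -> list[dict]:
    """Rough local analogue of `filter <field> = <value>` over JSON log lines."""
    events = [flatten(json.loads(line)) for line in lines]
    return [event for event in events if event.get(field) == equals]


logs = [
    '{"level": "INFO", "http": {"status": 200, "path": "/health"}}',
    '{"level": "ERROR", "http": {"status": 500, "path": "/orders"}}',
]
print(sorted(flatten(json.loads(logs[1]))))  # ['http.path', 'http.status', 'level']
print(query(logs, "http.status", 500))
```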


Amazon CloudWatch Events: delivers a near real-time stream of system events that describe changes in AWS resources.

- You can match events and route them to one or more target functions or streams.

- CloudWatch Events becomes aware of operational changes as they occur.

- CloudWatch Events responds to these operational changes and takes corrective action as necessary, by sending messages to respond to the environment, activating functions, making changes, and capturing state information.

Amazon EventBridge: a serverless event bus that enables integrations between AWS services, software as a service (SaaS) applications, and your applications. In addition to building event-driven applications, you can use EventBridge to receive notifications about events from services such as CodeBuild, CodeDeploy, CodePipeline, and CodeCommit.
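Rules in CloudWatch Events and EventBridge select events with JSON event patterns. The sketch below implements only the exact-value subset of that matching: every pattern key must exist in the event, and a leaf list holds the allowed values. Prefix, numeric, and other content filters, and matching against array values inside the event, are not handled; the pipeline name in the sample event is hypothetical.

```python
def matches(pattern: dict, event: dict) -> bool:
    """Exact-value subset of EventBridge event pattern matching."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            # Nested pattern: recurse into the nested event field.
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            # Leaf: the event value must be one of the listed values.
            return False
    return True


# A rule that fires when any CodePipeline execution fails.
rule = {
    "source": ["aws.codepipeline"],
    "detail-type": ["CodePipeline Pipeline Execution State Change"],
    "detail": {"state": ["FAILED"]},
}
event = {
    "source": "aws.codepipeline",
    "detail-type": "CodePipeline Pipeline Execution State Change",
    "detail": {"pipeline": "my-pipeline", "state": "FAILED"},
}
print(matches(rule, event))  # True
```

Fields the pattern does not mention (such as `pipeline` here) are ignored, which is what lets one rule cover every pipeline in the account.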

AWS CloudTrail: it’s important to understand who is making modifications to your infrastructure. In AWS, this transparency is provided by the AWS CloudTrail service.

- All AWS interactions are handled through AWS API calls that are monitored and logged by AWS CloudTrail.

- All generated log files are stored in an Amazon S3 bucket that you define.

- Log files are encrypted using Amazon S3 server-side encryption (SSE).
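Because each CloudTrail log file is a JSON document with a `Records` array, answering "who changed what" can start with a simple filter. The sketch below counts non-read-only calls per caller ARN in one log file that has already been downloaded from S3 and decompressed. The sample records are trimmed to the fields used here, and the account ID is the documentation placeholder 111122223333.

```python
import json
from collections import Counter


def writers(log_file_text: str) -> Counter:
    """Count non-read-only API calls per caller ARN in one CloudTrail log file."""
    records = json.loads(log_file_text)["Records"]
    return Counter(
        record["userIdentity"].get("arn", "unknown")
        for record in records
        if not record.get("readOnly", False)  # keep only calls that modify resources
    )


sample = json.dumps({"Records": [
    {"eventName": "DescribeInstances", "readOnly": True,
     "userIdentity": {"arn": "arn:aws:iam::111122223333:user/alice"}},
    {"eventName": "TerminateInstances", "readOnly": False,
     "userIdentity": {"arn": "arn:aws:iam::111122223333:user/bob"}},
    {"eventName": "PutBucketPolicy", "readOnly": False,
     "userIdentity": {"arn": "arn:aws:iam::111122223333:user/bob"}},
]})
print(writers(sample))
```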

Whether you are adopting a DevOps culture in your organization or going through a DevOps cultural transformation, communication and collaboration are an important part of your approach. At Amazon, we have realized that there is a need to change the mindset of teams, and hence we adopted the concept of Two-Pizza Teams.

"We try to create teams that are no larger than can be fed by two pizzas," said Bezos. "We call that the two-pizza team rule."

The smaller the team the better the collaboration.


Collaboration is also very important as software releases move faster than ever, and a team’s ability to deliver software can be a differentiating factor for your organization against the competition.

- Communication between teams is also important as we move toward the shared responsibility model and away from the siloed development approach. This brings a sense of ownership to the team and shifts their perspective to see the whole process end to end. Your team should not think of your production environments as black boxes where they have no visibility.

- Cultural transformation is also important: you may build a common DevOps team, or you may have one or more DevOps-focused members on your team. Both approaches introduce shared responsibility into the team.

Whether you are going through a DevOps transformation or implementing DevOps principles for the first time, you should think of security as integrated into your DevOps processes. It should be a cross-cutting concern across your build, test, and deployment stages.

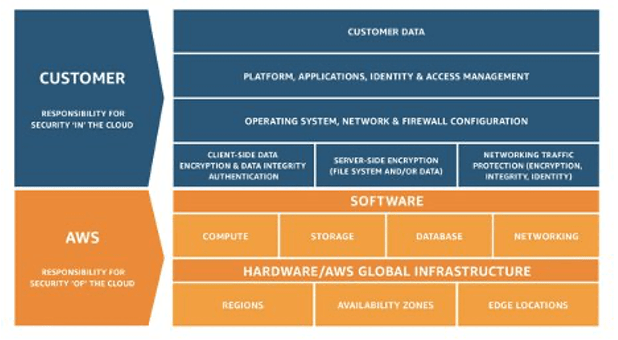

Security is a shared responsibility between AWS and the customer. The different parts of the Shared Responsibility Model are explained below:

AWS responsibility “Security of the Cloud”: AWS is responsible for protecting the infrastructure that runs all of the services offered in the AWS Cloud. This infrastructure is composed of the hardware, software, networking, and facilities that run AWS Cloud services.

Customer responsibility “Security in the Cloud”: Customer responsibility is determined by the AWS Cloud services that a customer selects. This determines the amount of configuration work the customer must perform as part of their security responsibilities.

AWS Identity and Access Management (IAM) defines the controls and policies that are used to manage access to AWS resources. Using IAM, you can create users and groups and define permissions for various DevOps services.

In addition to the users, various services may also need access to AWS resources.

IAM is one component of the AWS security infrastructure. With IAM, you can centrally manage groups, users, service roles and security credentials such as passwords, access keys, and permissions policies that control which AWS services and resources users can access.

An IAM policy defines a set of permissions, and you can attach it to a user, a group, or a role (including service roles that AWS services assume on your behalf) to grant those permissions. You can also use IAM to create roles, which are used widely within a DevOps strategy.
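An IAM policy is a JSON document made of Allow and Deny statements. The sketch below shows a hypothetical policy for a CodeCommit repository and a simplified evaluator that follows IAM's basic rule: an explicit Deny wins, otherwise any matching Allow grants access, otherwise access is implicitly denied. It ignores conditions, principals, and permission boundaries, and it uses `fnmatch` wildcards as an approximation of IAM's `*` and `?` matching.

```python
from fnmatch import fnmatchcase

# Hypothetical policy: allow Git pull/push on one repository, deny all deletes.
policy = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow",
         "Action": ["codecommit:GitPull", "codecommit:GitPush"],
         "Resource": "arn:aws:codecommit:us-east-1:111122223333:my-repo"},
        {"Effect": "Deny",
         "Action": "codecommit:Delete*",
         "Resource": "*"},
    ],
}


def _as_list(value):
    return value if isinstance(value, list) else [value]


def is_allowed(policy: dict, action: str, resource: str) -> bool:
    """Simplified IAM evaluation: explicit Deny > Allow > implicit deny."""
    allowed = False
    for stmt in policy["Statement"]:
        # Action names are case-insensitive in IAM, so compare lowercased.
        action_hit = any(fnmatchcase(action.lower(), a.lower()) for a in _as_list(stmt["Action"]))
        resource_hit = any(fnmatchcase(resource, r) for r in _as_list(stmt["Resource"]))
        if not (action_hit and resource_hit):
            continue
        if stmt["Effect"] == "Deny":
            return False  # an explicit deny always wins
        allowed = True
    return allowed


repo = "arn:aws:codecommit:us-east-1:111122223333:my-repo"
print(is_allowed(policy, "codecommit:GitPull", repo))          # True
print(is_allowed(policy, "codecommit:DeleteRepository", repo))  # False
```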

You can find the original AWS Whitepaper here.

This is a contribution to AWS Community MENA.

For more Whitepapers, please follow the hashtag #aws_whitepaper_summary.

Connect with me on LinkedIn

Follow AWSbelAraby
