
Red Hat OpenShift 1001: What is Red Hat OpenShift and Why Does it Matter?

If you are a technologist, there is a very good chance you have at some point heard of Red Hat and its enterprise Linux solutions, and maybe even of Red Hat's platform called "OpenShift". But what exactly is Red Hat OpenShift (RHOS), and why does it matter?

In this blog, I will try my best to summarize what Red Hat OpenShift is, along with some of the high-level concepts and ideas that help you understand why it matters in the current climate of the development world.

And for those who may be interested in learning about Red Hat OpenShift in a webinar format where you can ask questions and see a demo of the RHOS platform, please register for free at this link. The Webinar took place on July 29th, 2021 @ 12pm EST/ 11am CST/ 9am PST. Click here to watch the replay:

So here is a breakdown of what this article will cover:

Simply, Red Hat OpenShift is a platform that allows you to easily manage, deploy and orchestrate containerized applications in the cloud. RHOS hopes to be the answer to one of the biggest problems that derives from developing and deploying microservice-based applications in the world. Its goal is to deliver a consistent and standardized approach to overseeing containerized application deployment.

So that all sounds really awesome and cool, but in reality, what does that actually mean?

So the first thing is understanding why a platform like RHOS even matters. Over the past years, the philosophy (or approach) of application development has evolved, primarily from the "traditional" monolithic architecture to the micro-service architecture. Without going into much detail on the differences between the two: a monolithic application has all of its code housed in one place and tightly coupled together, meaning that a change to one aspect of the application's code can have a large impact on the whole application. An application using the micro-service architecture, on the other hand, is composed of smaller, loosely coupled services (micro-services) that can be housed in many different locations, so a change to one service does not have a huge impact on the application as a whole.

And as you might guess, because of how micro-service based applications work, all of the smaller services (databases, servers, frontend, etc.) can be housed in different environments across different clouds (Hybrid Cloud). That makes it a good amount of work to maintain, organize, and orchestrate the services, manage their connections, and manage their credentials. And that's where RHOS steps in and is a huge help.

I'm a big fan of analogies and imagery to facilitate technical understanding, so let's liken RHOS to an airport's ATC, or Air Traffic Controller. At an airport, the whole purpose of the ATC is to help facilitate the departures, landings, and communications of all the planes in the area that plan to engage with the airport in some way. In this analogy, imagine the micro-services that make up your application to be the planes that want to use this airport.

Without an ATC, it's the responsibility of the planes to communicate and interact with each other in order to determine when it is okay to land, depart, or fly within the vicinity of the airport. In the aviation world, an airport without an ATC is called an "uncontrolled airport" and, as the name suggests, there is no central control at the airport. In many ways, it's "every plane for itself". Now in practice, an airport without an ATC generally operates okay. Planes communicate with each other, have good practices in place to keep each other safe, and are prepared for the circumstances, but there are plenty of complications. By what means do planes communicate with each other? What if some planes are fitted with more modern technology like radar and some planes don't even have radios (even though they should)? What if some pilots follow the accepted aviation rules but others do not? These types of complications and considerations go on and on. Similarly, when a micro-service application does not have a central orchestrator to manage its services, there are plenty of complications. How do services communicate? How do services get deployed? The list of complications goes on. Without something to manage the services, it gets complicated and nuanced. In many ways you could say this type of application is an "uncontrolled application".

But with an ATC, an airport operates very differently. In this case, the "controlled airport" manages all of the affairs of the planes engaged with it. It keeps them aware of other planes in the area, it lets them know when it is safe to land or depart, and it sets a standard for all of the planes that interact with the airport, ensuring no plane is unaccounted for or flying "rogue". Similarly, when a micro-service application is managed by RHOS, RHOS makes it a "controlled application". RHOS can manage how services communicate, how services are deployed, how services are scaled, how security is implemented in the services, and the list goes on and on. So instead of a team of operations engineers or developers having to guess how code will be implemented and deployed, teams can focus on their respective roles and not get bogged down in the complexities that come with micro-service development, deployment, and maintenance.

So if you are anything like me, you probably are thinking, "That's all fine and dandy that RHOS does that, but why should I really care?". Well, give me a few moments to answer that question and share why you should care about RHOS...

One thing you learn early in development is that the path from development to deployment can be a somewhat rocky road. Once the code for an application starts being developed, it's crucial that the project team begins thinking through how the application will be deployed and maintained. In the micro-service world, that can be more complicated than one would initially think. Questions come up like "Where will we deploy our services and manage them?", "How will we manage code changes and deployment of changes?", and "How will we manage application scaling to meet demand?". To be very honest, just dealing with one of those questions can be a pretty hefty task. And that's not even considering the nuances that come with communicating all the necessary details that need to be shared between the development and operations teams in the real world.

But that's where the power of RHOS really shines! RHOS not only makes managing code deployments and operations easier, it also creates a kind of "one-stop shop" for both development and operations teams.

For Developers, it makes it easy to focus on what developers are best at doing: developing and committing code. With RHOS, developers can focus on just putting their code into their desired code repositories and let tools like "Source-to-Image" manage the building and deployment of code.

For Operations Teams, all services being used in your micro-service application can now be managed in one single place, even in Hybrid or multi-cloud deployments. That means that Operations can focus on one single environment for managing ALL of their micro-services. With all of your services in one place, operations can focus on optimizing deployments and configuring connections and security instead of managing each service in its own separate environment. Along with that, operations gets access to horizontal auto-scaling tools that take some of the pain out of managing application demand in real time.
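To make "horizontal auto-scaling" a little more concrete, here is a minimal Python sketch of the replica calculation that the Kubernetes Horizontal Pod Autoscaler documents (RHOS runs on Kubernetes). The function name, parameter names, and default limits are my own for illustration, and the sketch leaves out details of the real autoscaler such as its tolerance band and stabilization windows.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Suggest a replica count using ceil(current * currentMetric / targetMetric).

    The min/max limits are illustrative defaults, not values from any real cluster.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * (current_metric / target_metric))
    # Clamp the suggestion to the allowed range.
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # 4 instances averaging 90% CPU against a 60% target: scale out to 6.
    print(desired_replicas(4, 90.0, 60.0))
    # 4 instances averaging 30% CPU against a 60% target: scale in to 2.
    print(desired_replicas(4, 30.0, 60.0))
```

The idea is simply that the busier each instance is relative to the target, the more instances get requested, within limits the operations team sets.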

One more great thing to note is that RHOS is a container-based environment. That means that whatever the developer experiences in their local containerized environment will be the same as what is deployed in production. That means operations and development never have to encounter that dilemma where code is working on a developer's local machine but not in the production environment. In my next blog in this series, Red Hat OpenShift 1002, I will cover containerization in more detail, so don't worry if you are not sure what that means right now.

Beyond RHOS being a great tool for developers and operations engineers, the introduction of RHOS into the technology industry ecosystem is a direct response to the needs and expectations being set in the larger industry: standardization and speed to market. So what do I mean by that? What I mean is that companies, large and small, are engaging more and more in competitive markets that necessitate tools that allow their development and operations teams to rapidly and effectively deploy their applications. In the larger market, there is little tolerance for long application downtimes and slow development-to-deployment pipelines. And that's why RHOS continues to grow and be used by more organizations. I'll explain what I mean using three words: Consistency, Efficiency, and Efficacy.

Consistency: Companies now expect new deployments of applications, services, and updates to existing applications to be repeatable and standardized. As companies augment teams and hire new employees to meet changing demands, they want to know that there are systems in place that team members can easily plug into. And that's where RHOS comes in. It's a standardized platform that allows teams to easily create "ways of working" and procedures for development and deployment in the real world.

Efficiency: As I mentioned before, speed-to-market is of huge importance to companies. With RHOS, companies are more equipped than ever to develop, deploy, and monitor applications seamlessly. Even with applications that use services spanning multiple types of hosting environments, teams can manage connections and service credentials in one place, saving tons of time and effort. With the suite of tools that RHOS offers, teams can spend more time doing what they're great at and less time worrying about the minutiae of service deployment.

Efficacy: By Efficacy I mean getting the results you desire from whatever you happen to be doing. In application development, getting the same desired result from a local environment in all the possible deployment environments can be hard to come by. But with RHOS's focus on containerization, which makes environmental deployment factors almost null and void, development teams and companies can be more assured that what they see in testing environments, production environments, or any other environment will reflect exactly what they expect. And with RHOS it doesn't just stop at deployment. RHOS also has the ability to detect when a service goes down and can automatically rebuild the application and re-deploy it, minimizing unexpected downtimes. Along with that, operations engineers are able to use automatic scaling mechanisms to ensure that the applications are always able to meet demand and are always operating as expected.
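If you are curious what "detect when a service goes down and bring it back" looks like as an idea, here is a toy Python sketch of a reconciliation loop: compare the desired number of instances with what is actually running, and correct the difference. This is only a simulation under my own assumptions; the `Service` class and `reconcile` function are hypothetical names and are not part of RHOS or Kubernetes.

```python
from dataclasses import dataclass, field


@dataclass
class Service:
    """Hypothetical stand-in for a service and its running instances."""
    name: str
    desired: int
    running: list = field(default_factory=list)  # names of healthy instances
    started: int = 0                             # total instances ever started

    def start_instance(self) -> None:
        self.started += 1
        self.running.append(f"{self.name}-{self.started}")


def reconcile(service: Service) -> int:
    """Start or stop instances until running matches desired.

    Returns the number of instances that were started or stopped.
    """
    changes = 0
    while len(service.running) < service.desired:
        service.start_instance()
        changes += 1
    while len(service.running) > service.desired:
        service.running.pop()
        changes += 1
    return changes


if __name__ == "__main__":
    frontend = Service("frontend", desired=3)
    reconcile(frontend)                     # initial rollout: three instances start
    frontend.running.remove("frontend-2")   # simulate one instance crashing
    reconcile(frontend)                     # a replacement instance is started
    print(frontend.running)
```

A real orchestrator runs this kind of comparison continuously, which is why a crashed service can come back without anyone being paged.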

As you continue to learn more about RHOS you will begin to see even more of its benefits and why the industry is adopting its technology so quickly.

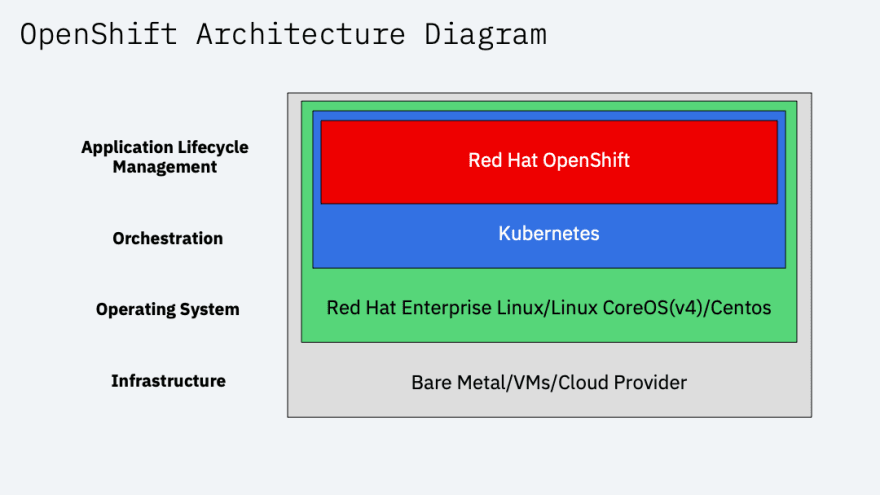

When learning RHOS and trying to dig into what it does and how it works, it becomes very helpful to have at least a basic understanding of how RHOS works behind the scenes. So let's take a few moments and dig into the architecture of RHOS and what creates its backbone. I will avoid deep diving into every element of its "tech stack" but hopefully this overview will give you a good idea of how it all works.

So working our way up from the bottom of the diagram, we have the Infrastructure Layer that RHOS sits on. This represents the actual location where RHOS and its dependencies are housed. The base infrastructure could be a bare metal server, a virtual machine, or any cloud provider that supports RHOS. This gives you a lot of options and means that how you deploy RHOS is all up to what works best for you and your team.

So next up is the Operating System Layer that manages RHOS and sits on top of the Infrastructure Layer. In order for a server of any type to be useful to anyone, it generally needs some type of Operating System, or OS, that manages the kernel-level processes needed to run applications or services. For RHOS, Red Hat Enterprise Linux (RHEL) and, as of RHOS v4, Red Hat Enterprise Linux CoreOS are the most commonly used OSs. It is also common to see teams use CentOS as an alternative to RHEL or CoreOS.

Sitting on top of our OS is our Orchestration Layer. On this layer we find Kubernetes. One thing to note about Red Hat OpenShift is that it is essentially a "flavor" of Kubernetes, or an opinionated version of Kubernetes. So what does that mean? Basically, RHOS takes a specific approach to orchestration with Kubernetes: it sets the standard for how Kubernetes is implemented. Kubernetes is fundamentally the software that manages the orchestration of our services and applications. It is now ubiquitous as the micro-services management tool.

So finally, on top of Kubernetes is Red Hat OpenShift in the Application Lifecycle Management Layer. As I just mentioned, RHOS is an opinionated version of Kubernetes, so under the hood it is running Kubernetes. But it's more than just that. RHOS adds a new level of options for application lifecycle management. It gives you access to tools like Jenkins, Source-to-Image (S2I), Ansible automation, and much more.

Since I just mentioned the application lifecycle tools that RHOS gives us access to, let's take a look at how this might play out in the real world for Developers and Operations Engineers.

So here is a diagram of an example of what lifecycle management looks like in Red Hat OpenShift:

So let's start with a Developer's perspective when using RHOS. The first thing a developer needs to do is create a RHOS project, using either the RHOS CLI or the RHOS web console. Once that is created, a developer would take their finished code and push it to a code repo. Because the developer has appropriately created the RHOS project and connected their repo to it, the code push will trigger a webhook that starts a Jenkins build process. That build process will create a container image from the code and push it upstream to a container registry; the container registry can be the built-in private RHOS container registry, your own private registry, or any public registry of your choosing. Once your image has been placed in a registry, RHOS will take that container image and push it into the RHOS environment, or more specifically an OpenShift cluster. Once there, RHOS will create instances of that image in the cluster for use by your application. As you may have noticed, this is a very lightweight process for developers. Once the RHOS project is set up, all developers have to do is focus on their code and push it to their desired repo.
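To give a rough feel for how the "push code, webhook fires, image gets built" step is described to the platform, here is a Python sketch that assembles an OpenShift BuildConfig as a plain dictionary and prints it as JSON. Note that this sketch uses the Source-to-Image build strategy with a GitHub webhook trigger rather than the Jenkins pipeline described above, and that the app name, repo URL, builder image, and secret name are all placeholders I made up. Treat the field layout as my best understanding of the `build.openshift.io/v1` API and check it against the official docs before relying on it.

```python
import json


def build_config(app_name: str, git_uri: str,
                 builder_image: str, webhook_secret: str) -> dict:
    """Assemble a BuildConfig-style manifest (all argument values are hypothetical)."""
    return {
        "apiVersion": "build.openshift.io/v1",
        "kind": "BuildConfig",
        "metadata": {"name": app_name},
        "spec": {
            # Where the source code lives.
            "source": {"type": "Git", "git": {"uri": git_uri}},
            # Source-to-Image: combine the source with a builder image.
            "strategy": {
                "type": "Source",
                "sourceStrategy": {
                    "from": {
                        "kind": "ImageStreamTag",
                        "namespace": "openshift",
                        "name": builder_image,
                    }
                },
            },
            # Where the resulting container image is pushed.
            "output": {"to": {"kind": "ImageStreamTag", "name": f"{app_name}:latest"}},
            # A push to the repo calls the webhook, which starts a build.
            "triggers": [
                {"type": "GitHub",
                 "github": {"secretReference": {"name": webhook_secret}}},
            ],
        },
    }


if __name__ == "__main__":
    manifest = build_config(
        app_name="my-app",                        # placeholder
        git_uri="https://example.com/my-app.git",  # placeholder
        builder_image="python:3.9",               # placeholder builder image tag
        webhook_secret="my-app-webhook",          # placeholder secret name
    )
    print(json.dumps(manifest, indent=2))
```

The point is less the exact fields and more the shape: source in, build strategy in the middle, image out, and a trigger that kicks it all off when code is pushed.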

Now that we have looked at the Developer's perspective using Red Hat OpenShift, let's take a look at what the experience might look like from an Operations Engineer's perspective. In a broad sense, there are really only two major things on an Operations Engineer's mind:

The first is that the application is available at the maximum possible percentage and that the application availability never drops below some desired percentage, say 99%.

Second is the ability to scale the application and application services as needed depending on demand.
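To put a number on that first concern, here is a small Python sketch that turns an availability percentage into a downtime "budget". The function name and the choice of a 365-day year are my own assumptions for illustration.

```python
def downtime_budget_hours(availability_percent: float,
                          period_hours: float = 365 * 24) -> float:
    """Hours of downtime allowed in a period at a given availability target."""
    if not 0 <= availability_percent <= 100:
        raise ValueError("availability_percent must be between 0 and 100")
    return period_hours * (100 - availability_percent) / 100


if __name__ == "__main__":
    for target in (99.0, 99.9, 99.99):
        hours = downtime_budget_hours(target)
        print(f"{target}% availability allows about {hours:.2f} hours of downtime per year")
```

At 99%, that works out to roughly 87.6 hours (a little over three and a half days) of downtime per year, which is why teams often aim for more "nines" than that.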

Luckily, RHOS helps Operations Engineers tackle both of these concerns. With RHOS's built-in availability monitoring features, teams can use the RHOS web console to verify the status of applications and services and respond as needed. Also, with its built-in self-healing features, RHOS can automatically detect when a service has gone down and restart it when needed. Along with the ability to monitor services, RHOS offers automatic service scaling using Ansible Playbooks. Ansible allows Operations Engineers to use automation to intelligently scale services and applications depending on demand. As Operations Engineers readily know, managing scaling can be a very difficult and nuanced task when an application is live.

So hopefully from that simple example you can see some of the capabilities and powers that RHOS offers to both developers and operations engineers.

So now that you understand the basics of RHOS and why it matters, it's time to dive a bit deeper into its technology and some of its core principles.

Our next stop in this Red Hat OpenShift 1000 series is to look at the concept of containers and how they relate to RHOS. We will be focusing on what container technology is, how it works, some of the common tools, and its connection to RHOS.

I encourage you to look out for the next entry in this series: Red Hat OpenShift 1002: What are Containers. It will also include a blog, an associated video and, of course, a live webinar that will cover containers in detail and give you an opportunity to ask questions and see containers in action.

The webinar is scheduled for September 9th, 2021 @ 12pm EST/ 11am CST/ 9am PST and you can enroll now to watch it live here:

Beyond participating in the next part of the series, I very much encourage you to continue learning about RHOS and what it is and what it's capable of. Here are a few resources you can use to enrich your knowledge on RHOS:

"What is OpenShift" Link

"Introduction to Red Hat OpenShift Container Platform" Link

"Red Hat OpenShift 101: Learn about Enterprise Kubernetes"

Webinar on Crowdcast

"Red Hat OpenShift 101 continued: Hybrid Cloud with Kubernetes, Logging and Databases"

Webinar on Crowdcast

Also, if you are interested in the cloud and would like to experiment with IBM Cloud for FREE, please feel free to sign-up for an IBM Lite Account here: IBM Cloud Lite Signup

==== FOLLOW ME ON SOCIAL MEDIA ====

Twitter: Bradston Dev

Dev.to: @bradstondev

Youtube: Bradston YT
