
Operating Systems: Introduction

An operating system is software that manages a computer's hardware.

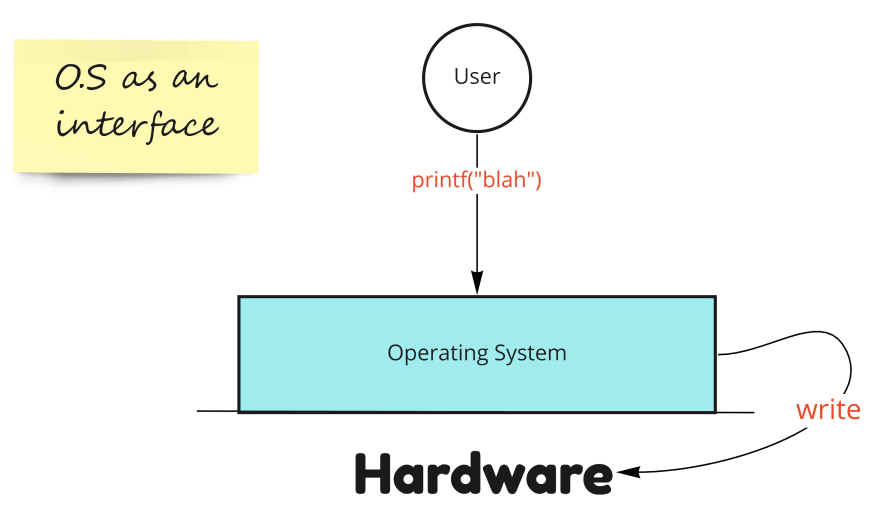

To put it more concretely, it acts as an interface between the hardware and the software (applications) running on it.

For example, have you ever wondered how the `printf` (in C), `print` (in Python), or `println` (in Java) function displays stuff on the console? The majority of you haven't! Let's take the example of the `printf` function of the C language.

When you try to print some stuff on the console using `printf("blah blah");`, the internal code of `printf` makes a system call that is provided by your operating system (`write` on Linux and macOS; Windows offers its own equivalent). It's because of this call that you can display things on the console. The code behind it is very complex, since it ultimately has to talk to the hardware (your monitor). In a world devoid of operating systems, think how complex it would be just to print stuff on the console! And I didn't even mention the complexity of reading input 😶
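To see that there is nothing magical underneath, here is a minimal sketch in Python that skips `print` and hands the bytes to the operating system directly. It assumes a POSIX-like system, where `os.write` is a thin wrapper around the `write` system call and file descriptor 1 is standard output. The helper name `print_via_write` is made up for this example.

```python
import os


def print_via_write(text: str, fd: int = 1) -> int:
    """Send text to file descriptor fd (1 is standard output) via os.write."""
    data = text.encode("utf-8")
    written = 0
    # The OS may accept fewer bytes than requested, so loop until all are sent.
    while written < len(data):
        written += os.write(fd, data[written:])
    return written


if __name__ == "__main__":
    print_via_write("blah blah\n")
```

Everything that happens after `os.write` is called (finding the terminal, drawing the characters) is the operating system's job, which is exactly the convenience described above.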

It acts as a resource allocator. Resources here include the CPU, memory (RAM + ROM), printers, scanners, etc.

It acts as a manager: it manages memory, processes, files, security, etc. In a simple sense, you can think of it as doing the book-keeping job in a library.

The primary goal of an operating system is convenience for the end users. Surprisingly, efficiency is only the secondary goal 😯

Assume we have a single CPU unless specified otherwise.

‼ The definition varies from author to author so take it with a pinch of salt 🙂

The idea behind multiprogramming is that the CPU should never sit idle. Taking the above example, if J1 goes for I/O, then the CPU can happily execute J2. If J2 also goes for I/O, the CPU can execute J3.

So it seems like this is the best approach?

There's a problem here. For example, let's say J2 takes 1 unit of time, J3 takes 2 units, and J1 takes 100 units to execute, and no I/O is involved for any of the jobs. Now if J1 arrives first, it will not leave the CPU for a loooong time, thereby starving J2 and J3, which otherwise would have finished in very little time.

💡 In operating system lingo, this is called non-preemptive scheduling (of jobs), because once we start a job, we don't stop executing it unless the job itself asks for I/O or gives up the CPU for some other reason.
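The numbers from the J1/J2/J3 example can be checked with a tiny simulation. This is only a sketch: it assumes all three jobs are ready at time 0, are served in arrival order, and never do I/O. The function name `fcfs_waiting_times` is made up for illustration.

```python
def fcfs_waiting_times(jobs):
    """jobs: list of (name, burst_time) in arrival order, all ready at time 0.

    Returns how long each job waits before it first gets the CPU.
    """
    clock = 0
    waits = {}
    for name, burst in jobs:
        waits[name] = clock  # the job waits for everything ahead of it
        clock += burst       # then runs to completion, never interrupted
    return waits


if __name__ == "__main__":
    print(fcfs_waiting_times([("J1", 100), ("J2", 1), ("J3", 2)]))
    # {'J1': 0, 'J2': 100, 'J3': 101}
```

J2 needs only 1 unit of CPU time but waits 100 units for it, which is the problem described above.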

A fixed slice of time (the time quantum) is given to every process (job). If the process is not completed within that time quantum, it is scheduled again later, and another process gets its chance.

In other words, it is preemptive multiprogramming.

Benefit: responsiveness, i.e. short jobs no longer starve behind a long one.
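Here is a rough sketch of the time-quantum idea, reusing the earlier job lengths (J1 = 100, J2 = 1, J3 = 2). It assumes the simplest possible policy, a plain round-robin queue with all jobs ready at time 0 and no cost for switching between them. The function name `round_robin` and the quantum values are choices made for this example.

```python
from collections import deque


def round_robin(jobs, quantum):
    """jobs: list of (name, burst_time), all ready at time 0.

    Returns the time at which each job finishes.
    """
    queue = deque(jobs)
    clock = 0
    finished = {}
    while queue:
        name, remaining = queue.popleft()
        run = min(quantum, remaining)  # run for one quantum at most
        clock += run
        remaining -= run
        if remaining > 0:
            queue.append((name, remaining))  # preempted: back of the queue
        else:
            finished[name] = clock
    return finished


if __name__ == "__main__":
    print(round_robin([("J1", 100), ("J2", 1), ("J3", 2)], quantum=1))
    # {'J2': 2, 'J3': 5, 'J1': 103}
```

Even though J1 arrives first, J2 is done at time 2 and J3 at time 5, while J1 still finishes at time 103, the same as it would without preemption in this idealised model.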

We have more than one CPU, which is common these days!

So, the idea is to apply multitasking principles to every CPU. This scheme is also known as parallelism.

In simple words, assign jobs to every CPU.

Benefit: less costly and more efficient than having separate computers, and more reliable, i.e. if CPU1 fails, our work is still not halted.
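"Assign jobs to every CPU" can be sketched as a toy model. This is not how a real operating system schedules work; it simply assumes each job goes, in arrival order, to whichever CPU becomes free first and then runs there without interruption. The function name `assign_jobs` and the CPU numbering (starting from 0) are made up for this example.

```python
import heapq


def assign_jobs(jobs, cpu_count):
    """jobs: list of (name, burst_time), all ready at time 0.

    Returns {name: (cpu_id, finish_time)}.
    """
    # Min-heap of (time at which the CPU becomes free, cpu_id).
    cpus = [(0, cpu_id) for cpu_id in range(cpu_count)]
    heapq.heapify(cpus)
    schedule = {}
    for name, burst in jobs:
        free_at, cpu_id = heapq.heappop(cpus)  # earliest available CPU
        finish = free_at + burst
        schedule[name] = (cpu_id, finish)
        heapq.heappush(cpus, (finish, cpu_id))
    return schedule


if __name__ == "__main__":
    print(assign_jobs([("J1", 100), ("J2", 1), ("J3", 2)], cpu_count=2))
    # {'J1': (0, 100), 'J2': (1, 1), 'J3': (1, 3)}
```

With two CPUs, the long job J1 keeps one CPU busy while J2 and J3 finish on the other by time 3.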
