
Getting Started with FastAPI and Docker, Part 1

Creating APIs, or application programming interfaces, is an important part of making your software accessible to a broad range of users. FastAPI is a modern, high-performance Python web framework for building APIs. It is built on top of Starlette (for the web parts) and Pydantic (for the data parts), and it is designed to let developers quickly build robust, high-performance APIs using standard Python type hints.

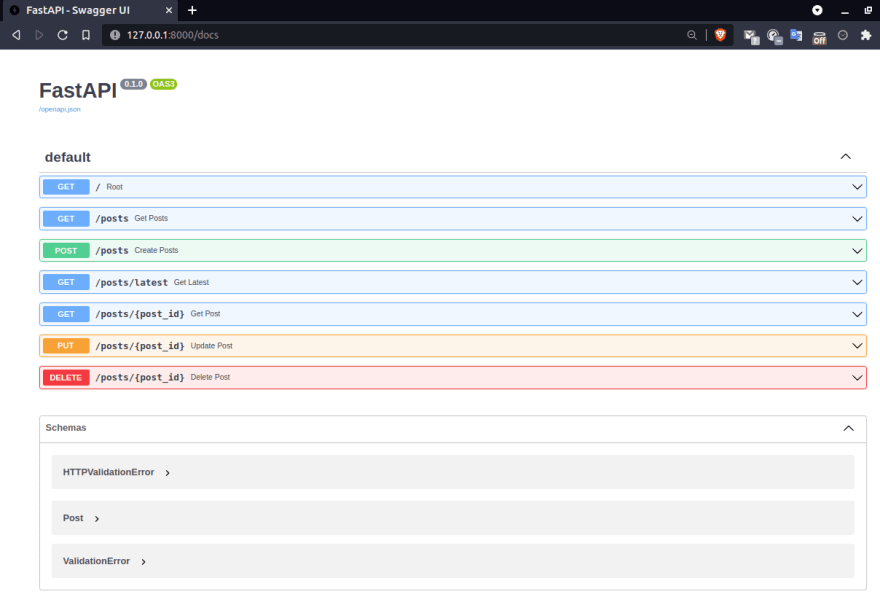

The framework's official website lists many of FastAPI's features. The one I like most is the automatic interactive API documentation, provided by both Swagger UI and ReDoc.

Create the project directory:

```shell
mkdir fastapi_learn && cd fastapi_learn
```

Once inside the project directory, create an app directory containing an empty __init__.py file and a main.py file. You should have a directory structure that looks like this:

```
.
└── app
    ├── __init__.py
    └── main.py
```

As good practice, we start by creating a virtual environment in the project root, so our FastAPI project's dependencies stay isolated from the rest of the system.

```shell
# With the third-party virtualenv package:
$ virtualenv env

# With the venv module built into Python 3:
$ python3 -m venv env
```

To use the environment's packages in isolation, you need to activate it. To do this, run:

```shell
$ source env/bin/activate
(env) $
```

Now install FastAPI:

```shell
$ pip install fastapi
```

You will also need an ASGI server, such as Uvicorn, to serve the application:

```shell
$ pip install "uvicorn[standard]"
```

Once the installation is complete, we are ready to build our application.
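Before writing any code, you can confirm from Python that the virtual environment is active and that both packages were installed into it. The script below is a small sketch; the file name check_env.py and both helper names are hypothetical. It relies on the fact that inside an environment created with python3 -m venv, sys.prefix points at the environment while sys.base_prefix still points at the base interpreter.

```python
import sys
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def in_virtualenv() -> bool:
    # Inside a venv, sys.prefix is the environment directory,
    # while sys.base_prefix is the interpreter it was created from.
    return sys.prefix != sys.base_prefix


def installed_version(package: str) -> Optional[str]:
    # Return the installed distribution version, or None if it is missing.
    try:
        return version(package)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    print("inside a virtual environment:", in_virtualenv())
    for package in ("fastapi", "uvicorn"):
        print(package, installed_version(package) or "not installed")
```

Run it with the environment activated (for example python check_env.py). Outside the environment, the first line should report False.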

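Our code lives in main.py inside the app directory. If you have not created it yet, run the following from inside app:

```shell
$ touch main.py
```

Add the following to main.py: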

```python
from fastapi import FastAPI

app = FastAPI()


@app.get("/")
def root():
    return {"Hello": "World"}
```

Here we import FastAPI, the Python class that provides all the functionality for the API, and create a FastAPI application instance, app. The @app.get("/") path operation decorator tells FastAPI that the function right below it handles requests that go to the path / using the GET operation. That function, root, is the path operation function: FastAPI calls it whenever it receives a GET request for the URL /, and converts the dictionary it returns into a JSON response.

Coming from Flask, I found the name "path operation" strange at first, but it is essentially what Flask calls a route.

Run the server from inside the app directory with:

```shell
$ uvicorn main:app --reload
```

The command uvicorn main:app refers to:

- main: the file main.py (the Python "module").
- app: the object created inside main.py with the line app = FastAPI().
- --reload: restarts the server after code changes. Only use this for development.
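To make the main:app notation concrete, here is a simplified sketch of how a "module:attribute" import string can be resolved with importlib. It is an illustration only, not Uvicorn's actual loader (which also handles nested attributes and reports friendlier errors); the function name load_app is hypothetical.

```python
import importlib
from typing import Any


def load_app(import_string: str) -> Any:
    # Split "main:app" into the module part ("main") and the attribute ("app").
    module_name, sep, attr_name = import_string.partition(":")
    if not sep or not module_name or not attr_name:
        raise ValueError(f'expected "<module>:<attribute>", got {import_string!r}')
    # Import the module as "import main" would; it must be importable
    # from the current working directory or sys.path.
    module = importlib.import_module(module_name)
    # Fetch the module-level object, e.g. the one created by app = FastAPI().
    return getattr(module, attr_name)


if __name__ == "__main__":
    # Any importable module works for a demo; json.dumps stands in for an app.
    print(load_app("json:dumps"))
```

With the layout used here, load_app("main:app") run from inside the app directory would return the FastAPI instance, which is also why the command has to be run from that directory.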

If everything is okay, you should see output similar to the following (the process IDs will differ):

```
INFO: Uvicorn running on http://127.0.0.1:8000 (Press CTRL+C to quit)
INFO: Started reloader process [28720]
INFO: Started server process [28722]
INFO: Waiting for application startup.
INFO: Application startup complete.
```

Open http://127.0.0.1:8000/ in your browser and you should see the JSON response:

```json
{"Hello": "World"}
```

Currently our API only handles HTTP GET requests to the path /. The goal, however, is to create a simple CRUD API. A CRUD app is a specific type of software application built around four basic operations: Create, Read, Update and Delete. We start by creating our dummy database, a simple in-memory list of dictionaries that stores posts:

```python
# dummy in-memory posts
posts = [
    {
        "title": "title of post 1",
        "content": "content of post 1",
        "id": 1,
        "published": True,
        "rating": 8,
    },
    {
        "title": "title of post 2",
        "content": "content of post 2",
        "id": 2,
        "published": True,
        "rating": 6.5,
    },
]
```

For every dummy post we store:

- the title
- the content
- the ID
- the published flag
- the rating
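Because each post stores its own id rather than relying on its position in the list, a new post's ID has to be derived from the posts that already exist. One defensive approach, sketched here with a hypothetical next_id helper, is to take the largest existing ID plus one, with a default for an empty list:

```python
from typing import Any, Dict, List

# Each post is a plain dict, like the entries of the dummy `posts` list.
PostDict = Dict[str, Any]


def next_id(posts: List[PostDict]) -> int:
    # Largest existing id + 1; default=0 makes an empty list start at 1.
    return max((post["id"] for post in posts), default=0) + 1


if __name__ == "__main__":
    posts = [{"id": 1}, {"id": 2}]
    print(next_id(posts))  # 3
    posts.pop()            # delete the post with id 2
    print(next_id(posts))  # 2: the deleted id is handed out again
    print(next_id([]))     # 1
```

With this approach, deleting the post with the highest ID lets that ID be reused; a real database sequence or a separate counter would avoid that.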

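We then create a new path operation to view all posts:

```python
# GET endpoint to get all posts
@app.get("/posts")
def get_posts():
    # Return the list itself so FastAPI serializes it as a JSON array;
    # f"{posts}" would send a single Python-formatted string instead.
    return {"data": posts}
```

Next, we create another path operation to create new posts. Here we need to send data from the client to our API.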

A request body is data sent by the client to your API; a response body is the data your API sends back to the client. We describe how the data (request and response bodies) should look using standard Python type annotations and let Pydantic validate it. To do this, declare the data model as a class that inherits from BaseModel and use standard Python types for all of its attributes:

```python
from typing import Optional

from pydantic import BaseModel

# ...


# request body Pydantic model
class Post(BaseModel):
    title: str
    content: str
    published: bool = True
    # float, so ratings such as 6.5 (as in the dummy data) are accepted
    rating: Optional[float] = None
```

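```python
from fastapi import status

# ...


# POST endpoint to add a post
@app.post("/posts", status_code=status.HTTP_201_CREATED)
def create_posts(post: Post):
    # post.dict() still works on Pydantic v2 but is deprecated there
    # in favour of post.model_dump()
    payload = post.dict()
    # next id = largest existing id + 1 (1 when the list is empty)
    payload["id"] = max((p["id"] for p in posts), default=0) + 1
    posts.append(payload)
    return {"data": payload}
```

We then add two more read endpoints: one that gets a single post by its ID, and one that gets the latest post.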
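Declaration order matters for these two endpoints. FastAPI checks path operations in the order they are declared, and /posts/latest also fits the /posts/{post_id} pattern, so if the ID route is declared first, a request for /posts/latest is routed to it and rejected with a 422 validation error because "latest" is not an integer. The sketch below is a deliberately simplified model of first-match routing built on regular expressions, not Starlette's actual router; template_to_regex and first_match are hypothetical helpers in which each {name} placeholder matches exactly one path segment.

```python
import re
from typing import List, Optional, Tuple


def template_to_regex(template: str) -> "re.Pattern[str]":
    # "/posts/{post_id}" -> ^/posts/(?P<post_id>[^/]+)$ : one segment per placeholder
    regex = re.sub(r"\{(\w+)\}", r"(?P<\1>[^/]+)", template)
    return re.compile(f"^{regex}$")


def first_match(routes: List[str], path: str) -> Optional[Tuple[str, dict]]:
    # Try the templates in declaration order; the first one that matches wins.
    for template in routes:
        m = template_to_regex(template).match(path)
        if m:
            return template, m.groupdict()
    return None


if __name__ == "__main__":
    wrong_order = ["/posts/{post_id}", "/posts/latest"]
    right_order = ["/posts/latest", "/posts/{post_id}"]
    print(first_match(wrong_order, "/posts/latest"))  # ('/posts/{post_id}', {'post_id': 'latest'})
    print(first_match(right_order, "/posts/latest"))  # ('/posts/latest', {})
    print(first_match(right_order, "/posts/7"))       # ('/posts/{post_id}', {'post_id': '7'})
```

In the wrong order, "latest" is captured as post_id; in FastAPI that captured string then fails int validation, which is where the 422 comes from.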

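```python
from fastapi import HTTPException, status


# helper: return the post with the given id, or None if there is none
def find_post(post_id: int):
    for post in posts:
        if post["id"] == post_id:
            return post
    return None


# GET endpoint to get the latest post
# (declared before /posts/{post_id} so "latest" is not parsed as an id)
@app.get("/posts/latest")
def get_latest():
    if not posts:
        raise HTTPException(status_code=status.HTTP_404_NOT_FOUND,
                            detail="there are no posts yet")
    return {"data": posts[-1]}


# GET endpoint to get a post by id, passed as a path parameter
@app.get("/posts/{post_id}")
def get_post(post_id: int):
    post = find_post(post_id)
    if not post:
        raise HTTPException(status_code=status.HTTP_404_NOT_FOUND,
                            detail=f"post with id: {post_id} was not found")
    return {"data": post}
```

Next, we update a single post by its ID.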
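The update and delete handlers both need to locate a post. Indexing the list with post_id - 1 only works while every post sits at position id - 1, which stops being true as soon as a post is deleted. A small standard-library demonstration, using plain dicts shaped like the dummy posts (the index_of helper is hypothetical):

```python
from typing import Any, Dict, List, Optional

PostDict = Dict[str, Any]


def index_of(posts: List[PostDict], post_id: int) -> Optional[int]:
    # Search by the stored id instead of assuming position == id - 1.
    for index, post in enumerate(posts):
        if post["id"] == post_id:
            return index
    return None


if __name__ == "__main__":
    posts = [
        {"id": 1, "title": "first"},
        {"id": 2, "title": "second"},
        {"id": 3, "title": "third"},
    ]
    del posts[0]  # delete the post with id 1

    print(posts[2 - 1]["title"])               # third  (wrong post for id 2)
    print(posts[index_of(posts, 2)]["title"])  # second
    print(index_of(posts, 1))                  # None: id 1 no longer exists
```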

```python
from fastapi import HTTPException, status


# PUT endpoint to update a post
@app.put("/posts/{post_id}")
def update_post(post_id: int, post: Post):
    existing = find_post(post_id)
    if not existing:
        raise HTTPException(status_code=status.HTTP_404_NOT_FOUND,
                            detail=f"post with id: {post_id} was not found")
    # update the stored dict in place; its id is left unchanged
    existing.update(post.dict())
    return {"data": existing,
            "message": f"Successfully updated post with id {post_id}"}
```

The last piece of CRUD is deleting a post by its ID:

```python
from fastapi import HTTPException, Response, status


# DELETE endpoint to delete a post
@app.delete("/posts/{post_id}", status_code=status.HTTP_204_NO_CONTENT)
def delete_post(post_id: int):
    post = find_post(post_id)
    if not post:
        raise HTTPException(status_code=status.HTTP_404_NOT_FOUND,
                            detail=f"post with id: {post_id} was not found")
    # remove the matching dict itself rather than popping index post_id - 1
    posts.remove(post)
    # a 204 response must not have a body
    return Response(status_code=status.HTTP_204_NO_CONTENT)
```

At this point, main.py looks like this:

```python
from typing import Optional

from fastapi import FastAPI, HTTPException, Response, status
from pydantic import BaseModel

# application instance
app = FastAPI()


# request body Pydantic model
class Post(BaseModel):
    title: str
    content: str
    published: bool = True
    rating: Optional[float] = None


# dummy in-memory posts
posts = [
    {
        "title": "title of post 1",
        "content": "content of post 1",
        "id": 1,
        "published": True,
        "rating": 8,
    },
    {
        "title": "title of post 2",
        "content": "content of post 2",
        "id": 2,
        "published": True,
        "rating": 6.5,
    },
]


def find_post(post_id: int):
    # return the post with the given id, or None if there is none
    for post in posts:
        if post["id"] == post_id:
            return post
    return None


# path operations (synonymous with routes)

# root
@app.get("/")
def root():
    message = "Hello am learning FastAPI!!"
    return {"message": message}


# GET endpoint to get all posts
@app.get("/posts")
def get_posts():
    return {"data": posts}


# POST endpoint to add a post
@app.post("/posts", status_code=status.HTTP_201_CREATED)
def create_posts(post: Post):
    payload = post.dict()
    payload["id"] = max((p["id"] for p in posts), default=0) + 1
    posts.append(payload)
    return {"data": payload}


# GET endpoint to get the latest post (must come before /posts/{post_id})
@app.get("/posts/latest")
def get_latest():
    if not posts:
        raise HTTPException(status_code=status.HTTP_404_NOT_FOUND,
                            detail="there are no posts yet")
    return {"data": posts[-1]}


# GET endpoint to get a post by id as a path parameter
@app.get("/posts/{post_id}")
def get_post(post_id: int):
    post = find_post(post_id)
    if not post:
        raise HTTPException(status_code=status.HTTP_404_NOT_FOUND,
                            detail=f"post with id: {post_id} was not found")
    return {"data": post}


# DELETE endpoint to delete a post
@app.delete("/posts/{post_id}", status_code=status.HTTP_204_NO_CONTENT)
def delete_post(post_id: int):
    post = find_post(post_id)
    if not post:
        raise HTTPException(status_code=status.HTTP_404_NOT_FOUND,
                            detail=f"post with id: {post_id} was not found")
    posts.remove(post)
    return Response(status_code=status.HTTP_204_NO_CONTENT)


# PUT endpoint to update a post
@app.put("/posts/{post_id}")
def update_post(post_id: int, post: Post):
    existing = find_post(post_id)
    if not existing:
        raise HTTPException(status_code=status.HTTP_404_NOT_FOUND,
                            detail=f"post with id: {post_id} was not found")
    existing.update(post.dict())
    return {"data": existing,
            "message": f"Successfully updated post with id {post_id}"}
```

Once our application is done, we export the virtual environment's dependencies to a requirements.txt file by running the following from the project root:

```shell
$ pip freeze > requirements.txt
```

Docker is a tool that makes it easier to create, deploy and run applications using containers. With Docker you can package a web application together with its dependencies, environment variables and configuration settings: everything you need to recreate your environment quickly and reliably.

A Docker container is a standard unit of software that packages code together with all of its dependencies, so the application runs quickly and reliably across different computing environments. A Docker image, on the other hand, is the read-only blueprint from which containers are created; a container is a running instance of an image. For Docker to build images automatically, the build instructions are stored in a special file known as a Dockerfile, and Docker executes them when you run docker build from the command line.

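There are two main Docker tools: Docker Engine with its command-line interface (CLI), and Docker Compose. This part only needs Docker Engine and its CLI. I am running Ubuntu 18.04.6 LTS; installation instructions for other operating systems are available in the official Docker documentation. First, remove any older Docker packages: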

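```shell
$ sudo apt-get remove docker docker-engine docker.io containerd runc
```

Update the apt package index and install the packages that allow apt to use a repository over HTTPS:

```shell
$ sudo apt-get update
$ sudo apt-get install ca-certificates curl gnupg lsb-release
```

Add Docker's official GPG key:

```shell
$ curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg
```

Set up the stable repository, so that apt can find the docker-ce packages:

```shell
$ echo \
  "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu \
  $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null
```

Update the apt package index again and install the latest version of Docker Engine and containerd:

```shell
$ sudo apt-get update
$ sudo apt-get install docker-ce docker-ce-cli containerd.io
```

By default, the docker command has to be run as root (with sudo), which I found annoying. To change this, add your username to the docker group:

```shell
$ sudo usermod -aG docker ${USER}
```

For the change to take effect, log out and back in, or reboot your machine.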

Docker can build images automatically by reading the instructions from a Dockerfile, a text document that contains all the commands a user could call on the command line to assemble an image. Running docker build executes these instructions in succession. A Dockerfile follows a specific format and set of instructions, documented in the Dockerfile reference.

We create our Dockerfile at the root of the project directory and add the following instructions to it:

```dockerfile
FROM python:3.6

WORKDIR /fastapi

COPY requirements.txt /fastapi

RUN pip install -r requirements.txt

COPY ./app /fastapi/app

CMD ["uvicorn", "app.main:app", "--host", "0.0.0.0", "--port", "80"]
```

Here is what each instruction means:

- FROM specifies the parent image, python:3.6, that we build on. Its Python version should match the one in your local virtual environment, because requirements.txt pins package versions that may not install on other Python versions.
- WORKDIR sets the working directory to /fastapi for any RUN, CMD, COPY and ADD instructions that follow it in the Dockerfile.
- COPY requirements.txt /fastapi copies the requirements file into /fastapi, and the later COPY ./app /fastapi/app copies the application code. Copying the requirements first lets Docker reuse the cached dependency layer when only the code changes.
- RUN executes a command at build time and commits the result to the image; here it installs the dependencies.
- CMD specifies the default command for a container started from the image, here the Uvicorn server listening on 0.0.0.0, port 80, inside the container. Unlike RUN, CMD does not execute anything at build time. There can only be one CMD instruction in a Dockerfile; if you list more than one, only the last takes effect.

Your project directory should now look like this:

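```
.
├── app
│   ├── __init__.py
│   └── main.py
├── Dockerfile
├── requirements.txt
└── env
```

Build the image from the project root:

```shell
$ docker build -t fastapi_learn .
```

Run a container based on your image, publishing the container's port 80 on port 8000 of the host:

```shell
$ docker run -d -p 8000:80 fastapi_learn
```

Once again, if we visit http://127.0.0.1:8000/ we get:

```json
{"message": "Hello am learning FastAPI!!"}
```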
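To check the container from a script instead of the browser, a minimal standard-library smoke test could look like the sketch below. It assumes the container was started with -p 8000:80 as above; the fetch_json helper name and the 5-second timeout are illustrative choices.

```python
import json
import urllib.request
from typing import Any


def fetch_json(url: str, timeout: float = 5.0) -> Any:
    # Send a GET request and decode the JSON body of the response.
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    # Assumes the container is published on port 8000 of this machine.
    print(fetch_json("http://127.0.0.1:8000/"))
    print(fetch_json("http://127.0.0.1:8000/posts"))
```

urlopen raises urllib.error.HTTPError for error statuses such as 404, so a broken endpoint surfaces as an exception rather than as a silently wrong result.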

As mentioned earlier, FastAPI generates interactive documentation for your API automatically. To try it out, navigate to http://127.0.0.1:8000/docs (Swagger UI) or http://127.0.0.1:8000/redoc (ReDoc).
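Both documentation pages are rendered from the OpenAPI schema that FastAPI serves at /openapi.json by default. As an illustrative sketch (the list_operations helper is hypothetical), this standard-library snippet summarizes the schema's paths into "METHOD path" lines, which is a quick way to confirm that every CRUD endpoint was registered:

```python
import json
import urllib.request
from typing import Any, Dict, List

HTTP_METHODS = {"get", "put", "post", "delete", "patch", "options", "head", "trace"}


def list_operations(schema: Dict[str, Any]) -> List[str]:
    # OpenAPI stores operations under "paths" -> path -> HTTP method.
    operations = []
    for path, item in schema.get("paths", {}).items():
        for method in item:
            # Path items may also hold non-operation keys such as "parameters".
            if method in HTTP_METHODS:
                operations.append(f"{method.upper()} {path}")
    return sorted(operations)


if __name__ == "__main__":
    # Assumes the API is reachable on port 8000, as in the docker run command.
    url = "http://127.0.0.1:8000/openapi.json"
    with urllib.request.urlopen(url, timeout=5) as response:
        schema = json.loads(response.read().decode("utf-8"))
    for line in list_operations(schema):
        print(line)
```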

In this post, you learned how to start using FastAPI to build high-performance APIs, and how to dockerize a FastAPI application. I have only scratched the surface; in part 2, I hope to discuss more advanced topics such as database integration and authentication.
