31

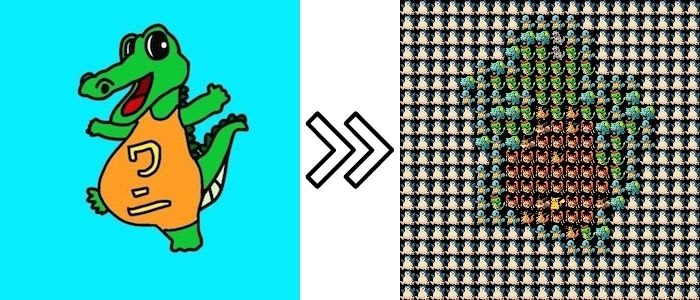

OpenCV in Lambda: Created an API to convert images to Pokémon ASCII art style using AWS and OpenCV

Hey guys 👋👋👋

I have developed an API with AWS and OpenCV, that convert images to Pokémon ASCII art style.

Look this!

It's almost same? lol

In this article, I will explain how to develop this API.

※ This article is the seventh week of trying to write at least one article every week.

I split the image and compared each of them to Pokémon images and replaced them with one that has similar hue.

Since

pip install is not available in Lambda, I used a Docker image to use OpenCV.So, the configuration for this project is as follows.

Here's what I did.

First, download the images from PokeAPI.

Checking the images, there are quite a few margins, so I removed the margins and downloaded the images with a size of 15px*15px.

Checking the images, there are quite a few margins, so I removed the margins and downloaded the images with a size of 15px*15px.

This time, I used Google Colaboratory for this process.

Of course, this is your choice.

All you need is to have downloaded images ready.

Of course, this is your choice.

All you need is to have downloaded images ready.

import requests

import cv2

import numpy as np

import tempfile

import urllib.request

from google.colab import files

# Cropping an image

def crop(image):

# Loading images

img = cv2.imread(image)

# Convert the original image to grayscale

gray_img = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

# Convert grayscale image to black and white

ret_, bw_img = cv2.threshold(gray_img, 1, 255, cv2.THRESH_BINARY)

# Extraction of contours in black and white images

contours, h_ = cv2.findContours(bw_img, cv2.RETR_EXTERNAL, method=cv2.CHAIN_APPROX_NONE)

# Calculate the banding box from the contour.

rect = cv2.boundingRect(contours[-1])

# Crop the source image with the coordinates of the bounding box

crop_img = img[rect[1]:rect[1]+rect[3]-1, rect[0]:rect[0]+rect[2]-1]

return crop_img

# Specify the numbers of the Pokémon you want, like lists = [1,4,7,10,16,24,25,26].

# If you try to download too many images at once, you will end up losing some, so download about 10 at a time.

for i in lists:

url = "https://pokeapi.co/api/v2/pokemon/{}/".format(i)

tmp_input = '{}.png'.format(i)

r = requests.get(url, timeout=5)

r = r.json()

image =r['sprites']['front_default']

urllib.request.urlretrieve(image, tmp_input)

img = crop(tmp_input)

img2 = cv2.resize(img , (15, 15))

cv2.imwrite(tmp_input , img2)

files.download(tmp_input)In this case, due to processing speed, I prepared 30 different images.

I have stored these images in a folder named

I have stored these images in a folder named

pokemons.What I did is the following.

The full code is below.

# app.py

import json

import cv2

import base64

import numpy as np

import glob

def calc_hue_hist(img):

# Convert to H (Hue) S (Saturation) V (Lightness) format.

hsv = cv2.cvtColor(img, cv2.COLOR_BGR2HSV)

#hsv = img

# Separate each component.

h, s, v = cv2.split(hsv)

# Calculated pixel values of an image (histogram)

h_hist = calc_hist(h)

return h_hist

def calc_hist(img):

# Calculate the histogram.

hist = cv2.calcHist([img], channels=[0], mask=None, histSize=[256], ranges=[0, 256])

# Normalize the histogram.

hist = cv2.normalize(hist, hist, 0, 255, cv2.NORM_MINMAX)

# (n_bins, 1) -> (n_bins,)

hist = hist.squeeze(axis=-1)

return hist

def base64_to_cv2(image_base64):

# base64 image to cv2

image_bytes = base64.b64decode(image_base64)

np_array = np.fromstring(image_bytes, np.uint8)

image_cv2 = cv2.imdecode(np_array, cv2.IMREAD_COLOR)

return image_cv2

def cv2_to_base64(image_cv2):

# cv2 image to base64

image_bytes = cv2.imencode('.jpg', image_cv2)[1].tostring()

image_base64 = base64.b64encode(image_bytes).decode()

return image_base64

# Receive json in event { 'img': base64img }

def handler(event, context):

input_img = event['img'] #Assign the second image to the read object "trimmed_img"

input_img = base64_to_cv2(input_img)

height, width, channels = input_img.shape[:3]

max_ruizido = 0

tmp_num = 5

crop_size = 15

ch_names = {0: 'Hue', 1: 'Saturation', 2: 'Brightness'}

poke_imgs = glob.glob('pokemons/*.png')

for i in range(height//crop_size):

for j in range(width//crop_size):

# Split the image into crop_size*crop_size

trimed_img = input_img[crop_size*i : crop_size*(i+1), crop_size*j: crop_size*(j+1)]

max_ruizido = -1

tmp_num = 5

h_hist_2 = calc_hue_hist(trimed_img)

for poke_name in poke_imgs:

poke_img = cv2.imread(poke_name) #Assign the image to the read object "poke_img"

# Get a histogram of the Hue components of an image

h_hist_1 = calc_hue_hist(poke_img)

h_comp_hist = cv2.compareHist(h_hist_1, h_hist_2, cv2.HISTCMP_CORREL)

if max_ruizido < h_comp_hist:

max_ruizido = h_comp_hist

tmp_num = poke_name

if j == 0:

# The leftmost image

tmp_img = cv2.imread(tmp_num)

else:

# Images to be merged

tmp_img2 = cv2.imread(tmp_num)

# Consolidated images

tmp_img = cv2.hconcat([tmp_img, tmp_img2])

if i == 0:

# Now that the first line is complete, move on to the next line

output_img = tmp_img

else:

output_img = cv2.vconcat([output_img, tmp_img])

return {'data': cv2_to_base64(output_img)}Create a Dockerfile to run the program we wrote above.

Prepare the basic image for using python in Lambda.

FROM public.ecr.aws/lambda/python:3.7Next, since Lambda does not allow pip install, we can do

pip install opencv-python or pip install numpy here to be able to use OpenCV and the libraries we need.RUN pip install opencv-python

RUN pip install numpyCopy the app.py and Pokémon images (in the Pokemons folder) that you created into the image.

COPY app.py /var/task/

COPY pokemons/ /var/task/pokemons/Here, the official says

_COPY app.py . /, but this will not work well with OpenCV, so be sure to specify and copy /var/task/.The full code is below.

FROM public.ecr.aws/lambda/python:3.7

RUN pip install opencv-python

RUN pip install numpy

COPY app.py /var/task/

COPY pokemons/ /var/task/pokemons/

CMD [ "app.handler" ]Click on ECR from the tabs on the left of the ECS page.

Then, create repository.

If you go into the repository and click on the View push command, you will find instructions on how to use it.

It's quite easy :)

Next, create a Lambda function to pull the Docker image from the ECR.

Click on "create function" and choose "create from container image" as the method of creating the function.

Specify the name of the function and the URL of the image.

Register the URL of the DockerImage you have pushed in the Container image URI section.

Register the URL of the DockerImage you have pushed in the Container image URI section.

You can copy and paste the URL from the ECR repository screen.

If you don't have an IAM role, you should create one for Lambda.

The default setting is 3 seconds, so this time I extended it to 30 seconds.

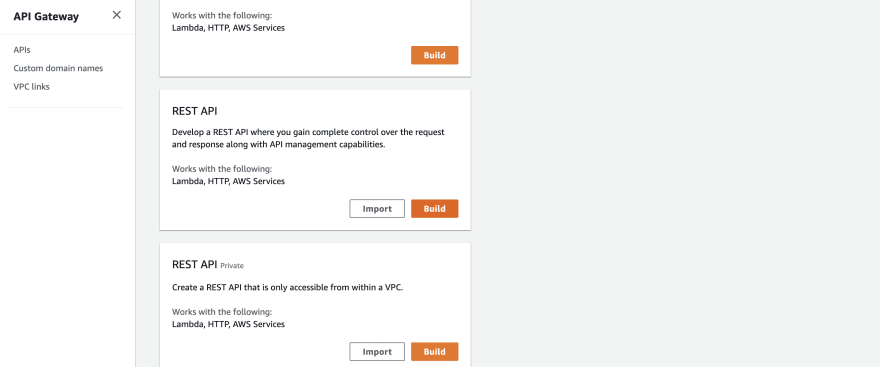

Click Build on the non-private REST API.

Press the Actions button and select Create Resource.

You can name resource names.

Don't forget CORS setting.

Select Create Methods from Actions and choose POST.

Now select the Lambda function you just created.

Now select the Lambda function you just created.

Test it and if it passes, select Deploy API from Actions and deploy it.

I don't know if this is required or not, but when I actually tried to post this API from a website, it was rejected due to the permissions to access Lambda from the API Gateway, so I created and granted a role to access Lambda from the API Gateway.

Then finish!

Thanks for reading.

This is my first time to use OpenCV, and I am happy to make such a funny API :D

This is my first time to use OpenCV, and I am happy to make such a funny API :D

🍎🍎🍎🍎🍎🍎

Please send me a message if you need.

31