
Docker On AWS | AWS Whitepaper Summary

This content is a summary of the AWS whitepaper “Docker on AWS”, written by Brandon Chavis and Thomas Jones. It discusses how to take advantage of the benefits of containers on AWS. I have tried to simplify and gather the most interesting points from each paragraph in order to give readers brief and effective content.

PS: Although many readers skip the introduction, I found that the authors provided an excellent set of information as an opening to the subject. This is why I found it worthwhile to summarize the introduction as well, with an explanatory figure.

Containers benefit every part of an organization. The benefits are:

Speed: Helps all the contributors to software development activities to act quickly.

Because:

- The architecture of containers allows for full process isolation by using Linux kernel namespaces and cgroups. Containers are independent and share the kernel of the host OS, so there is no need for full virtualization or a hypervisor.

- Containers can be created quickly thanks to their modular and lightweight nature. This becomes more noticeable during the development lifecycle. Their granularity makes it easy to version released applications. It also reduces resource sharing between application components, which minimizes compatibility issues.

Consistency: Entire development environments can be relocated by moving a container between systems.

Containers provide predictable, consistent and stable applications at every stage of their lifecycle (development, test and production) because they encapsulate the exact dependencies, which minimizes the risk of bugs.

Density and Resource Efficiency: The enormous community support for the Docker project has increased the density and modularity of computing resources.

• Containers increase the efficiency and the agility of applications thanks to the abstraction from OS and hardware. Multiple containers run on a single system.

• You can balance the resources that containers need against the hardware limits of the host to reach the maximum number of containers per host. This gives higher density, more efficient use of computing resources and lower spending on excess capacity. To reach optimal utilization, you can change the number of containers assigned to a host instead of scaling horizontally.

Flexibility: Based on Docker portability, ease of deployment, and small size.

• Unlike applications that require lengthy installation instructions, Docker provides (just like Git) a simple mechanism to download and install containers and their applications with this command:

$ docker pull

• Docker provides a standard interface: it is easy to deploy wherever you like, and it is portable between different versions of Linux.

• Containers make a microservice architecture possible, in which each service is isolated from the failures of adjacent services and from errant patches or upgrades.

• Docker provides a clean, reproducible and modular environment.

There are two ways to deploy containers in AWS:

AWS Elastic Beanstalk: It is a management layer for AWS services like Amazon EC2, Amazon RDS and ELB.

• It is used to deploy, manage and scale containerized applications

• It can deploy containerized applications to Amazon ECS

• After you specify your requirements (memory, CPU, ports, etc.), it places your containers across your cluster and monitors their health.

• The command-line utility eb can be used to manage AWS Elastic Beanstalk and Docker containers.

• It is used for deploying a limited number of containers

Amazon EC2 Container Service

• Amazon ECS is a highly performant management system for Docker containers on AWS.

• It helps to launch, manage, run distributed applications and orchestrate thousands of Linux containers on a managed cluster of EC2 instances, without having to build your own cluster management backend.

• It offers multiple ways to manage container scheduling, supporting various applications.

• The Amazon ECS container agent is open source and free. It can be built into any AMI to be used with Amazon ECS.

• On a cluster, a task definition is required to define each Docker image (name, location, allocated resources, etc.).

• The minimum unit of work in Amazon ECS is ‘a task’ which is a running instance of a task definition.

About the clusters in this context:

• Clusters are EC2 instances running the ECS container agent, which communicates instance and container state information to the cluster manager and dockerd.

• Instances register with the default or specified cluster.

• A cluster has an Auto Scaling group to satisfy the needs of the container workloads.

• Amazon ECS allows managing a large cluster of instances and containers programmatically.

Container-Enabled AMIs: The Amazon ECS-Optimized Amazon Linux AMI includes the Amazon ECS container agent (running inside a Docker container) and dockerd (the Docker daemon), and it omits packages that are not required.

Container Management:

Amazon ECS provides optimal control and visibility over containers, clusters, and applications with a simple, detailed API. You just need to call the relevant actions to carry out your management tasks.

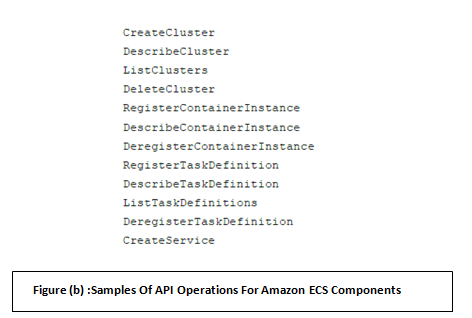

Here is a list containing examples of available API Operations for Amazon ECS.

Scheduling

• Scheduling ensures that an appropriate number of tasks are constantly running, that tasks are registered against one or more load balancers, and that they are rescheduled when a task fails.

• Amazon ECS API actions like StartTask can make appropriate placement decisions based on specific parameters (StartTask decisions are based on business and application requirements).

• Amazon ECS allows the integration with custom or third-party schedulers.

• Amazon ECS includes two built-in schedulers:

- RunTask: randomly distributes tasks across your cluster.

- CreateService: ideally suited to long-running stateless services.
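To make the idea of random distribution concrete, here is a minimal Python sketch. It does not reproduce how Amazon ECS implements RunTask. The instance names and the `place_randomly` helper are hypothetical and exist only for illustration.

```python
import random
from collections import Counter


def place_randomly(task_count, instances, seed=None):
    """Assign each task to a randomly chosen instance (illustrative only)."""
    rng = random.Random(seed)
    return [rng.choice(instances) for _ in range(task_count)]


# Hypothetical container instances registered in a cluster.
instances = ["instance-a", "instance-b", "instance-c"]

# Place six tasks; the seed only makes the sketch repeatable.
placements = place_randomly(6, instances, seed=42)

# Count how many tasks landed on each instance.
print(Counter(placements))
```

With a small number of tasks the spread can be uneven, which is one reason a service scheduler or a custom scheduler may be preferable for workloads with stricter placement needs.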

Container Repositories

• Amazon ECS is repository-agnostic so customers can use the repositories of their choice.

• Amazon ECS can integrate with private Docker repositories running in AWS or an on-premises data center.

Logging and Monitoring

Amazon ECS supports monitoring of cluster contents with Amazon CloudWatch.

Storage

• Amazon ECS lets you store and share information between multiple containers using data volumes. They can be shared on a host as:

•• Empty, non-persistent scratch space for containers

OR

•• Exported volume from one container to be mounted by other containers on mountpoints called containerPaths.

• ECS task definitions can refer to storage locations (instance storage or EBS volumes) on the host as data volumes. The optional parameter that references a directory on the underlying host is called sourcePath. If it is not provided, the data volume is treated as scratch space.

• volumesFrom parameter: defines the storage relationship between two containers. It requires a sourceContainer argument to specify which container's data volume should be mounted.
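The storage parameters above can be sketched as a task definition fragment. The example below is an assumption-laden illustration rather than a definition taken from the whitepaper: the family name, container names, image, memory values and paths are all hypothetical, while `volumes`, `host.sourcePath`, `mountPoints`, `containerPath`, `volumesFrom` and `sourceContainer` are the task definition fields discussed above.

```python
import json

task_definition = {
    "family": "volume-demo",  # hypothetical family name
    "volumes": [
        # sourcePath provided: a host directory is exposed as a data volume.
        {"name": "host-data", "host": {"sourcePath": "/ecs/data"}},
        # No sourcePath: the volume is treated as scratch space.
        {"name": "scratch", "host": {}},
    ],
    "containerDefinitions": [
        {
            "name": "writer",  # hypothetical container
            "image": "busybox",
            "memory": 64,
            # containerPath is the mount point inside the container.
            "mountPoints": [
                {"sourceVolume": "host-data", "containerPath": "/data"},
                {"sourceVolume": "scratch", "containerPath": "/tmp/scratch"},
            ],
        },
        {
            "name": "reader",  # hypothetical container
            "image": "busybox",
            "memory": 64,
            # Mount every data volume defined on the "writer" container.
            "volumesFrom": [{"sourceContainer": "writer"}],
        },
    ],
}

print(json.dumps(task_definition, indent=2))
```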

Networking

• Amazon ECS supports networking features (port mapping, container linking, security groups, IP addresses and resources, network interfaces, etc.).

Security

• AWS customers combine software capabilities (of Docker, SELinux, iptables, etc.) with the AWS security measures (IAM, security groups, NACLs, VPC) provided in the AWS architecture for EC2 and scaled by clusters.

• AWS customers maintain, control and configure the EC2 instances, the OS and the Docker daemon through AWS deployment and management services.

• Security measures are scaled through clusters.

1. Batch jobs

Batch and extract, transform, and load (ETL) jobs can be packaged into containers and deployed into clusters. Jobs then start quickly, and performance improves.

2. Distributed Applications

Containers are used to build:

• Distributed applications, which provide a loosely coupled, elastic and scalable design. They are quick to deploy across heterogeneous servers, as they are characterized by density, consistency and flexibility.

• Microservices, packaged into adequate encapsulation units.

• Batch job processes, which can run on a large number of containers.

3. Continuous Integration and Deployment

Containers are a keystone component of continuous integration (CI) and continuous deployment (CD) workflows. They support streamlined build, test, and deployment from the same container images, and they leverage CI features in tools like GitHub, Jenkins, and DockerHub.

4. Platform as a Service

PaaS is a service model that offers a set of software, tools and an underlying infrastructure, where the cloud provider manages networking, storage, OS and middleware, and the customer configures the resources.

The issue: Users and their resources need to be isolated. This is a challenging task for PaaS providers.

The solution: Containers provide the needed isolation. They also allow template resources to be created and deployed, which simplifies the isolation process.

Also each product offered by the PaaS provider could be built into its own container and deployed on demand quickly.

All the containers defined in a task are placed onto a single instance in the cluster. So a task represents an application with multiple tiers requiring inter-container communication.

Tasks give users the ability to allocate resources to containers, so containers can be evaluated on resource requirements and collocated.
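As a rough illustration of evaluating containers on their resource requirements, the sketch below checks whether all containers of one task fit on a single instance. The `fits_on_instance` helper, the container names and the numbers are hypothetical, and the check is far simpler than real scheduler logic.

```python
def fits_on_instance(containers, instance_cpu, instance_memory):
    """Return True if the summed requirements fit within the instance limits."""
    total_cpu = sum(c["cpu"] for c in containers)
    total_memory = sum(c["memory"] for c in containers)
    return total_cpu <= instance_cpu and total_memory <= instance_memory


# Hypothetical task with two containers (CPU units, memory in MiB).
task_containers = [
    {"name": "web", "cpu": 256, "memory": 512},
    {"name": "cache", "cpu": 128, "memory": 256},
]

# All containers of a task are placed on one instance, so the sums must fit.
print(fits_on_instance(task_containers, instance_cpu=1024, instance_memory=1024))
```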

Amazon ECS provides three API actions for placing containers onto hosts:

RunTask: uses Amazon ECS scheduler logic to place a task on an open host.

StartTask: allows a specific cluster instance to be passed as a value in the API call.

CreateService: allows for the creation of a Service object, which combines a TaskDefinition object with an existing Elastic Load Balancing load balancer.

Service discovery: Solves the challenge of advertising internal container state, such as current IP address and application status, to other containers running on separate hosts within the cluster. The Amazon ECS describe API actions, such as describe-services, can serve as primitives for service discovery functionality.

Since the commands used in this walkthrough can be reused in other, more complex projects, I suggest a bash file that can help to solve repetitive and difficult real-world problems:

View: link

1. Create your first cluster, named ‘Walkthrough’, with the create-cluster command

PS: each AWS account is limited to two clusters.

2. Add instances

If you would like to control which cluster the instances register to (rather than the default cluster), you need to supply UserData that writes the cluster name into the /etc/ecs/ecs.config file.
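A minimal sketch of such UserData follows, generated from Python so the cluster name can be parameterized. The `ecs_user_data` helper is hypothetical. It assumes the container agent reads the cluster name from the `ECS_CLUSTER` variable in /etc/ecs/ecs.config.

```python
def ecs_user_data(cluster_name):
    """Build a user data shell script that sets ECS_CLUSTER in /etc/ecs/ecs.config."""
    return (
        "#!/bin/bash\n"
        f"echo ECS_CLUSTER={cluster_name} >> /etc/ecs/ecs.config\n"
    )


# Script text to pass as UserData when launching the instances.
user_data = ecs_user_data("Walkthrough")
print(user_data)
```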

In this lab, we will launch a web server, so we configure the correct security group permissions and allow inbound access from anywhere on port 80.

3. Run a quick check with the list-container-instances command:

PS: To dig into the instances more, use the describe-container-instances command

4. Register a task definition before running it on the ECS cluster:

a) Create the task definition:

The task definition is created as a JSON file called ‘nginx_task.json’. This specific task launches a pre-configured NGINX container from the Docker Hub repository.

View: link
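For readers without access to the linked file, here is a hedged sketch of what a task definition of this kind might contain. It is not the author's file. The `cpu` and `memory` values are assumptions, while the family name `Walkthrough` and the port 80 mapping follow the walkthrough text.

```python
import json

# Task definition for a single NGINX container pulled from Docker Hub.
# The cpu and memory values are assumptions chosen for illustration.
nginx_task = {
    "family": "Walkthrough",
    "containerDefinitions": [
        {
            "name": "nginx",
            "image": "nginx",
            "cpu": 256,
            "memory": 256,
            "essential": True,
            # Map container port 80 to port 80 on the instance.
            "portMappings": [{"containerPort": 80, "hostPort": 80}],
        }
    ],
}

# Serialize to JSON text, which could then be saved as nginx_task.json.
nginx_task_json = json.dumps(nginx_task, indent=2)
print(nginx_task_json)
```

Saved as nginx_task.json, output like this could be registered with `aws ecs register-task-definition --cli-input-json file://nginx_task.json`.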

b) Register the task definition with Amazon ECS:

5. Run the task with the run-task command:

PS:

• Take note of the taskDefinition instance value (Walkthrough:1) returned after task registration in the previous step.

• To obtain the ARN use the aws ecs list-task-definitions command.
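The sketch below shows how the `family:revision` value from the note above fits into a run-task call. The `run_task_command` helper is hypothetical. It only builds the argument list for the AWS CLI and does not execute it, so passing the list to something like `subprocess.run` is left to the reader.

```python
import shlex


def run_task_command(cluster, family, revision, count=1):
    """Build the argument list for `aws ecs run-task` (not executed here)."""
    return [
        "aws", "ecs", "run-task",
        "--cluster", cluster,
        # Task definitions are referenced as family:revision.
        "--task-definition", f"{family}:{revision}",
        "--count", str(count),
    ]


command = run_task_command("Walkthrough", "Walkthrough", 1)

# Print the command in a form that can be pasted into a shell.
print(shlex.join(command))
```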

6. Test the container: The container port is mapped to port 80 on the instance, so you can use the curl utility to test against the instance's public IP address.
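The same check can be scripted instead of run by hand. This is a small sketch using only the Python standard library. The `check_http` helper is hypothetical, and the URL you pass must be replaced with your own instance's public IP address.

```python
import urllib.error
import urllib.request


def check_http(url, timeout=5):
    """Return the HTTP status code for url, or None if the request fails."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # The server answered, but with an error status such as 404.
        return error.code
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure, and similar problems.
        return None
```

Calling `check_http("http://<public-ip>/")` with the instance's address should return 200 once the NGINX task is running and the security group allows inbound traffic on port 80.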

This whitepaper summary can be a useful resource for those interested in cloud native technologies. It sheds light on containers in general and Docker on AWS in particular. It details the benefits of those technologies, especially when using an EC2 cluster, gives beginners a step-by-step guide to deploying their first container on a cluster, and provides a bash script that helps automate those tasks in more complex projects.
