
Creating a Google Kubernetes Engine Autopilot cluster using Terraform

In the previous part we created our network stack. In this part we will configure the GKE Autopilot cluster.

The following resources will be created:

- GKE Autopilot Cluster

Our GKE Autopilot cluster is hosted in the Web subnet. Its nodes are private, and the public API server endpoint can only be reached from an authorized range of IP addresses.
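As a rough illustration of that restriction, the allowed ranges could be supplied through a variables file. This is only a sketch: it assumes the `authorized_source_ranges` variable used by the cluster configuration is declared as a `list(string)`, and the file name and CIDR values are placeholders taken from documentation-only address ranges, not values from this series.

```hcl
# infra/plan/terraform.tfvars (hypothetical file name and values)
# Assumes authorized_source_ranges is declared as list(string).
authorized_source_ranges = [
  "203.0.113.0/24",  # placeholder: an office network (documentation range)
  "198.51.100.7/32", # placeholder: a single admin workstation
]
```

Terraform loads a file named `terraform.tfvars` automatically, so no extra flag is needed on `terraform apply`. Any address outside these ranges would be refused by the public endpoint.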

Create the Terraform file infra/plan/gke.tf:

    resource "google_container_cluster" "private" {
      provider = google-beta

      name       = "private"
      location   = var.region
      network    = google_compute_network.custom.name
      subnetwork = google_compute_subnetwork.web.id

      # Private nodes, with a public API server endpoint
      private_cluster_config {
        enable_private_endpoint = false
        enable_private_nodes    = true
        master_ipv4_cidr_block  = var.gke_master_ipv4_cidr_block
      }

      # Restrict access to the public endpoint to the authorized source ranges
      master_authorized_networks_config {
        dynamic "cidr_blocks" {
          for_each = var.authorized_source_ranges
          content {
            cidr_block = cidr_blocks.value
          }
        }
      }

      maintenance_policy {
        recurring_window {
          start_time = "2021-06-18T00:00:00Z"
          end_time   = "2050-01-01T04:00:00Z"
          recurrence = "FREQ=WEEKLY"
        }
      }

      # Enable Autopilot for this cluster
      enable_autopilot = true

      # Configuration of cluster IP allocation for VPC-native clusters
      ip_allocation_policy {
        cluster_secondary_range_name  = "pods"
        services_secondary_range_name = "services"
      }

      # Configuration options for the release channel feature, which provides more control over automatic upgrades of your GKE clusters
      release_channel {
        channel = "REGULAR"
      }
    }

Complete the file infra/plan/variable.tf:

    variable "gke_master_ipv4_cidr_block" {
      type    = string
      default = "172.23.0.0/28"
    }

Let's deploy our cluster:

    cd infra/plan
    gcloud services enable container.googleapis.com --project $PROJECT_ID
    terraform apply

Let's check that the cluster has been created and is working correctly:

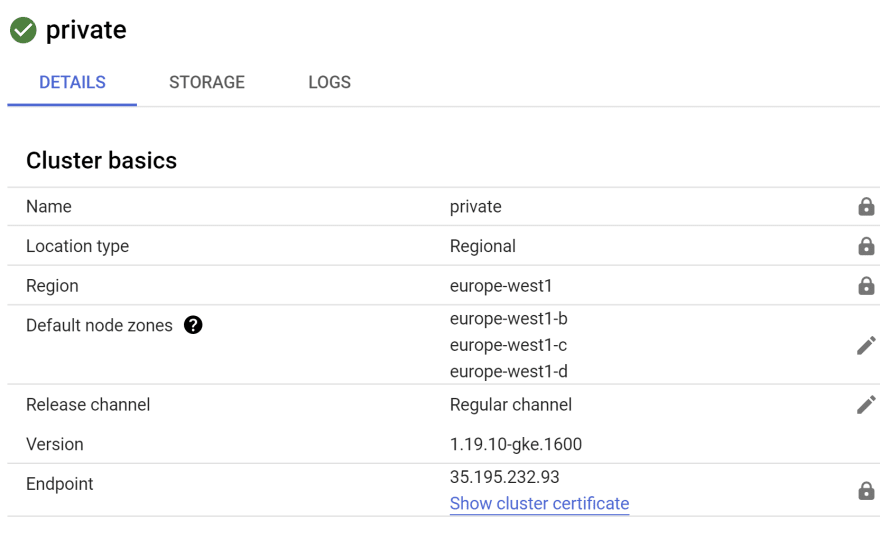

GKE Autopilot cluster
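Besides the console view, the result can also be inspected from Terraform itself. The sketch below is not part of the original plan: it assumes a separate outputs file (the file name and output names are hypothetical) and exposes two attributes exported by the `google_container_cluster.private` resource defined earlier.

```hcl
# infra/plan/outputs.tf (hypothetical file name and output names)
output "gke_cluster_name" {
  description = "Name of the GKE Autopilot cluster"
  value       = google_container_cluster.private.name
}

output "gke_cluster_endpoint" {
  description = "IP address of the cluster's API server endpoint"
  value       = google_container_cluster.private.endpoint
}
```

After another `terraform apply`, running `terraform output gke_cluster_endpoint` should print the endpoint address, which is a quick way to confirm that the cluster exists in the state.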

Our GKE cluster is now active. In the next part, we will focus on setting up the Cloud SQL instance.
