
Notes on Kafka: Brokers, Producers, and Consumers

We had a good overview of what brokers, producers, and consumers are in the previous sections. Now it's time to go deeper! You can skip ahead to the parts you're interested in, or you can go through each one below (which I hope you do!).

As previously tackled, a Kafka cluster is composed of multiple brokers and each broker is basically a server.

- Each broker is identified by an ID (integer)

- Each broker contains certain topic partitions

- A good starting point is 3 brokers

It is also important to note that when you connect to a broker, which is called the bootstrap broker, you're already connecting to the entire cluster.

Also, recall that when you create a topic, the topic and its partitions will be distributed among the available brokers in the cluster. In the example below, we have a cluster of three brokers and three topics.

Each topic has a different number of partitions. For Topic-A, the data streaming to that topic is distributed across its 4 partitions. The same goes for Topic-B, but since this topic has only 3 partitions, it occupies only 3 brokers.

On the other hand, Topic-C has 6 partitions, which means two brokers (BROKER-101 and BROKER-3) will each contain 2 partitions.
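To make the distribution idea concrete, here is a minimal Python sketch that deals partitions out to brokers in round-robin order. It is a toy model, not Kafka's actual assignment logic (the real one also randomizes the starting broker and takes replicas into account). The function name `distribute_partitions` is made up for this illustration, and the broker IDs 101 to 103 are borrowed from the examples in this article.

```python
from collections import defaultdict

def distribute_partitions(topics, broker_ids):
    """Spread each topic's partitions over the brokers in round-robin order.

    Simplification for illustration only; this is not Kafka's real algorithm.
    """
    layout = defaultdict(list)  # broker id -> list of "topic-partition" names
    for topic, partition_count in topics.items():
        for partition in range(partition_count):
            broker = broker_ids[partition % len(broker_ids)]
            layout[broker].append(f"{topic}-{partition}")
    return dict(layout)

brokers = [101, 102, 103]
topics = {"Topic-A": 4, "Topic-B": 3, "Topic-C": 6}
layout = distribute_partitions(topics, brokers)

for broker, partitions in layout.items():
    print(broker, partitions)
```

In this sketch Topic-A's fourth partition wraps around to the first broker, Topic-B puts one partition on each broker, and Topic-C puts two on each.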

Besides specifying the number of partitions when you create a topic, you also need to indicate the replication factor.

- the replication factor should be greater than 1 (normally 2 or 3)

- ensures that when 1 broker is down, another one can serve the data

- example: a replication factor of 2 means 2 copies of each partition

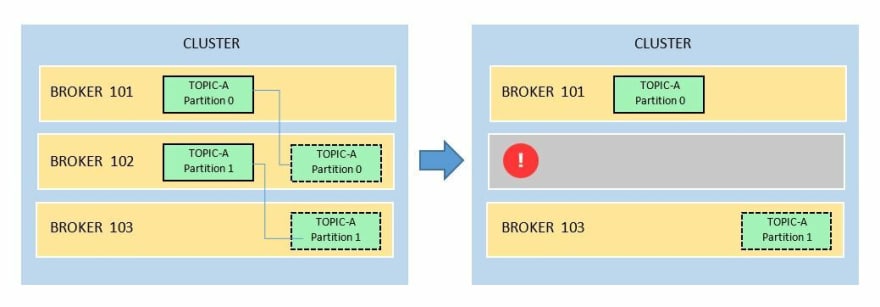

In the example below, we have Topic-A with two partitions. We've also set its replication factor to 2. When broker 102 goes down, the topic will still have both partitions accessible, ensuring that data will still be served.
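The scenario above can be sketched in a few lines of Python. This is a simplified model under my own assumptions: copies of a partition are placed on consecutive brokers, and the helper names `place_replicas` and `available_partitions` are hypothetical, not part of any Kafka API.

```python
def place_replicas(partition_count, replication_factor, broker_ids):
    """Put each partition's copies on consecutive brokers (simplified placement)."""
    if replication_factor > len(broker_ids):
        raise ValueError("replication factor cannot exceed the number of brokers")
    placement = {}
    for partition in range(partition_count):
        placement[partition] = [
            broker_ids[(partition + i) % len(broker_ids)]
            for i in range(replication_factor)
        ]
    return placement

def available_partitions(placement, down_brokers):
    """Partitions that still have at least one copy on a live broker."""
    return [
        partition
        for partition, brokers in placement.items()
        if any(broker not in down_brokers for broker in brokers)
    ]

# Topic-A: 2 partitions, replication factor 2, on brokers 101-103
placement = place_replicas(2, 2, [101, 102, 103])
print(placement)                                          # {0: [101, 102], 1: [102, 103]}
print(available_partitions(placement, down_brokers={102}))  # [0, 1]
```

Even with broker 102 down, each partition still has one copy on a live broker, which is the whole point of the replication factor.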

We've learned so far that topics are split into partitions and these partitions are distributed across the brokers. Each partition also needs to be replicated to make sure that there's fault tolerance.

Between a partition and its replicas, one copy is elected as the leader and the other replica(s) are called in-sync replicas (ISR).

- Only the leader can receive and serve data for that partition

- the in-sync replica will just synchronize the data

- the leader and the ISR are determined through ZooKeeper

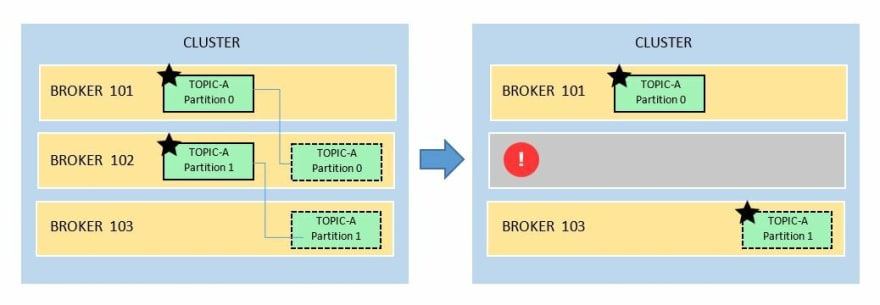

Using the same example we have Topic-A with 2 partitions.

- topic-A-partition-0 in broker 101 is elected the leader.

- this means its replica in broker 102 will be the ISR.

- broker 102 also has topic-A-partition-1 elected as the leader,

- this makes its replica in broker 103 the ISR

In the event broker 102 goes down,

- there will be a re-election for partition 1

- since partition 1 has only 1 ISR (in broker 103), that replica is promoted to leader

- when broker 102 comes back, its copy of topic-A-partition-1 will resume leadership

- partition 1's replica in broker 103 will return to being an ISR.
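Here's a rough sketch of that failover sequence in Python. It assumes every live replica is fully in sync and that the first broker in each list is the preferred leader. Real Kafka tracks the ISR set explicitly and waits for a returning broker to catch up before it can lead again. `elect_leaders` is a made-up helper for illustration.

```python
def elect_leaders(replicas, live_brokers):
    """Pick a leader per partition: the first replica in the list that is alive.

    `replicas` maps partition -> ordered broker list (first entry is the
    preferred leader). Returns partition -> leader broker id, or None if
    no copy is reachable.
    """
    leaders = {}
    for partition, brokers in replicas.items():
        alive = [broker for broker in brokers if broker in live_brokers]
        leaders[partition] = alive[0] if alive else None
    return leaders

# Topic-A: partition 0 on brokers 101/102, partition 1 on brokers 102/103
replicas = {0: [101, 102], 1: [102, 103]}

before = elect_leaders(replicas, live_brokers={101, 102, 103})
during = elect_leaders(replicas, live_brokers={101, 103})       # broker 102 is down
after = elect_leaders(replicas, live_brokers={101, 102, 103})   # broker 102 is back

print(before)  # {0: 101, 1: 102}
print(during)  # {0: 101, 1: 103}
print(after)   # {0: 101, 1: 102}
```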

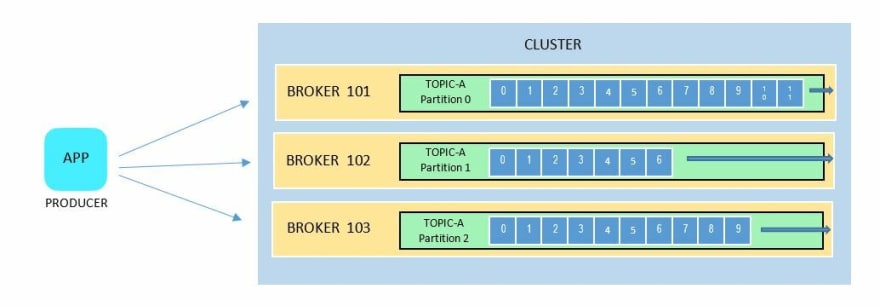

Producers write data into topics. These producers are client apps that automatically know which broker and partition to write to.

- messages are appended to a topic-partition in the order they're sent

- producers automatically recover in case of broker failure

- if no key is specified, it load-balances data into the partitions

Producers can also choose to receive acknowledgement of data writes. This means producers can opt to wait for confirmation from the brokers that the data was written successfully to the partitions. There are three types of acknowledgement:

| Acknowledgment | Description |
|---|---|
| acks=0 | Producer doesn't wait for confirmation. If the producer sends data to a broker that has failed, the data is lost. |
| acks=1 | Leader confirms to the producer that the write succeeded. Limited data loss is possible. |
| acks=all | Producer waits for confirmation from both the leader and the ISR. No data loss. |
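One way to read the table is as "how many brokers must confirm before the producer moves on". The sketch below models just that count. It is a toy function of my own (`confirmations_required` is not a Kafka API). In a real client you would simply set the producer's `acks` configuration and let the library handle the waiting.

```python
def confirmations_required(acks, follower_count):
    """How many brokers must confirm a write before the producer moves on."""
    if acks == "0":
        return 0                    # fire and forget
    if acks == "1":
        return 1                    # the leader only
    if acks == "all":
        return 1 + follower_count   # the leader plus every in-sync replica
    raise ValueError(f"unknown acks setting: {acks!r}")

# One leader with one in-sync replica, as in the Topic-A example
for setting in ("0", "1", "all"):
    print(f"acks={setting}:", confirmations_required(setting, follower_count=1))
```

The more confirmations the producer waits for, the safer the write and the longer each send takes, so the setting is a trade-off between durability and latency.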

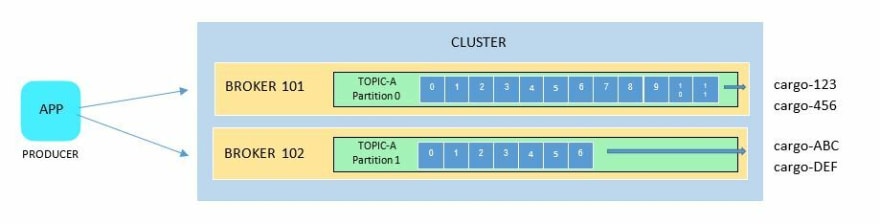

Producers can send a message with a key. Message keys allow the producer to route related data to a specific partition.

- keys can be strings, numbers, etc.

- message keys are used for message ordering for a specific field

- all messages for that key will always go to the same partition

- if no key is specified, data will be sent round-robin to the partitions

- as long as no new partitions are added, messages with the same key will always go to the same partition

Think of keys as tags. As an example, we have a producer sending data to a topic with two partitions below. We can assign:

- key cargo-123 for partition 0

- key cargo-456 for partition 0

- key cargo-ABC for partition 1

- key cargo-DEF for partition 1

All data "tagged" with cargo-123 and cargo-456 will be sent to partition 0, while those "tagged" with cargo-ABC and cargo-DEF will be sent to partition 1.
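Under the hood, the partition is typically derived from a hash of the key rather than picked by hand. The sketch below shows the idea using CRC32 from Python's standard library as a stand-in. Kafka's default partitioner uses a different hash (murmur2), so the partition numbers printed here won't necessarily match the example above or a real cluster. `partition_for` is a hypothetical helper.

```python
import zlib

def partition_for(key, partition_count):
    """Map a key to a partition with a stable hash.

    CRC32 is only a stand-in here; Kafka's default partitioner hashes
    keys with murmur2.
    """
    return zlib.crc32(key.encode("utf-8")) % partition_count

keys = ["cargo-123", "cargo-456", "cargo-ABC", "cargo-DEF"]
for key in keys:
    print(key, "->", partition_for(key, 2))

# The same key always lands on the same partition as long as the
# partition count stays the same.
same = partition_for("cargo-123", 2) == partition_for("cargo-123", 2)
print(same)  # True
```

Because the result depends on `partition_count`, adding partitions to a topic can move a key to a different partition, which is why the guarantee only holds while the number of partitions is unchanged.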

Consumers are the applications on the other end. They read data from a topic and, just like producers, they automatically know which broker and partition to read from.

- data is read in order within each partition

- consumers can also read from multiple topics in parallel

- however each partition is read independently

- consumers can automatically recover in case of broker failure

In the example below, we have two consumers that read from the same topic. Consumer 1 reads from a single partition only while Consumer 2 reads data in parallel from 2 partitions.

In a real-world scenario, consumers actually read data in groups. Consumers within a group read from exclusive partitions.

- consumers read messages in the order they're stored (FIFO)

- if you have consumers > partitions, some consumers will be inactive

- partition assignment is handled automatically through a GroupCoordinator and a ConsumerCoordinator

In the example below, we have three consumer groups - each with its own number of consumer apps.

- group-app-1 has only 2 consumers, so one of them reads from 2 partitions while the other reads from 1

- group-app-3 has 3 consumers so all 3 can read from the 3 partitions

- group-app-2 has 4 consumers so only 3 can read from the partitions, one is inactive
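The three groups above can be modeled with a small assignment function. This is a simplified round-robin sketch, not Kafka's actual assignor implementation. `assign_partitions` and the consumer names `c1` to `c4` are invented for the example.

```python
def assign_partitions(partitions, consumers):
    """Deal partitions out to consumers one at a time.

    Every partition gets exactly one consumer within the group; consumers
    beyond the partition count end up with an empty list (inactive).
    """
    assignment = {consumer: [] for consumer in consumers}
    for index, partition in enumerate(partitions):
        consumer = consumers[index % len(consumers)]
        assignment[consumer].append(partition)
    return assignment

partitions = [0, 1, 2]

two = assign_partitions(partitions, ["c1", "c2"])
three = assign_partitions(partitions, ["c1", "c2", "c3"])
four = assign_partitions(partitions, ["c1", "c2", "c3", "c4"])

print(two)    # {'c1': [0, 2], 'c2': [1]}
print(three)  # {'c1': [0], 'c2': [1], 'c3': [2]}
print(four)   # {'c1': [0], 'c2': [1], 'c3': [2], 'c4': []}
```

With two consumers every partition is still read, just unevenly. With four consumers, `c4` has nothing to do until another consumer leaves the group.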

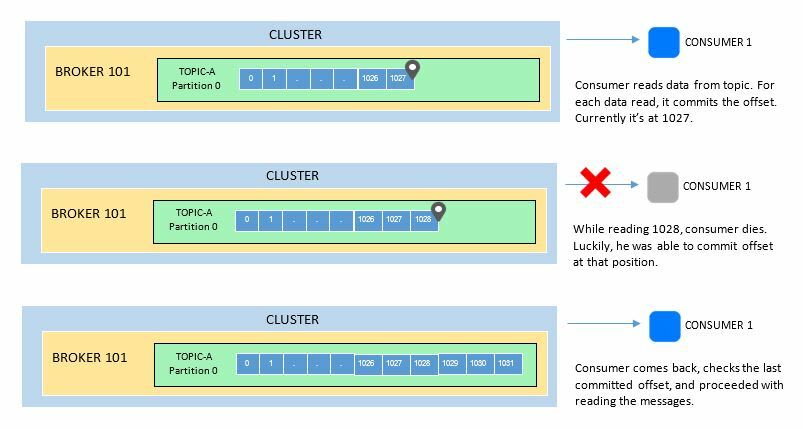

As discussed in the previous sections, offsets are a way to "bookmark" the current position in the partition.

- offsets are committed live in a topic named `__consumer_offsets`

- once a consumer in a group is done processing data, it commits the offsets

- automatically done in the background

The purpose of offsets is to let consumers know where they left off if the consumer dies: the offset "bookmarks" the last read data. When the consumer comes back, it can check the committed offset and resume from the last known position in the partition.
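The bookmark idea can be sketched with a tiny in-memory store. `OffsetStore` is a hypothetical stand-in for the offsets topic, keyed by group and partition. It assumes an unknown group starts from offset 0, whereas real consumers decide this through configuration.

```python
class OffsetStore:
    """Stand-in for the committed-offsets topic: (group, partition) -> next offset to read."""

    def __init__(self):
        self._committed = {}

    def commit(self, group, partition, next_offset):
        self._committed[(group, partition)] = next_offset

    def fetch(self, group, partition):
        # Assumption for this sketch: groups with no commit start at 0.
        return self._committed.get((group, partition), 0)

log = ["m0", "m1", "m2", "m3", "m4"]  # messages in one partition
store = OffsetStore()

# First run: the consumer handles offsets 0-2, commits, then dies.
handled = [log[offset] for offset in range(0, 3)]
store.commit("group-app-1", 0, next_offset=3)

# Restart: resume from the committed position instead of the beginning.
start = store.fetch("group-app-1", 0)
resumed = log[start:]
print(resumed)  # ['m3', 'm4']
```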

Additionally, consumers also have a choice on when to commit offsets. These are called delivery semantics and there are three types:

At most once

- offsets are committed as soon as message is received

- if processing goes wrong, message is lost

At least once (preferred)

- offsets are committed after the message is processed

- if processing goes wrong, message will be read again

- can cause duplicate messages - ensure processing is idempotent

Exactly once

- can only be achieved for Kafka-to-Kafka workflows, using the Kafka Streams API

- for Kafka-to-external-system workflows, use idempotent processing
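The difference between the first two semantics comes down to the order of "commit" and "process". The simulation below is a toy model I put together to show this. It crashes the consumer once, between its two steps for a chosen offset, and then restarts it from the last committed offset. Nothing here is a Kafka API.

```python
def consume(messages, mode, crash_at):
    """Simulate one consumer that crashes once, between its two steps for
    offset `crash_at`, then restarts from the last committed offset.

    mode: "at-most-once" commits first, then processes;
          "at-least-once" processes first, then commits.
    Returns every message the processing step saw, in order.
    """
    committed = 0      # next offset to read after a restart
    processed = []
    crashed = False
    position = 0
    while position < len(messages):
        crash_now = position == crash_at and not crashed
        if mode == "at-most-once":
            committed = position + 1
            if crash_now:                       # crash before processing
                crashed, position = True, committed
                continue
            processed.append(messages[position])
        elif mode == "at-least-once":
            processed.append(messages[position])
            if crash_now:                       # crash before committing
                crashed, position = True, committed
                continue
            committed = position + 1
        else:
            raise ValueError(f"unknown mode: {mode!r}")
        position += 1
    return processed

messages = ["a", "b", "c"]
print(consume(messages, "at-most-once", crash_at=1))   # ['a', 'c']
print(consume(messages, "at-least-once", crash_at=1))  # ['a', 'b', 'b', 'c']
```

With at most once, message `b` is lost. With at least once, `b` is seen twice, which is why the processing step should be idempotent.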

When a client connects to a broker from a cluster, it is automatically connected to all the brokers inside that cluster. This is because each broker knows all of the other brokers, topics, and partitions - this information is called the metadata.

Behind the scenes, this is how broker discovery is done when a Kafka client (producer or consumer) first connects to a broker:

- Kafka client connects to a broker for the first time.

- Kafka client sends a metadata request.

- The broker sends the cluster metadata, including the list of brokers to the client.

- When the client starts producing or consuming, it knows which broker to connect to.
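The discovery steps above can be sketched with two small classes. Everything here is illustrative: the `Broker` and `Client` classes, the metadata layout, and the host names such as `broker-101:9092` are assumptions made for this example, not the real wire protocol.

```python
# Hypothetical cluster metadata shared by every broker in this toy model
CLUSTER_METADATA = {
    "brokers": {
        101: "broker-101:9092",
        102: "broker-102:9092",
        103: "broker-103:9092",
    },
    "leaders": {("Topic-A", 0): 101, ("Topic-A", 1): 102},
}

class Broker:
    def __init__(self, broker_id):
        self.broker_id = broker_id

    def metadata(self):
        """Any broker can answer a metadata request for the whole cluster."""
        return CLUSTER_METADATA

class Client:
    def __init__(self, bootstrap_broker):
        # Steps 1-3: connect, send a metadata request, receive the metadata
        self.metadata = bootstrap_broker.metadata()

    def broker_for(self, topic, partition):
        # Step 4: look up which broker leads the partition we want
        leader_id = self.metadata["leaders"][(topic, partition)]
        return self.metadata["brokers"][leader_id]

# Bootstrapping through broker 103 still lets us find the leader on broker 102
client = Client(Broker(103))
target = client.broker_for("Topic-A", 1)
print(target)  # broker-102:9092
```

It doesn't matter which broker the client bootstraps from, since each one returns the same cluster-wide metadata.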

Awesome job getting to the end!

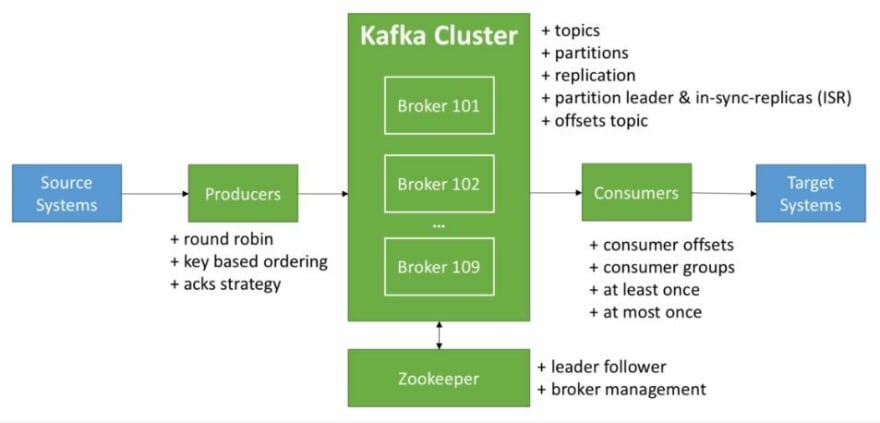

Whew, this article has covered a lot and I'm pretty sure you've gained a ton too. Now, before we conclude the Kafka theory and proceed with the labs, here's a summary of all we've discussed.

Photo is from Stephane Maarek's course - such an extraordinary instructor! If you'd like to know more, you can check out his course, along with other ones that I find useful:

Apache Kafka Series - Learn Apache Kafka for Beginners v2 by Stephane Maarek

Getting Started with Apache Kafka by Ryan Plant

Apache Kafka A-Z with Hands on Learning by Learnkart Technology Private Limited

The Complete Apache Kafka Practical Guide by Bogdan Stashchuk

If you find this write-up interesting, I'll be glad to talk and connect with you on Twitter! 😃
