
Ethics in Artificial Intelligence: From A to Z

Artificial intelligence ethics is something everyone’s talking about now. Self-driving cars, facial recognition, and critical assessment systems are all cutting-edge solutions that raise a lot of ethical questions. In this post, we are going to talk about the common problems that today’s AI faces and how AI ethicists try to fix them.

I assume that if you’re reading this post, you have encountered the term ‘artificial intelligence’ before and know what it means. If not, please check out our post about artificial intelligence first; it will help.

It is also possible that the word ‘ethics’ is a bit unclear to you. No worries, here’s a simple explanation.

There are three main subfields of ethics.

Normative ethics regulates behavior. It tells us what the right or the wrong thing to do is. The Golden Rule, for example, is a principle of normative ethics: treat others the way you want to be treated.

Applied ethics studies moral problems and dilemmas that often arise in personal, political, or social life. An example of an applied ethical issue is whether euthanasia should be legalized.

Meta-ethics studies the theory of ethics. It defines ethical concepts, asks whether ethical knowledge is even possible, and so on.

Ethics of AI belongs to the field of applied ethics. It is concerned with two things: the moral behavior of the humans who create AI, and the behavior of the machines themselves.

Artificial intelligence is a tool. Whether it’s good or bad depends on how you use it. Use AI to cure cancer? Good. Use it to steal credit card PINs from innocent citizens? Bad. After all, stealing is illegal (duh).

But what do we do when things are not that obvious? For example, what if we lived in a world where virtual assistant designers were mostly men, who gave their bots a female gender and taught them to respond to harassing or rude comments with a flirtatious joke? Oh, wait a minute.

It’s not illegal to create virtual assistants. It’s not even illegal to expect women to act in a certain way. But is it morally acceptable to build biased technology?

Ethics in artificial intelligence is there to tell us when we’re doing the wrong thing, even if the law has nothing against it. The law can never keep pace with scientific developments and is always playing catch-up, hence the need for ethics. It is up to tech companies to set boundaries for themselves.

Every society lives by certain rules. Some of them have made it into the legal system. For example, in many countries, copyright infringement is illegal.

At the same time, some rules aren’t written anywhere. No law requires you to help a lost 5-year-old find his parents or an old lady cross the road. But you would still do it, even if no one were looking.

Humans have moral values that police their behavior much more effectively than fear of legal punishment. And we are not the only ones.

For years, scientists believed that humans were the only species capable of empathy. They conducted multiple experiments trying to get animals, from primates to elephants and rats, to help each other, and didn’t discover much altruism in their relationships.

But Franciscus Bernardus Maria (Frans) de Waal didn’t agree. He spent decades studying the social relationships of primates, and he found that in many cases the animals simply didn’t understand the complex interactions the scientists proposed. When de Waal and his research team designed more animal-friendly experiments, they made many interesting discoveries. Elephants turned out to be capable of teamwork, and chimpanzees demonstrated great respect for private property. Other animals, like dolphins and bonobos, were also quite pro-social. All because selfish behavior doesn’t help a species survive in the long run.

Today we live side by side with artificial intelligence. Even though today’s AI isn’t as intelligent as the androids from Detroit: Become Human, it already actively participates in important decision-making. For instance, during the COVID-19 crisis, algorithms were used to help decide who should receive medical help and supplies first.

If we let AI make such important decisions for us, we need to make sure that it’s on our side. Even when it’s legally compliant, AI can still be harmful. AI should inherit moral values from us and, basically, learn how to be one of the good guys.

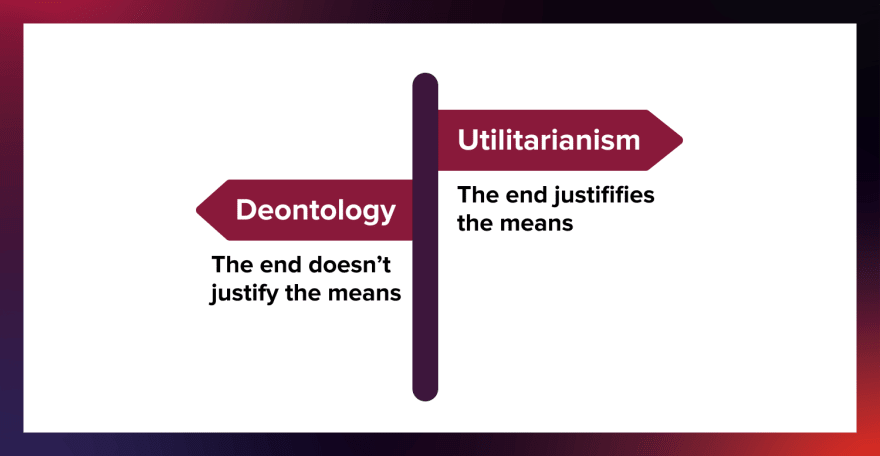

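The ethics of artificial intelligence is an active field of research today. Among all the ethical and philosophical frameworks, two prevail: utilitarianism and deontology. Utilitarianism judges an action by its consequences: the right choice is the one that produces the greatest good for the greatest number of people.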

Deontology judges an action by whether it follows moral rules or duties, rather than by its consequences. It is often associated with Immanuel Kant, who believed that because every person has the ability to reason, they have inherent dignity. Therefore, it is immoral to violate or disrespect this dignity. For example, we shouldn’t treat a person merely as ‘a means to an end’.

Many ethicists today think that deontology is the approach that should guide the development of AI systems: we can encode rules in their programs, and those rules should keep AI on our side. Still, the consequences of closely following a predefined set of rules can be rather unpredictable. It is hard to write rules that are guaranteed not to turn the whole universe into paperclips (a nod to Nick Bostrom’s ‘paperclip maximizer’ thought experiment, in which an AI told to make paperclips pursues that goal at the expense of everything else).

That is also why it’s impossible not to mention the sci-fi writer Isaac Asimov. As early as the 1940s, he proposed the Three Laws of Robotics, which guide the moral actions of machines in his Robot series:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.
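To make the idea of “encoding rules” a little more concrete, here is a minimal Python sketch of how the strict priority ordering in Asimov’s laws could be expressed. It assumes every candidate action has already been labeled with three hypothetical yes/no flags (`harms_human`, `disobeys_order`, `endangers_self`); the `Action` class and `choose_action` function are illustrative names, not part of any real robotics system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """A candidate action with hypothetical, pre-computed rule-violation flags."""
    name: str
    harms_human: bool     # would break the First Law (including harm through inaction)
    disobeys_order: bool  # would break the Second Law
    endangers_self: bool  # would break the Third Law


def law_violations(action: Action) -> tuple[bool, bool, bool]:
    # Tuples compare element by element, so a First Law violation outweighs
    # any combination of Second and Third Law violations, and so on down.
    return (action.harms_human, action.disobeys_order, action.endangers_self)


def choose_action(candidates: list[Action]) -> Action:
    """Pick the action whose violations are least severe in priority order."""
    return min(candidates, key=law_violations)


options = [
    Action("obey order", harms_human=True, disobeys_order=False, endangers_self=False),
    Action("refuse order", harms_human=False, disobeys_order=True, endangers_self=False),
    Action("refuse and power down", harms_human=False, disobeys_order=True, endangers_self=True),
]
print(choose_action(options).name)  # refuse order
```

The ordering itself is the easy part. The unpredictability described above lives in the flags: deciding whether an action “harms a human” is exactly the judgment no simple rule can make for us.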

Even though a lot of time has passed, many AI researchers and developers agree that our goals today should not stray too far from these rules. Asimov’s laws anticipate core principles of AI ethics such as beneficence and non-maleficence (doing good and avoiding harm); modern guidelines build on them with principles like fairness and privacy protection.

Here are some of the issues that have made ethics such a necessary field in AI today.

When we’re talking about problems concerning AI algorithms, fairness and bias are among the most frequently mentioned.

We want to treat all people equally. First, because, like Immanuel Kant, we believe that all humans deserve respect. Second, because fairness is fundamental to a stable society: when a gender or ethnic minority feels discriminated against, it creates distress and unease in society. AI is slowly becoming one of our social institutions, and most people expect their social institutions to be fair (or, at least, to strive to be fair).

The trouble with fairness, however, is that it is very complicated. How do you define it as a set of concrete rules that an algorithm can understand?
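One way to answer that question is to pick a single, measurable definition. The Python sketch below uses demographic parity: every group should receive positive decisions (say, loan approvals) at roughly the same rate. This is only one of several competing fairness definitions, some of which cannot be satisfied at the same time. The data format, a list of `(group, approved)` pairs, and the function names are assumptions made for illustration.

```python
from collections import defaultdict


def selection_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of positive decisions per group."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        positives[group] += int(approved)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(decisions: list[tuple[str, bool]]) -> float:
    """Largest difference in selection rate between any two groups (0.0 means parity)."""
    rates = selection_rates(decisions)
    return max(rates.values()) - min(rates.values())


# Hypothetical toy decisions, not real data.
decisions = [
    ("group_a", True), ("group_a", True), ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]
print(selection_rates(decisions))         # {'group_a': 0.75, 'group_b': 0.25}
print(demographic_parity_gap(decisions))  # 0.5
```

A gap like this can flag a system for review, but the number says nothing about why the gap exists or whether equal rates are even the right goal for a given decision. That part still needs human ethical judgment.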

Bias is an obstacle to achieving fairness. When we talk about humans, by cognitive bias we usually mean partiality toward or against a thing, event, or group of people. The danger of bias is that people usually aren’t aware of it: to them, it just feels like common sense.

Computers can also be biased. Sometimes it’s because of algorithmic logic encoded by biased people. But more often, AI is prejudiced against a group of people because of skewed training data. For example, Amazon’s experimental hiring system penalized women’s CVs, not because its developers didn’t want women hired, but because it learned from historical data in which few women had held these roles.

Imagine you built a facial recognition system for the metro to automatically identify and catch criminals. It worked fine, matching passengers with the faces of wanted criminals, until one day it made a mistake. And again, and again. Several innocent people were detained, and you had to bring in a team of developers to fix the bugs that caused the system to fail.

But with AI, it’s not always possible to tell why it reached a certain conclusion. Facial recognition systems are usually based on neural networks and work on the so-called “black box” principle. You feed them data and ask them to find patterns, but which rules they will learn is almost impossible to predict. More importantly, you can’t open them up and read the rules they use.

For example, a computer vision model used to diagnose an illness may give positive diagnoses more often for photos that were taken in a medical office. If a person is already in a medical office, there is a higher probability that they are sick. But, quite obviously, being in the doctor’s office does not make people sick.
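Here is a toy illustration of that shortcut, assuming a hypothetical dataset in which each photo has been reduced to two yes/no features: `in_office` (taken in a clinic) and `visible_lesion` (a weaker but medically relevant signal). The “model” is deliberately the simplest possible one, a single-feature rule sometimes called a decision stump, rather than a neural network, but the failure is the same: it picks whichever signal best matches the labels, whether or not that signal causes the illness.

```python
Record = tuple[dict[str, bool], bool]  # (features, is_sick)

# Hypothetical toy data: every sick patient happened to be photographed in a clinic.
records: list[Record] = [
    ({"in_office": True, "visible_lesion": True}, True),
    ({"in_office": True, "visible_lesion": False}, True),
    ({"in_office": True, "visible_lesion": True}, True),
    ({"in_office": True, "visible_lesion": False}, True),
    ({"in_office": False, "visible_lesion": False}, False),
    ({"in_office": False, "visible_lesion": True}, False),
    ({"in_office": False, "visible_lesion": False}, False),
    ({"in_office": False, "visible_lesion": False}, False),
]


def feature_accuracy(data: list[Record], feature: str) -> float:
    """How often predicting 'sick' exactly when the feature is True matches the label."""
    hits = sum(features[feature] == is_sick for features, is_sick in data)
    return hits / len(data)


def learn_stump(data: list[Record]) -> str:
    """'Train' by choosing the single feature with the best training accuracy."""
    feature_names = data[0][0].keys()
    return max(feature_names, key=lambda name: feature_accuracy(data, name))


print(feature_accuracy(records, "in_office"))       # 1.0
print(feature_accuracy(records, "visible_lesion"))  # 0.625
print(learn_stump(records))                         # in_office
```

Nothing in the training accuracy warns you: the shortcut only shows up when the model meets a sick patient photographed at home. In a neural network, the same shortcut is spread across millions of weights, which is why it is so hard to find.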

This lack of visibility is a problem when these systems are used to make decisions that affect people’s lives. A development team can spend years tracking down what causes the wrong predictions.

Moreover, there is a moral issue involved: a patient deserves to know not only their diagnosis but also what the doctor based it on. Imagine this situation: the family of a critically ill patient is told that their relative will be taken off life support. They ask whether it’s necessary and whether something can still be done, and receive the answer: “We don’t know, our AI told us it would be more optimal.” Or think of a risk assessment system: if you’re on trial, you would probably like to know for sure that you aren’t being given a harsher sentence just because of your skin tone.

Machines today make mistakes and, most likely, will continue making them in the future. But it is much less clear who is responsible for these mistakes. For example, who is accountable for a self-driving car accident: the car manufacturer, the owner, or the algorithm itself?

In AI ethics, responsibility is understood in three dimensions:

- Which people (or groups of people) are accountable for the impact of algorithms or AI?

- How can society use and develop AI in a way that is legally and morally acceptable?

- What moral and legal responsibility should we demand from the AI system itself?

Responsibility can be legal or moral. Legal responsibility describes the events and circumstances in which legal punishment must follow. An actor is legally responsible for an action when their guilt has been established through the legal system.

Moral responsibility and legal responsibility aren’t always the same thing. In philosophy, moral responsibility is usually understood as deserving praise or blame for one’s actions. It is an inner status: while the norms that exist in society might influence it, every person decides for themselves which values they consider morally binding.

If you want to continue learning about the ethics of AI, here are some resources you may find useful:

- Einstein AI has an archive of relevant scientific papers about the ethics of AI.

- If you’re looking for concrete guidelines for implementing AI ethics, check out the European Commission’s website.

- For the US AI ethics strategy, the main source of information is the US Department of Defense website.

- In the Stanford Encyclopedia of Philosophy, you will find more information about the philosophy and ethics of AI and robotics.

And, of course, stay tuned to our blog! We will continue to publish materials about the responsible use of technology.
