Create, Train, Race your first AWS DeepRacer Model

Ever wanted to race in F1 against legends like Daniel Ricciardo? For someone like me, who is very passionate about F1 and machine learning but whose driving skills are insanely bad, there is only one way to make that dream come true: AWS DeepRacer.

In this tutorial, we will look at what AWS DeepRacer is and the machine learning concepts behind it, and then create and race our first DeepRacer model.

AWS DeepRacer is a cloud-based 3D racing simulator, a fully autonomous 1/18th-scale race car driven by reinforcement learning, and a global racing league. We can build models in Amazon SageMaker, then train, test and iterate on them quickly and easily on the track in the AWS DeepRacer 3D racing simulator. It offers an interesting and fun way to get started with reinforcement learning (RL). RL is an advanced machine learning (ML) technique that can learn very complex behaviours without requiring any labelled training data, and can make short-term decisions while optimizing for a longer-term goal.
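To make "short-term decisions while optimizing for a longer-term goal" a little more concrete: an RL agent typically tries to maximise the discounted return, the sum of future rewards in which a reward k steps ahead is weighted by γ^k for some discount factor 0 ≤ γ ≤ 1. The sketch below computes it for a made-up episode; the reward values and the two γ values are illustrative assumptions, not DeepRacer defaults.

```python
def discounted_return(rewards, gamma):
    """Return sum_k gamma**k * rewards[k] for a finite episode."""
    total = 0.0
    # Walk backwards so each earlier step adds one more factor of gamma:
    # G_t = r_t + gamma * G_{t+1}
    for r in reversed(rewards):
        total = r + gamma * total
    return total


# Illustrative episode (assumed values): small rewards now, a big one at the end.
rewards = [0.1, 0.1, 0.1, 10.0]

print(discounted_return(rewards, gamma=0.0))  # only the first (immediate) reward counts
print(discounted_return(rewards, gamma=0.9))  # the large final reward still dominates
```

With γ = 0 the agent only cares about the immediate reward; with γ close to 1 it is willing to collect small rewards now on the way to a large payoff later. DeepRacer exposes a discount factor among its training hyperparameters, which plays this role.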

AWS DeepRacer Evo is the next generation in autonomous racing. It comes fully equipped with stereo cameras and a LiDAR sensor to enable object avoidance and head-to-head racing, giving developers everything they need to take their racing to the next level. In object avoidance races, developers use the sensors to detect and avoid obstacles placed on the track. In head-to-head races, developers race against another DeepRacer on the same track and try to avoid it while still turning in the best lap time. The stereo cameras consist of forward-facing left and right cameras, which help the car learn depth information from images. This information can then be used to sense and avoid objects the car approaches on the track. The LiDAR sensor is backward-facing and detects objects behind and beside the car. In this tutorial, we will use a simulated car and track.

Before jumping into creating a model, it is very important to understand some basic concepts of Reinforcement Learning.

In reinforcement learning, models learn by a continuous process of receiving rewards and punishments for every action taken. It’s all about rewarding positive actions and punishing negative ones.

Let’s take an example. Imagine a simple game wherein you have a robot that runs from left to right and must avoid obstacles by jumping over them at the right time. If your robot successfully clears an obstacle, it gets 1 point, but if the robot runs into it, it loses points. The goal of the game, of course, is for your robot to clear the obstacles and avoid a crash. Although simple in concept, this is a great starting point for understanding how reinforcement learning works and how you can use it to solve a concrete challenge.
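The scoring rule of this game is itself a tiny reward function. Here is a minimal sketch; the event names and the size of the penalty (-1) are assumptions, since the game only says the robot "loses points" when it crashes.

```python
# Hypothetical events the robot can experience at each obstacle.
CLEARED = "cleared"
CRASHED = "crashed"


def robot_reward(event):
    """Reward for a single obstacle encounter in the toy game."""
    if event == CLEARED:
        return 1.0   # successful jump: +1 point
    if event == CRASHED:
        return -1.0  # assumed penalty for running into the obstacle
    raise ValueError(f"unknown event: {event!r}")


def episode_score(events):
    """Total reward collected over one run of the game."""
    return sum(robot_reward(e) for e in events)


run = [CLEARED, CLEARED, CRASHED, CLEARED]
print(episode_score(run))  # prints 2.0
```

Over many runs, an RL agent learns which jump timings lead to runs with a higher total score.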

I highly recommend taking the free AWS DeepRacer e-learning course to understand the underlying concepts better.

First, we need an AWS account. Sign up for a free account if you don't have one, and set the region to us-east-1 (N. Virginia). Then let’s get started.

Note: This exercise is designed to be completed in your AWS account. AWS DeepRacer is part of the AWS Free Tier, so you can get started with the service at no cost. For the first month after sign-up, you are offered a monthly free tier of 10 hours of Amazon SageMaker training and 60 simulation units of Amazon RoboMaker (enough to cover 10 hours of training). If you go beyond those free tier limits, you will accrue additional costs. For more information, see the AWS DeepRacer Pricing page.

Go ahead and click “Create Model”, then give the model a relevant name and description.

We need a track to train our DeepRacer model on, so let’s go with re:Invent 2018. You can experiment with other tracks later.

To keep things simple, let’s select Time Trial as our race mode. The objective is to complete the race in the fastest possible time.

Now we need to concentrate on two important things that will make our DeepRacer model race better than other models: the reward function and the hyperparameters.

As discussed earlier, the reward function rewards the agent for good actions and penalises it for incorrect ones.

Let’s design a simple reward function, based on a reward template that is already available in AWS DeepRacer. It makes the DeepRacer car stay as close as possible to the center line: the further the car drifts from the center, the smaller its reward, and if it moves off the track, it is penalised.

```python
def reward_function(params):
    '''
    Example of rewarding the agent to follow center line
    '''
    # Read input parameters
    track_width = params['track_width']
    distance_from_center = params['distance_from_center']

    # Calculate 3 markers that are at varying distances away from the center line
    marker_1 = 0.1 * track_width
    marker_2 = 0.25 * track_width
    marker_3 = 0.5 * track_width

    # Give higher reward if the car is closer to center line and vice versa
    if distance_from_center <= marker_1:
        reward = 1.0
    elif distance_from_center <= marker_2:
        reward = 0.5
    elif distance_from_center <= marker_3:
        reward = 0.1
    else:
        reward = 1e-3  # likely crashed/ close to off track

    return float(reward)
```
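The template above only looks at the distance from the center line. DeepRacer also passes an `all_wheels_on_track` flag in `params`, so one possible variation (a sketch, not an official template) penalises the car explicitly as soon as it leaves the track. The hand-written `params` dictionaries at the end are hypothetical inputs for a quick local check; during training, the simulator supplies them.

```python
def reward_function(params):
    '''
    Follow the center line, with an explicit penalty for leaving the track
    '''
    # Read input parameters supplied by the simulator
    all_wheels_on_track = params['all_wheels_on_track']
    track_width = params['track_width']
    distance_from_center = params['distance_from_center']

    # Any wheel off the track: return the minimum reward straight away
    if not all_wheels_on_track:
        return 1e-3

    # Otherwise, reward closeness to the center line in three bands
    if distance_from_center <= 0.1 * track_width:
        reward = 1.0
    elif distance_from_center <= 0.25 * track_width:
        reward = 0.5
    elif distance_from_center <= 0.5 * track_width:
        reward = 0.1
    else:
        reward = 1e-3

    return float(reward)


# Quick local check with hand-made (hypothetical) inputs
print(reward_function({'all_wheels_on_track': True, 'track_width': 1.0, 'distance_from_center': 0.05}))
print(reward_function({'all_wheels_on_track': False, 'track_width': 1.0, 'distance_from_center': 0.05}))
```

The early return keeps the off-track case separate from the distance bands, so a car that is near the center but has a wheel over the edge still gets the minimum reward.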

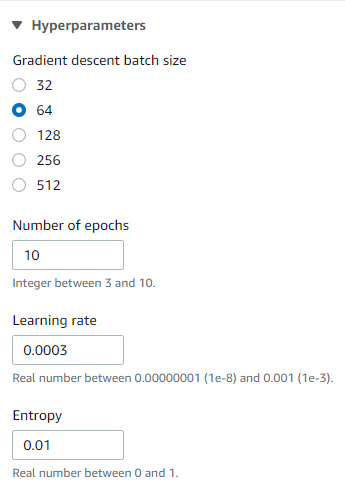

The hyperparameters of a model are the settings we supply to it externally, rather than values it learns during training. Though we can go with the default values, we can experiment with each hyperparameter to see whether we can reach better performance. It is largely a trial-and-error process.
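Trial and error is easier to manage if you write down the combinations you plan to try and run one training job per combination. The DeepRacer console exposes hyperparameters such as the learning rate, the discount factor and the gradient descent batch size; the dictionary keys below are shorthand names and the candidate values are arbitrary examples chosen for illustration, not recommended settings or defaults.

```python
from itertools import product

# Hypothetical candidate values to try (not DeepRacer defaults)
search_space = {
    "learning_rate": [0.0003, 0.0001],
    "discount_factor": [0.99, 0.999],
    "batch_size": [32, 64],
}


def candidate_configs(space):
    """Yield every combination of the candidate values as a dict."""
    names = list(space)
    for values in product(*(space[n] for n in names)):
        yield dict(zip(names, values))


configs = list(candidate_configs(search_space))
print(len(configs))  # prints 8 (2 * 2 * 2 combinations)
for cfg in configs:
    print(cfg)
```

Each combination means a separate training run, so the grid grows quickly; within a 10-hour free tier, a few deliberately chosen runs are usually more practical than an exhaustive sweep.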

Before we start training, let’s set the stopping condition, i.e. the maximum training time. Longer training often improves performance, but it also costs more. Keeping the free tier limit and costs in mind, let’s set it to 60 minutes.
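A quick back-of-the-envelope check of how far the free tier goes, assuming each minute of training uses one minute of the 10 hours of SageMaker training quoted in the pricing note and ignoring evaluation runs and any other usage:

```python
FREE_TIER_TRAINING_HOURS = 10  # monthly SageMaker training allowance quoted above
TRAINING_MINUTES_PER_RUN = 60  # stopping condition chosen for this model


def runs_within_free_tier(free_hours, minutes_per_run):
    """Number of complete training runs that fit in the free-tier allowance."""
    return (free_hours * 60) // minutes_per_run


print(runs_within_free_tier(FREE_TIER_TRAINING_HOURS, TRAINING_MINUTES_PER_RUN))  # prints 10
```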

We need to wait 60 minutes for the training to complete before we can evaluate the trained model. Let’s grab a cup of coffee in the meantime :)

Once the training is complete, go to the Evaluation tab and view a simulation of the trained AWS DeepRacer model driving on the simulated track.

Congrats! We have successfully trained and raced our first AWS DeepRacer model.

I know the model’s performance is not up to the mark yet. That is because we used a simple reward function, default hyperparameters and a short training time.

In the next part of this tutorial series, we will see how we can improve our AWS DeepRacer model.

If you have read this far, I appreciate your patience and interest. Do like, save and share if you find this article interesting, and follow me for the next part!

The race is on, gentlemen!